ML & AI

Master Machine Learning & Artificial Intelligence: Unlock the Power of Data and Automation

Step into the world of Machine Learning (ML) and Artificial Intelligence (AI) with our in-depth courses designed for all skill levels. Learn the key concepts, algorithms, and techniques behind ML and AI, and how they’re transforming industries such as healthcare, finance, and technology. From supervised and unsupervised learning to deep learning and neural networks, our courses provide hands-on experience with the latest tools and frameworks. Whether you’re a beginner or an advanced professional, develop the expertise to build intelligent systems and drive innovation with ML & AI.

Key Assumptions for Linear Regression: Ensuring Model Validity

Understanding the Assumptions of Linear Regression: Linear regression is a powerful statistical technique used for modeling the relationship between a dependent variable and one or more independent variables. While the model can be highly effective for making predictions, its validity and accuracy depend on certain assumptions being met. These assumptions ensure that the model fits…

Understanding Linear Regression and Key Metrics: P-Value, Coefficients, and R-Squared

What is Linear Regression? Linear regression is one of the simplest and most widely used statistical methods in predictive modeling. It is a technique used to understand the relationship between a dependent variable (also called the target or output) and one or more independent variables (also known as predictors or features). The goal is to…

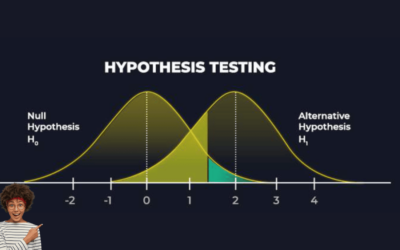

Understanding Type I and Type II Errors in Hypothesis Testing

What are Type I and Type II Errors? In statistical hypothesis testing, researchers use tests to make inferences about a population based on sample data. However, as with any process of decision-making, mistakes can occur. Two common types of errors in hypothesis testing are Type I and Type II errors. Understanding these errors is crucial…

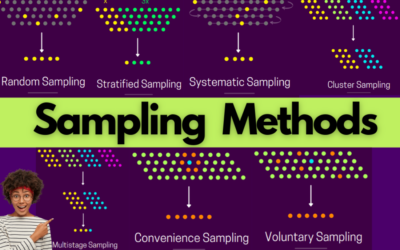

Understanding Data Sampling: Methods, Techniques, and Applications

What is Sampling? Data sampling is a critical statistical analysis technique used in various fields to efficiently analyze and interpret large data sets. It involves selecting a representative subset of data points from a larger population or dataset. The goal is to identify patterns, trends, and insights that reflect the characteristics of the entire…

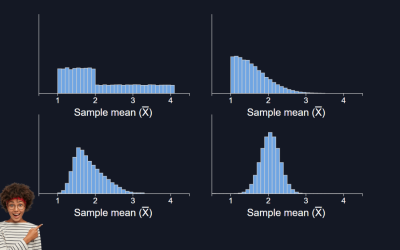

Understanding the Central Limit Theorem: Importance, Applications, and Calculations

Introduction: The Central Limit Theorem (CLT) is one of the cornerstones of statistical theory and a powerful concept in probability. This theorem explains why the normal distribution appears so often in real-world data, even when the original data doesn’t follow a normal distribution. Understanding CLT is crucial for statisticians, data analysts, and anyone working with…

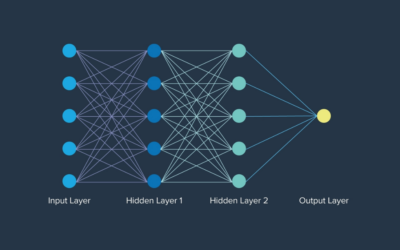

How LLMs Work: Step-by-Step Explanation

What is a large language model (LLM)? Large language models are machine learning models that use artificial neural networks and large data repositories to power Natural Language Processing (NLP) applications. An LLM is a type of AI model designed to understand, generate, and manipulate natural language. These models rely on deep…

DeepSeek: A Game Changer in the AI Landscape

The introduction of DeepSeek has triggered a paradigm shift in the AI landscape. Its ability to outperform existing technologies at a fraction of the cost has sent ripples throughout the tech industry.

How a Large Language Model (LLM) predicts the next word

A walkthrough of how a Large Language Model (LLM) predicts the next word, covering the mathematical operations involved at each step and the corresponding vector and tensor manipulations.

The Ultimate Guide to Fine-Tuning LLMs from Basics to Breakthroughs

Key Concepts Explained. Large Language Models (LLMs): LLMs are sophisticated AI systems designed to understand and generate human language. They are trained on vast amounts of text data, learning the structure and nuances of language, enabling them to perform tasks like translation, summarization, and conversation. Fine-Tuning vs. Pre-Training: Pre-Training: In this…

Prompt Engineering

Prompt Engineering Guide: Prompt engineering is a relatively new discipline for developing and optimizing prompts to use language models (LMs) efficiently across a wide variety of applications and research topics. Prompt engineering skills help to better understand the capabilities and limitations of large language models (LLMs). Researchers use prompt engineering to improve the capacity of…