Grow Your Business

$3/month

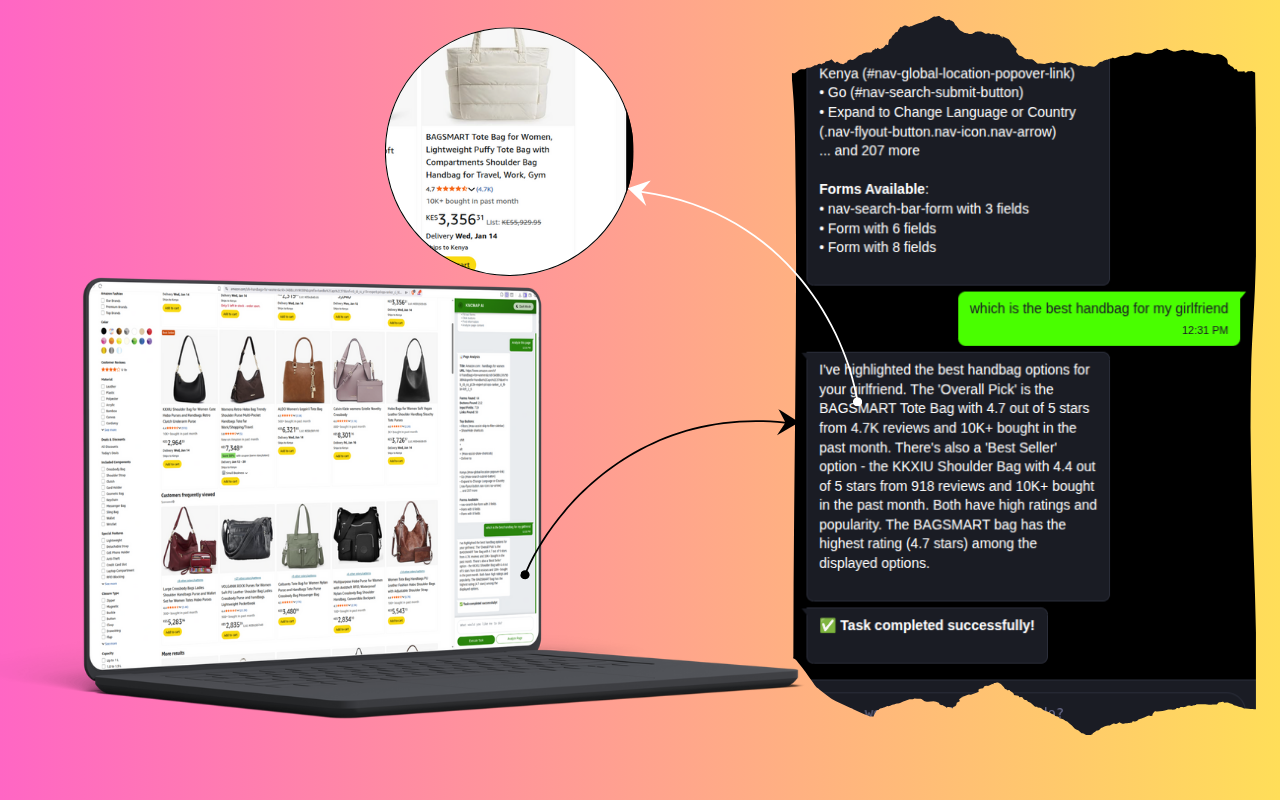

Access everything you need in one dashboard: AI Assistant, Chat, URL Shortener, Document Converter, Mailer, and more. Manage files, automate tasks, track product links and their performance, and scale your business faster with smart, easy-to-use tools.