Artificial Neural Networks (ANNs) are one of the most important advancements in the field of machine learning, inspired by the biological neural networks in the human brain. With their ability to solve complex pattern recognition problems, ANNs are the foundation of many modern technologies such as image classification, speech recognition, self-driving cars, and natural language processing. This article will provide an in-depth introduction to Artificial Neural Networks, covering their working principles, types, applications, and the advantages and limitations of using ANNs.

What is an Artificial Neural Network (ANN)?

An Artificial Neural Network (ANN) is an information-processing system loosely inspired by the way biological neural systems, such as the human brain, work. It consists of layers of interconnected neurons that process data to identify patterns, make predictions, or classify inputs. At the core of every ANN is its ability to learn by adjusting the weights of the connections between neurons. Because the network learns useful features directly from examples, ANNs are well suited to complex tasks that would otherwise require hand-written rules or manual feature extraction.

ANNs can be used to solve various types of problems such as classification, regression, and pattern recognition. They are particularly useful for complex problems like image and speech recognition, medical diagnosis, and financial predictions.

Structure of an Artificial Neural Network

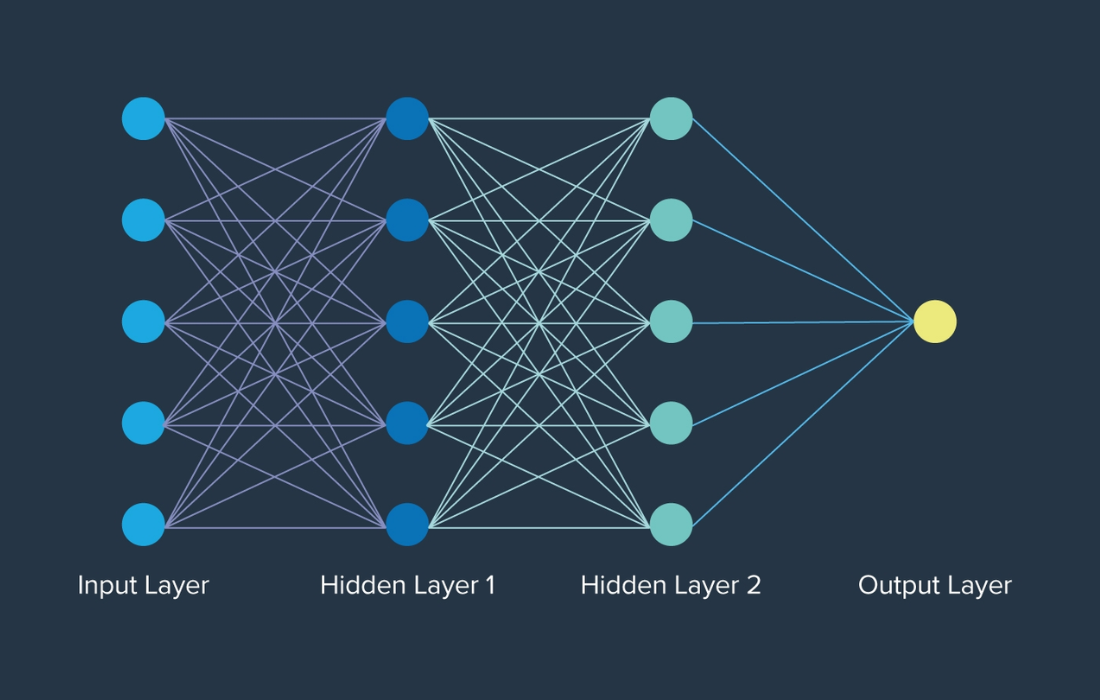

An Artificial Neural Network is composed of neurons, which are organized into layers. Each neuron processes information and communicates with others through connections, each of which has an associated weight. Here’s an overview of the key components:

- Input Layer: The input layer consists of neurons that receive data. This data could represent anything from pixel values in an image to time-series data or sensor readings.

- Hidden Layer(s): These are intermediate layers between the input and output layers. Each hidden neuron computes a weighted sum of its incoming signals, adds its bias, and passes the result through an activation function.

- Output Layer: The final layer produces the network’s predictions or classifications based on the processed information. In a classification task, for example, the output layer might have one neuron for each class, and the neuron with the highest output would indicate the predicted class.

- Weights and Biases: Each connection between neurons is associated with a weight, and each neuron has a bias. These weights and biases are adjusted during training to minimize errors and improve the network’s performance.

- Activation Function: The activation function introduces non-linearity into the network, allowing it to learn more complex patterns. Without it, any stack of layers would collapse into a single linear transformation of the input. Common activation functions include ReLU (Rectified Linear Unit), sigmoid, and tanh.
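To make the components above concrete, here is a minimal pure-Python sketch of a single neuron, a fully connected layer, and the three activation functions named above. The function names (`neuron`, `dense_layer`, `predicted_class`) are illustrative and do not come from any library. Real frameworks perform the same arithmetic with vectorized matrix operations.

```python
import math

# The three activation functions mentioned above.
def relu(z: float) -> float:
    return max(0.0, z)

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def tanh(z: float) -> float:
    return math.tanh(z)

def neuron(inputs, weights, bias, activation=relu):
    """One artificial neuron: weighted sum of the inputs plus a bias, then an activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)

def dense_layer(inputs, weight_rows, biases, activation=relu):
    """A fully connected layer: one neuron per (weight row, bias) pair."""
    return [neuron(inputs, w, b, activation) for w, b in zip(weight_rows, biases)]

def predicted_class(scores):
    """For classification, pick the index of the output neuron with the highest value."""
    return max(range(len(scores)), key=lambda i: scores[i])
```

For example, `neuron([1.0, 2.0], [0.5, -0.25], 0.1)` computes `0.5 - 0.5 + 0.1 = 0.1`, which ReLU passes through unchanged, and `predicted_class([0.1, 2.3, 0.7])` returns `1`.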

How Do Neural Networks Work?

The core idea behind ANNs is that they learn by adjusting the weights of connections between neurons in order to make accurate predictions. This process is called training, and it usually involves the following steps:

- Forward Propagation: When the model receives an input, the data passes through the network from the input layer, through the hidden layers, and finally to the output layer. In each layer, every neuron computes the weighted sum of its inputs plus its bias and passes the result through the activation function to produce its output.

- Loss Calculation: After the network produces an output, the error or difference between the predicted output and the true output (ground truth) is calculated using a loss function. Common loss functions include mean squared error (MSE) for regression problems and cross-entropy for classification tasks.

- Backpropagation: The error is propagated backward through the network, from the output layer toward the input layer, using the backpropagation algorithm. Backpropagation applies the chain rule to compute the gradient of the loss with respect to every weight and bias, which measures how much each parameter contributed to the error.

- Weight Update: An optimization technique such as gradient descent uses the gradients computed during backpropagation to adjust the weights and biases in the direction that reduces the loss, so the network's predictions improve over time. Training repeats these steps over many iterations (epochs) until the loss stops improving or the network reaches a satisfactory level of accuracy.
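The four steps above can be sketched for the smallest possible case: a single sigmoid neuron trained with plain gradient descent to reproduce the logical AND function. This is an illustrative sketch, not a production training loop. The learning rate, epoch count, and function names are assumptions chosen for the example. With only one neuron, backpropagation reduces to a single application of the chain rule. For a sigmoid output combined with cross-entropy loss, the gradient with respect to the pre-activation is simply the prediction minus the target.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def mse(y_true, y_pred):
    """Mean squared error, typical for regression."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def binary_cross_entropy(y_true, y_pred, eps=1e-12):
    """Cross-entropy for binary classification; eps guards against log(0)."""
    return -sum(t * math.log(p + eps) + (1 - t) * math.log(1 - p + eps)
                for t, p in zip(y_true, y_pred)) / len(y_true)

def train_neuron(X, y, lr=0.5, epochs=2000):
    """Illustrative training loop for one sigmoid neuron (hypothetical helper)."""
    w = [0.0] * len(X[0])
    b = 0.0
    n = len(X)
    history = []
    for _ in range(epochs):
        # 1. Forward propagation
        preds = [sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) for x in X]
        # 2. Loss calculation
        history.append(binary_cross_entropy(y, preds))
        # 3. Backpropagation: for sigmoid + cross-entropy, dL/dz = (p - t) / n
        dz = [(p - t) / n for p, t in zip(preds, y)]
        grad_w = [sum(d * x[j] for d, x in zip(dz, X)) for j in range(len(w))]
        grad_b = sum(dz)
        # 4. Weight update with plain gradient descent
        w = [wi - lr * gw for wi, gw in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b, history
```

Calling `train_neuron([[0, 0], [0, 1], [1, 0], [1, 1]], [0, 0, 0, 1])` starts at a loss of about 0.693 (that is, ln 2, because every initial prediction is 0.5) and ends with a much smaller loss.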

Types of Neural Networks

Neural networks come in many architectures, each suited to different kinds of problems. Two fundamental categories are feedforward neural networks and feedback (recurrent) neural networks:

1. Feedforward Neural Networks (FFNN)

A Feedforward Neural Network is the simplest type of neural network. It has no cycles or loops: data flows in one direction, from the input layer through the hidden layers to the output layer. In the common fully connected variant, each neuron in one layer is connected to every neuron in the next layer.

- Example: The Multilayer Perceptron (MLP) is a type of feedforward neural network that consists of multiple layers of neurons.
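The forward pass of a multilayer perceptron is the dense-layer computation repeated layer by layer. The sketch below uses hand-picked weights rather than learned ones for a 2-2-1 network with ReLU hidden units. This network computes XOR, a classic problem that a single-layer perceptron cannot solve because its two classes are not linearly separable. The names `dense`, `mlp_forward`, and `XOR_NET` are illustrative.

```python
def relu(z):
    return max(0.0, z)

def identity(z):
    return z

def dense(inputs, weights, biases, activation):
    """Fully connected layer: each row of `weights` feeds one neuron."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def mlp_forward(x, layers):
    """`layers` is a list of (weights, biases, activation) tuples applied in order."""
    for weights, biases, activation in layers:
        x = dense(x, weights, biases, activation)
    return x

# Hand-chosen (not trained) weights: h1 = relu(a + b), h2 = relu(a + b - 1), out = h1 - 2*h2
XOR_NET = [
    ([[1.0, 1.0], [1.0, 1.0]], [0.0, -1.0], relu),   # hidden layer, 2 neurons
    ([[1.0, -2.0]], [0.0], identity),                # output layer, 1 neuron
]

for a in (0.0, 1.0):
    for b in (0.0, 1.0):
        print(a, b, mlp_forward([a, b], XOR_NET))
```

The loop prints outputs of 0, 1, 1, 0 for the four input pairs. The hidden layer's non-linearity is what makes this possible.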

2. Feedback (Recurrent) Neural Networks (RNNs)

Unlike feedforward networks, Recurrent Neural Networks (RNNs) contain loops or cycles. At each step of a sequence, the hidden state computed at the previous step is fed back into the network together with the new input. This lets the network retain information about earlier inputs. RNNs are useful for problems that involve sequential data, such as speech recognition, time series forecasting, and machine translation.

- Example: Long Short-Term Memory (LSTM) networks are a special type of RNN designed to capture long-term dependencies in sequential data.
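The recurrence described above can be sketched with a scalar input and a scalar hidden state. This is a plain (Elman-style) RNN, not an LSTM. The weights `w_x`, `w_h`, and `b` are illustrative values, not trained ones, and `rnn_forward` is a hypothetical helper name.

```python
import math

def rnn_forward(sequence, w_x, w_h, b, h0=0.0):
    """Minimal RNN with a scalar hidden state.

    At each step: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b),
    so the previous hidden state feeds back into the computation.
    """
    h = h0
    states = []
    for x in sequence:
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states
```

With `w_x=1.0, w_h=0.5, b=0.0`, the input `[1.0, 0.0, 0.0]` leaves a small positive final state of about 0.18, so a trace of the first input survives. The input `[0.0, 0.0, 1.0]` ends at `tanh(1.0)`, about 0.76. The same values in a different order produce a different state, and this is what lets an RNN model sequences.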

Popular Neural Network Models

Over the years, several neural network models have been developed. Some of the most important ones include:

1. McCulloch-Pitts Model

The first artificial neuron model was proposed by Warren McCulloch and Walter Pitts in 1943. This model represents a binary neuron: it receives binary inputs, sums them, and outputs 1 (fires) if the sum reaches a threshold, and 0 otherwise.
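A simplified McCulloch-Pitts unit fits in a few lines. It assumes every input is excitatory with unit weight and leaves out the original model's inhibitory inputs. The function name is illustrative.

```python
def mcculloch_pitts(inputs, threshold):
    """Simplified McCulloch-Pitts neuron: binary inputs, binary output.

    Fires (returns 1) when the number of active inputs reaches `threshold`.
    Inhibitory inputs from the original 1943 model are omitted here.
    """
    return 1 if sum(inputs) >= threshold else 0
```

With two inputs, a threshold of 2 reproduces logical AND, and a threshold of 1 reproduces logical OR.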

2. Perceptron

The Perceptron, developed by Frank Rosenblatt in 1958, is one of the simplest neural networks. It is a single-layer network used for binary classification. The perceptron learns by adjusting its weights whenever it misclassifies a training example, and it served as the foundation for more complex models.
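The perceptron learning rule can be sketched as follows. The sketch assumes 0/1 labels, a step activation, and the update `w <- w + lr * (target - prediction) * x`. Because `target - prediction` is zero for correct predictions, the weights change only on misclassified examples. The function names and default values are illustrative.

```python
def perceptron_predict(x, w, b):
    # Step activation: output 1 if the weighted sum plus bias is positive, else 0.
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0

def train_perceptron(X, y, lr=1.0, max_epochs=100):
    """Perceptron learning rule on 0/1 labels (hypothetical helper)."""
    w = [0.0] * len(X[0])
    b = 0.0
    for _ in range(max_epochs):
        errors = 0
        for x, target in zip(X, y):
            error = target - perceptron_predict(x, w, b)
            if error != 0:
                # Nudge the decision boundary toward classifying x correctly.
                w = [wi + lr * error * xi for wi, xi in zip(w, x)]
                b += lr * error
                errors += 1
        if errors == 0:  # every training example is classified correctly
            break
    return w, b
```

On linearly separable data such as AND or OR, the perceptron convergence theorem guarantees that this loop stops making mistakes after a finite number of updates. On XOR it never converges, which is one reason multilayer networks were needed.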

3. Backpropagation Network (BPN)

The Backpropagation Network is one of the most important developments in neural networks. It is a multilayer feedforward network trained with the backpropagation algorithm, which computes the gradient of the error with respect to each weight and then adjusts the weights to reduce the error.
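To show what backpropagation actually computes, the sketch below applies the chain rule by hand to a tiny 1-2-1 network. The network has one input, two sigmoid hidden units, and one linear output, and it uses squared-error loss on a single example. The result is then checked against central finite differences. The network size, parameter values, and function names are assumptions made for the example. Frameworks such as PyTorch automate this step with automatic differentiation.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(params, x, t):
    """1-2-1 network: sigmoid hidden layer, linear output, squared-error loss."""
    h = [sigmoid(w * x + b) for w, b in zip(params["w1"], params["b1"])]
    y_hat = sum(v * hi for v, hi in zip(params["w2"], h)) + params["b2"]
    loss = 0.5 * (y_hat - t) ** 2
    return loss, h, y_hat

def backward(params, x, t):
    """Backpropagation: chain rule from the loss back to every parameter."""
    _, h, y_hat = forward(params, x, t)
    d_y = y_hat - t                                     # dL/dy_hat
    grads = {"w2": [d_y * hi for hi in h], "b2": d_y}   # output-layer gradients
    # dL/dz for each hidden unit, using sigmoid'(z) = h * (1 - h)
    d_z = [d_y * v * hi * (1.0 - hi) for v, hi in zip(params["w2"], h)]
    grads["w1"] = [dz * x for dz in d_z]                # hidden-layer gradients
    grads["b1"] = d_z
    return grads

def numerical_grad(params, x, t, name, i=None, eps=1e-6):
    """Central finite difference for one parameter, used only to check backward()."""
    def loss_with(delta):
        p = {k: (list(v) if isinstance(v, list) else v) for k, v in params.items()}
        if i is None:
            p[name] += delta
        else:
            p[name][i] += delta
        return forward(p, x, t)[0]
    return (loss_with(eps) - loss_with(-eps)) / (2 * eps)

params = {"w1": [0.5, -0.3], "b1": [0.1, 0.2], "w2": [0.7, -1.2], "b2": 0.05}
grads = backward(params, x=1.5, t=0.8)
```

Each gradient from `backward` should agree with `numerical_grad` to many decimal places. A gradient descent step then moves each parameter against its gradient, for example `params["b2"] -= lr * grads["b2"]`.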

4. Radial Basis Function Networks (RBFN)

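RBFNs have a single hidden layer whose neurons use radial basis functions (most commonly Gaussians) as activation functions, so each hidden neuron responds most strongly to inputs close to its center. These networks are primarily used for function approximation and classification tasks.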
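The following is a minimal RBFN sketch under several assumptions: Gaussian basis functions share one width, one hidden unit is centered on each training point, and the output weights come from solving a linear system so that the network passes exactly through the training targets. This exact-interpolation setup is only one simple way to build an RBFN. Larger problems typically use fewer centers (chosen, for example, by clustering) and fit the output weights by least squares. All function names are illustrative.

```python
import math

def gaussian_rbf(x, center, width):
    """Gaussian radial basis function: depends only on the distance to the center."""
    dist_sq = sum((a - c) ** 2 for a, c in zip(x, center))
    return math.exp(-dist_sq / (2.0 * width ** 2))

def solve_linear(A, b):
    """Gaussian elimination with partial pivoting; solves A w = b for square A."""
    n = len(A)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]   # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):                   # back substitution
        w[r] = (M[r][n] - sum(M[r][c] * w[c] for c in range(r + 1, n))) / M[r][r]
    return w

def fit_rbfn(X, y, width=1.0):
    """Use every training point as a center, then solve for the output weights."""
    centers = [list(x) for x in X]
    phi = [[gaussian_rbf(x, c, width) for c in centers] for x in X]
    return centers, solve_linear(phi, y)

def rbfn_predict(x, centers, weights, width=1.0):
    # Hidden layer of RBF units followed by a single linear output neuron.
    return sum(w * gaussian_rbf(x, c, width) for w, c in zip(weights, centers))
```

Fitting this network to the four XOR points reproduces their targets at those points, even though XOR is not linearly separable in the original inputs.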

Advantages and Disadvantages of ANN

Advantages:

- Non-linearity: ANNs can solve complex, non-linear problems that are difficult for traditional algorithms.

- Adaptability: Neural networks can learn from data and adjust weights over time to improve performance.

- Generalization: A well-trained ANN can make accurate predictions on data it has not seen during training, which makes it effective in real-world applications.

- Fault tolerance: ANNs can be relatively robust to noisy or partially corrupted inputs.

Disadvantages:

- Black-box nature: ANNs are often seen as a “black-box” because they lack interpretability. Understanding how an ANN makes decisions is often difficult.

- Data requirements: ANNs require large datasets to train effectively, and performance may degrade with insufficient data.

- Computational cost: Training ANNs, especially deep networks, requires significant computational resources, often involving GPUs.

Use Cases of Artificial Neural Networks

ANNs are used in various applications across industries, demonstrating their versatility and power. Some of the most common use cases include:

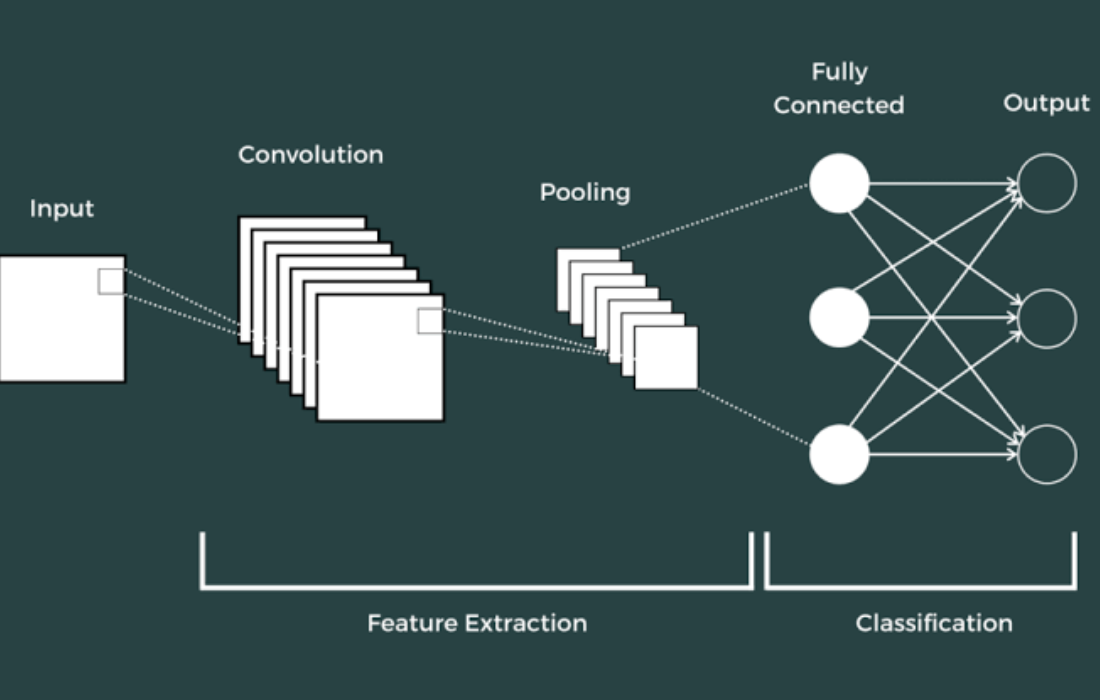

1. Computer Vision

ANNs are widely used for image recognition, object detection, face recognition, and image classification tasks. They are the backbone of applications such as self-driving cars and security surveillance systems.

2. Natural Language Processing (NLP)

ANNs, especially Recurrent Neural Networks (RNNs) and Transformers, are used in NLP tasks like text classification, machine translation, text summarization, and named entity recognition (NER).

3. Time Series Forecasting

ANNs can handle sequential data and are effective in predicting future values based on historical data. This includes stock price prediction, sales forecasting, and weather prediction.
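Before an ANN can forecast a time series, the series is commonly reframed as supervised examples: a window of past values serves as the input, and the next value serves as the target. The sketch below shows that windowing step. The function name and window size are illustrative.

```python
def make_windows(series, window):
    """Turn a series into (last `window` values -> next value) training pairs."""
    inputs, targets = [], []
    for start in range(len(series) - window):
        inputs.append(series[start:start + window])
        targets.append(series[start + window])
    return inputs, targets
```

For example, `make_windows([1, 2, 3, 4, 5], 2)` returns `([[1, 2], [2, 3], [3, 4]], [3, 4, 5])`. Each input row can then be fed to a feedforward network or, step by step, to an RNN.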

4. Anomaly Detection

ANNs are used to detect unusual patterns or outliers in data, making them useful for fraud detection, network security, and medical diagnostics.

Conclusion

Artificial Neural Networks (ANNs) are a powerful tool for solving complex data problems and are the foundation of many modern machine learning systems. While they require large datasets and significant computational resources for training, their ability to handle non-linear relationships and adapt to new data makes them invaluable for tasks such as image classification, speech recognition, and time-series forecasting. Despite their “black-box” nature, ANNs continue to evolve and impact industries ranging from healthcare and finance to entertainment and autonomous vehicles.