A Deep Dive into Modern Vision Architectures: ViTs, Mamba Layers, STORM, SigLIP, and Qwen

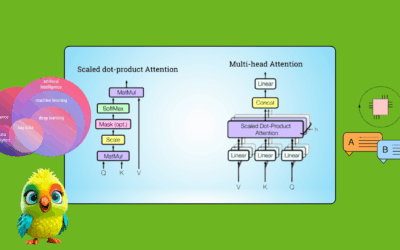

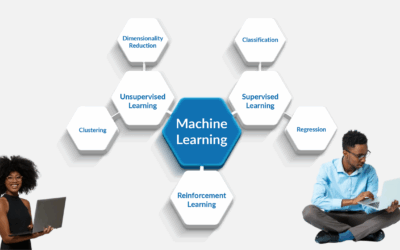

Introduction

As the AI landscape evolves, vision architectures are changing fast. We’ve moved beyond CNNs into the age of Vision Transformers (ViTs), hybrid vision-language systems like SigLIP, long-sequence models such as Mamba, and powerful multimodal models like Qwen-VL. Then there’s STORM, a new architecture combining selective attention, token reduction, and memory. This blog walks you…