ML & AI

Master Machine Learning & Artificial Intelligence: Unlock the Power of Data and Automation

Step into the world of Machine Learning (ML) and Artificial Intelligence (AI) with our in-depth courses designed for all skill levels. Learn the key concepts, algorithms, and techniques behind ML and AI, and how they’re transforming industries such as healthcare, finance, and technology. From supervised and unsupervised learning to deep learning and neural networks, our courses provide hands-on experience with the latest tools and frameworks. Whether you’re a beginner or an advanced professional, develop the expertise to build intelligent systems and drive innovation with ML & AI.

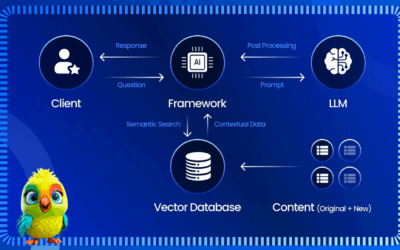

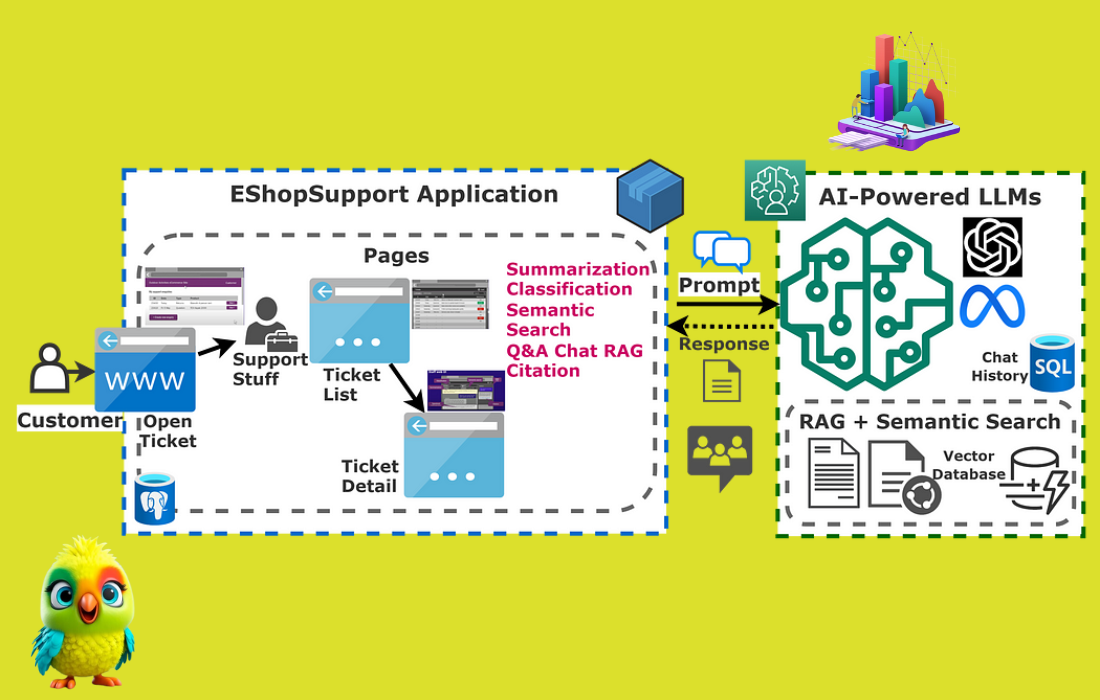

Retrieval-Augmented Generation (RAG) enhances LLM text generation using external knowledge

Retrieval-Augmented Generation (RAG) enhances LLM text generation by incorporating external knowledge sources, making responses more accurate, relevant, and up-to-date. RAG combines an information retrieval component with a text generation model, allowing the LLM to access and process information from external databases before generating text. This approach addresses challenges like domain knowledge gaps, factuality issues, and hallucinations often…
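The retrieve-then-generate flow described above can be sketched in a few lines. This is a minimal illustration that makes three simplifications. The knowledge base is a tiny in-memory list. A bag-of-words cosine similarity stands in for a real embedding model and vector database. The actual LLM call is left out. `retrieve` and `build_prompt` are hypothetical helper names, not part of any RAG library.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base; a real system would use an embedding model and a vector store.
DOCUMENTS = [
    "The Transformer architecture was introduced in 2017 by Vaswani et al.",
    "ChatGPT was launched by OpenAI in 2022.",
    "KV caching stores keys and values to speed up autoregressive decoding.",
]


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it on non-alphanumeric characters."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the query (the retrieval component)."""
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: list[str], k: int = 1) -> str:
    """Prepend the retrieved context to the question before it is sent to an LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {query}"


print(build_prompt("When was ChatGPT launched?", DOCUMENTS))
```

Grounding the prompt in retrieved text is what lets the model answer from up-to-date sources rather than only from its training data. Swapping `cosine` for dense embeddings changes the retrieval quality, not the overall shape of the pipeline.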

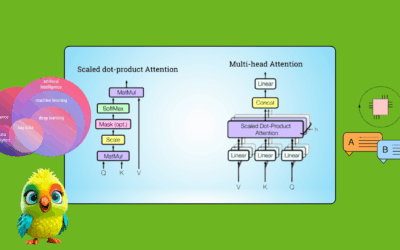

Understanding Transformers: The Mathematical Foundations of Large Language Models

In recent years, two major breakthroughs have revolutionized the field of Large Language Models (LLMs): 1. 2017: The publication of Google’s seminal paper “Attention Is All You Need” (https://arxiv.org/abs/1706.03762) by Vaswani et al., which introduced the Transformer architecture, a neural network that fundamentally changed Natural Language Processing (NLP). 2. 2022: The launch of ChatGPT by OpenAI, a transformer-based chatbot…

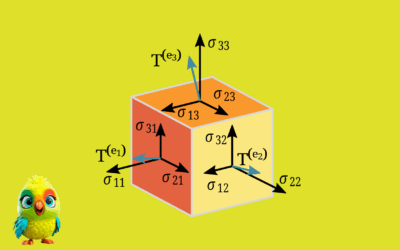

Understanding Tensors: A Comprehensive Guide with Mathematical Examples

Welcome to our mathematically rigorous exploration of tensors. This guide provides precise definitions, theoretical foundations, and worked examples ranging from elementary to advanced levels. All concepts are presented using proper mathematical notation with no reliance on programming languages. The Story of Tensors: From Curved Surfaces to Cosmic Equations. Long ago, in the 19th century, a…

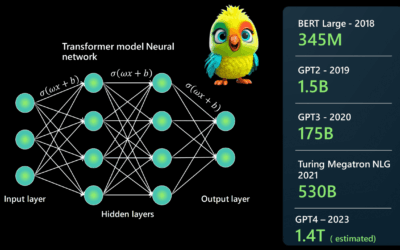

Everything You Need to Know to Build a Large Language Model (LLM) from Scratch: Architecture, Tokenization, Training & Deployment

What Are LLMs? LLMs are machine learning models trained on vast amounts of text data. They use transformer architectures, a neural network design introduced in the paper “Attention Is All You Need”. Transformers excel at capturing context and relationships within data, making them ideal for natural language tasks. 1. Architectural Types of Language Models (Expanded with…

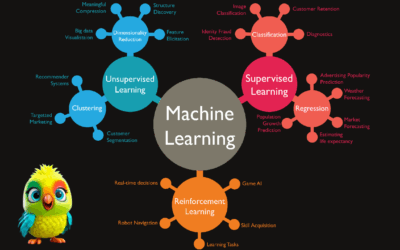

Every Model in Machine Learning (Supervised, Unsupervised, Regression) Explained

What is artificial intelligence? Artificial intelligence is a field of science concerned with building computers and machines that can reason, learn, and act in ways that would normally require human intelligence, or that involve data whose scale exceeds what humans can analyze. AI is a broad field that spans many disciplines, including computer…

How Do LLMs Work? From Tokenization and Embeddings to QKV Attention, Activation Functions, and Output

Course Introduction: How Large Language Models (LLMs) Work. What You Will Learn: The LLM Processing Pipeline. In this course, you will learn how Large Language Models (LLMs) process text step by step, transforming raw input into intelligent predictions. Here’s a visual overview of the journey your words take through an LLM. Module Roadmap: You will…
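To make the pipeline concrete, here is a toy forward pass in NumPy that touches each stage named in the title. It is a sketch under heavy simplifying assumptions:

- a whitespace tokenizer over a made-up five-word vocabulary;
- random, untrained weights;
- a single causal attention head;
- one feed-forward layer using the tanh approximation of GELU;
- an output projection that reuses the embedding table;
- no layer normalization or positional encoding.

None of these names or sizes come from the course itself.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy vocabulary and sizes (real models use subword tokenizers and far larger dimensions).
VOCAB = ["<unk>", "what", "is", "in", "the"]
D_MODEL, D_FF = 8, 16


def tokenize(text):
    """Tokenization: map whitespace-separated words to integer IDs (unknown words -> 0)."""
    index = {w: i for i, w in enumerate(VOCAB)}
    return [index.get(w, 0) for w in text.lower().split()]


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def gelu(x):
    """Activation function: tanh approximation of GELU."""
    return 0.5 * x * (1 + np.tanh(np.sqrt(2 / np.pi) * (x + 0.044715 * x**3)))


# Random, untrained parameters.
E = rng.normal(size=(len(VOCAB), D_MODEL))  # embedding table
W_q, W_k, W_v = (rng.normal(size=(D_MODEL, D_MODEL)) for _ in range(3))
W_1, W_2 = rng.normal(size=(D_MODEL, D_FF)), rng.normal(size=(D_FF, D_MODEL))


def forward(text):
    ids = tokenize(text)
    x = E[ids]                                   # embeddings, shape (T, D_MODEL)
    q, k, v = x @ W_q, x @ W_k, x @ W_v          # query/key/value projections
    scores = q @ k.T / np.sqrt(D_MODEL)          # scaled dot-product scores, shape (T, T)
    mask = np.triu(np.ones_like(scores, dtype=bool), k=1)
    scores = np.where(mask, -np.inf, scores)     # causal mask: no attending to future tokens
    x = x + softmax(scores) @ v                  # attention output plus residual connection
    x = x + gelu(x @ W_1) @ W_2                  # feed-forward with GELU plus residual
    logits = x[-1] @ E.T                         # output: score the last position against the vocabulary
    return softmax(logits)                       # next-token probability distribution


probs = forward("What is in")
print({w: round(float(p), 3) for w, p in zip(VOCAB, probs)})
```

Because the weights are random, the printed distribution is meaningless as a prediction. The point is the data flow: IDs become vectors, attention mixes information across earlier positions, the activation adds non-linearity, and the final softmax turns scores into probabilities over the vocabulary.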

Tiny Agents in Python: Build an MCP-Powered AI Assistant in <100 Lines

Introduction to MCP and Tiny Agents: The Model Context Protocol (MCP) is revolutionizing how we build AI applications by standardizing tool integration for LLMs. In this guide, I’ll show you how to create a fully functional Python agent that leverages MCP to dynamically discover and use tools, all in under 100 lines of code…
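The “dynamically discover and use tools” step boils down to two JSON-RPC 2.0 methods defined by MCP: `tools/list` and `tools/call`. The sketch below shows that round trip in plain Python. It is not the guide’s agent and does not use an MCP SDK. `fake_server`, `TinyClient`, and the `add` tool are hypothetical stand-ins. A real setup talks to a server over a transport such as stdio and performs an `initialize` handshake first. In a real agent, an LLM would also pick the tool from the discovered schemas, whereas this sketch hardcodes the choice.

```python
import json

# Hypothetical in-process stand-in for an MCP server. A real server runs over a transport
# (e.g. stdio) and expects an "initialize" handshake before any tool requests.
TOOLS = {
    "add": {
        "description": "Add two numbers",
        "inputSchema": {
            "type": "object",
            "properties": {"a": {"type": "number"}, "b": {"type": "number"}},
            "required": ["a", "b"],
        },
        "fn": lambda args: args["a"] + args["b"],
    }
}


def fake_server(raw_request: str) -> str:
    """Answer one JSON-RPC 2.0 request for the tools/list and tools/call methods."""
    req = json.loads(raw_request)
    if req["method"] == "tools/list":
        result = {"tools": [{"name": n, "description": t["description"], "inputSchema": t["inputSchema"]}
                            for n, t in TOOLS.items()]}
    elif req["method"] == "tools/call":
        tool = TOOLS[req["params"]["name"]]
        value = tool["fn"](req["params"]["arguments"])
        result = {"content": [{"type": "text", "text": str(value)}], "isError": False}
    else:
        # -32601 is the standard JSON-RPC "Method not found" error code.
        return json.dumps({"jsonrpc": "2.0", "id": req["id"],
                           "error": {"code": -32601, "message": "Method not found"}})
    return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})


class TinyClient:
    """Minimal client: discovers tools at runtime, then invokes one by name."""

    def __init__(self, transport):
        self.transport = transport
        self.next_id = 0

    def request(self, method, params=None):
        self.next_id += 1
        msg = {"jsonrpc": "2.0", "id": self.next_id, "method": method}
        if params is not None:
            msg["params"] = params
        return json.loads(self.transport(json.dumps(msg)))["result"]

    def list_tools(self):
        return self.request("tools/list")["tools"]

    def call_tool(self, name, arguments):
        content = self.request("tools/call", {"name": name, "arguments": arguments})["content"]
        return content[0]["text"]  # first text block of the tool result


client = TinyClient(fake_server)
print([t["name"] for t in client.list_tools()])  # tools discovered at runtime
print(client.call_tool("add", {"a": 2, "b": 3}))
```

Because the client learns tool names and input schemas from `tools/list` instead of hardcoding them, new tools added to the server become available to the agent without changing client code. That is the standardization MCP is aiming for.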

The Efficiency Revolution: How to Choose the Right-Sized AI Model for Your Needs

Executive Summary: As AI adoption accelerates, a critical shift is occurring: organizations are moving from “bigger is better” to “right-sized is smarter.” Our comprehensive analysis of 9 leading models across climate, economic, and healthcare domains reveals that smaller models (3B-32B parameters) can match or exceed larger models’ accuracy on specialized tasks while using 24x less energy. Newer model…
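One way to put “right-sized is smarter” into practice is to pick the least energy-hungry model that still clears an accuracy bar for your task. The sketch below assumes you have already benchmarked candidate models on your own workload. The model names, accuracies, and energy figures are hypothetical placeholders, not results from the analysis summarized above.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    params_b: float   # parameter count, in billions
    accuracy: float   # accuracy measured on your own task benchmark, 0..1
    energy_wh: float  # energy per 1,000 queries, in watt-hours


def pick_right_sized(candidates, min_accuracy):
    """Return the lowest-energy model that meets the accuracy target, or None if none does."""
    eligible = [c for c in candidates if c.accuracy >= min_accuracy]
    return min(eligible, key=lambda c: c.energy_wh, default=None)


# Hypothetical placeholder measurements, for illustration only.
models = [
    Candidate("small-3b", 3, 0.81, 10.0),
    Candidate("mid-32b", 32, 0.88, 60.0),
    Candidate("large-400b", 400, 0.89, 240.0),
]

choice = pick_right_sized(models, min_accuracy=0.85)
print(choice.name, f"uses {models[-1].energy_wh / choice.energy_wh:.0f}x less energy than the largest model")
```

With these placeholder numbers the 32B model wins. It is the cheapest option above the 0.85 bar, and the 3B model is cheaper still but falls short. Raising or lowering `min_accuracy` shifts the choice, which is the trade-off the analysis argues should be made per task rather than by defaulting to the largest model.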

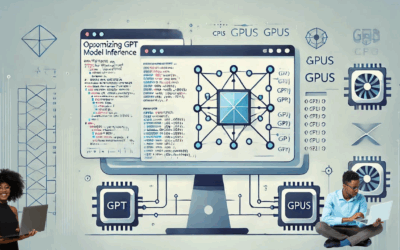

KV Caching Explained: A Deep Dive into Optimizing Transformer Inference

Introduction to KV Caching: When large language models (LLMs) generate text autoregressively, they perform redundant computations by reprocessing the same tokens at every step. Key-Value (KV) caching solves this by storing the attention keys and values already computed for earlier tokens, dramatically improving inference speed, often by 5x or more in practice. In this comprehensive guide, we’ll: explain the transformer attention bottleneck; implement KV caching from scratch…
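The core idea fits in a short NumPy sketch. It uses one attention head in one layer and treats the per-token hidden states as given, which isolates the caching logic. The sizes and weights are hypothetical, and the code is not taken from the guide. The uncached version re-projects keys and values for the whole prefix at every step. The cached version projects only the newest token and appends its key and value to the cache. Both produce the same attention outputs.

```python
import numpy as np

rng = np.random.default_rng(42)
d = 16  # hypothetical head dimension
W_q, W_k, W_v = (rng.normal(size=(d, d)) / np.sqrt(d) for _ in range(3))


def attend(q, K, V):
    """Scaled dot-product attention for one query vector over all stored positions."""
    scores = K @ q / np.sqrt(d)
    weights = np.exp(scores - scores.max())
    weights /= weights.sum()
    return weights @ V


def decode_without_cache(xs):
    """Recompute keys and values for the entire prefix at every step."""
    outputs = []
    for t in range(len(xs)):
        prefix = xs[: t + 1]
        K, V = prefix @ W_k, prefix @ W_v  # t + 1 projections, repeated each step
        outputs.append(attend(xs[t] @ W_q, K, V))
    return np.array(outputs)


def decode_with_cache(xs):
    """Project only the newest token and append its key/value to the cache."""
    K_cache, V_cache, outputs = np.empty((0, d)), np.empty((0, d)), []
    for x in xs:
        K_cache = np.vstack([K_cache, x @ W_k])  # one new key per step
        V_cache = np.vstack([V_cache, x @ W_v])  # one new value per step
        outputs.append(attend(x @ W_q, K_cache, V_cache))
    return np.array(outputs)


xs = rng.normal(size=(6, d))  # hidden states for 6 tokens
print(np.allclose(decode_without_cache(xs), decode_with_cache(xs)))
```

Growing the cache with `np.vstack` copies it on every step, which keeps the sketch short. Production implementations typically preallocate cache tensors up to a maximum length and write into them instead.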

Implementing KV Cache from Scratch in nanoVLM: A 38% Speedup in Autoregressive Generation

Introduction: Autoregressive language models generate text one token at a time. Without caching, each new prediction requires a full forward pass over the entire sequence through all transformer layers, leading to redundant computations. For example, predicting the next token in [What, is, in,] → [the] requires recomputing attention over [What, is, in,], even though these tokens haven’t changed. KV caching solves this inefficiency by…
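The redundancy in the [What, is, in,] example can be counted directly. Assume a prompt of p tokens and g generated tokens, and count only key/value projections in a single layer. Without a cache, step j re-projects all p + j − 1 tokens seen so far, for a total of g·p + g(g − 1)/2. With a cache, the prompt is projected once and each later step projects just one new token, for a total of p + g − 1. The helper below is a hypothetical illustration of that arithmetic. It ignores attention-score computation and is unrelated to the 38% speedup measured in nanoVLM.

```python
def kv_projections(prompt_len: int, new_tokens: int, cached: bool) -> int:
    """Count per-token key/value projections in one layer while generating new_tokens tokens."""
    if new_tokens < 1:
        raise ValueError("new_tokens must be at least 1")
    if cached:
        # Prefill projects the prompt once; each later step projects only the newest token.
        return prompt_len + (new_tokens - 1)
    # Without a cache, the forward pass for step j re-projects the whole sequence so far.
    return sum(prompt_len + j for j in range(new_tokens))


# Prompt [What, is, in] (3 tokens), generating 5 tokens starting with "the".
print(kv_projections(3, 5, cached=False), kv_projections(3, 5, cached=True))  # 25 7
```

For the very first generated token the two counts are equal (both 3). The savings appear from the second step onward and grow quadratically with output length, which is why caching matters most for long generations.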