ML & AI

Master Machine Learning & Artificial Intelligence: Unlock the Power of Data and Automation

Step into the world of Machine Learning (ML) and Artificial Intelligence (AI) with our in-depth courses designed for all skill levels. Learn the key concepts, algorithms, and techniques behind ML and AI, and how they’re transforming industries such as healthcare, finance, and technology. From supervised and unsupervised learning to deep learning and neural networks, our courses provide hands-on experience with the latest tools and frameworks. Whether you’re a beginner or an advanced professional, develop the expertise to build intelligent systems and drive innovation with ML & AI.

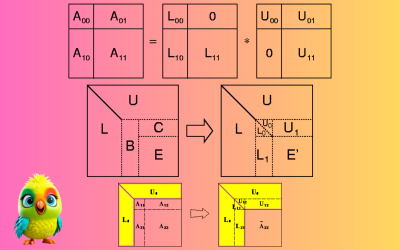

LU Decomposition Method Is a Quick, Easy, and Credible Way to Solve Systems of Linear Equations

Introduction: Solving systems of linear equations is a fundamental problem in mathematics, engineering, physics, and computer science. Among the various methods available, LU Decomposition stands out for its efficiency, simplicity, and numerical stability. In this blog, we’ll explore what LU Decomposition is, how it works, and why it’s a reliable method for solving linear equations. What…
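As a rough illustration of the idea, here is a minimal sketch that factors a small matrix as A = LU and then solves Ax = b by forward and back substitution. It assumes the Doolittle variant without pivoting, which is not taken from the linked post; the function names `lu_decompose` and `lu_solve` are hypothetical, and practical solvers usually add partial pivoting for stability.

```python
def lu_decompose(a):
    """Doolittle LU factorization WITHOUT pivoting (assumption): A = L @ U."""
    n = len(a)
    lower = [[0.0] * n for _ in range(n)]
    upper = [[0.0] * n for _ in range(n)]
    for i in range(n):
        lower[i][i] = 1.0  # Doolittle convention: unit diagonal on L
        # Fill row i of U.
        for j in range(i, n):
            upper[i][j] = a[i][j] - sum(lower[i][k] * upper[k][j] for k in range(i))
        # Fill column i of L (fails with ZeroDivisionError on a zero pivot).
        for r in range(i + 1, n):
            partial = sum(lower[r][k] * upper[k][i] for k in range(i))
            lower[r][i] = (a[r][i] - partial) / upper[i][i]
    return lower, upper


def lu_solve(lower, upper, b):
    """Solve L U x = b: forward substitution for y, then back substitution for x."""
    n = len(b)
    y = [0.0] * n
    for i in range(n):
        y[i] = b[i] - sum(lower[i][k] * y[k] for k in range(i))
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(upper[i][k] * x[k] for k in range(i + 1, n))
        x[i] = (y[i] - tail) / upper[i][i]
    return x


# Example system: 4x + 3y = 10 and 6x + 3y = 12.
L_factor, U_factor = lu_decompose([[4.0, 3.0], [6.0, 3.0]])
solution = lu_solve(L_factor, U_factor, [10.0, 12.0])
```

Once the factors are computed, they can be reused for any number of right-hand sides, which is where much of the method's efficiency comes from.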

Learn how to build an AI agent that answers questions based on a CSV dataset using Flowise.

Low-code tools have opened the doors for anyone — not just developers — to build smart, AI-powered systems. In this guide, I’ll walk you through how to build a fully functional AI data analyst agent using Flowise. The agent will be able to analyze a CSV dataset and answer questions about it using SQL —…

Learn How to Enhance Your Models in 5 Minutes with the Hugging Face Kernel Hub

The Kernel Hub is a game-changing resource that provides pre-optimized computation kernels for machine learning models. These kernels are meticulously tuned for specific hardware architectures and common ML operations, offering significant performance gains without requiring low-level coding expertise. Why Kernel Optimization Matters. Hardware-Specific Tuning: Kernels are optimized for different GPUs (NVIDIA, AMD) and CPUs. Operation-Specialized:…

The Ultimate Guide to Handling Missing Values in Data Preprocessing for Machine Learning

Missing values are a common issue in machine learning. They occur when a particular variable lacks data points, resulting in incomplete information and potentially harming the accuracy and dependability of your models. It is essential to address missing values efficiently to ensure strong and impartial results in your machine-learning projects. In this article, we will…

Fine-Tuning LLMs: How to Train a 12B-Parameter AI Art Model (FLUX.1-dev) on a Single Consumer GPU

Ever wanted to fine-tune a state-of-the-art AI art model like FLUX.1-dev but thought you needed expensive cloud GPUs? Think again. In this step-by-step guide, you’ll learn how to: ✔ Fine-tune FLUX.1-dev (12B parameters) on a single RTX 4090 (24GB VRAM) ✔ Reduce VRAM usage by 6x using QLoRA, gradient checkpointing, and 8-bit optimizers ✔ Achieve stunning style…

Matrices and Matrix Arithmetic Used for Machine Learning and Artificial Intelligence

Matrices are omnipresent in math and computer science, both theoretical and applied. They are often used as data structures, such as in graph theory. They are a computational workhorse in many AI fields, such as deep learning, computer vision and natural language processing. Why is that? Why would a rectangular array of numbers, with famously…

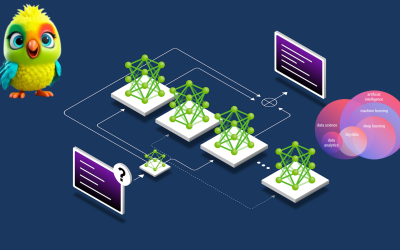

Mixture of Experts: The New AI Model Approach to Scaling AI with Specialized Intelligence

Mixture of Experts (MoE) is a machine learning technique where multiple specialized models (experts) work together, with a gating network selecting the best expert for each input. In the race to build ever-larger and more capable AI systems, a new architecture is gaining traction: Mixture of Experts (MoE). Unlike traditional models that activate every neuron…
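To make the gating idea concrete, here is a minimal sketch of a single MoE forward pass in plain Python. It assumes a linear gate followed by a softmax and top-k routing; the names `moe_forward`, `gate_weights`, and the two toy experts are hypothetical and chosen only for illustration, not taken from the linked post or any particular model.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, gate_weights, experts, top_k=1):
    """Route input x to the top_k experts chosen by a linear gating network.

    gate_weights holds one weight row per expert (assumption: linear gate).
    Returns the gate-weighted expert output and the indices of chosen experts.
    """
    # One gating score per expert: dot product of its weight row with x.
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    probs = softmax(scores)
    # Keep only the top_k most probable experts; the others are never evaluated.
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    output = sum(probs[i] / norm * experts[i](x) for i in chosen)
    return output, chosen


# Two toy "experts" that each map a vector to a scalar (hypothetical).
toy_experts = [lambda v: sum(v), lambda v: max(v)]
toy_gate = [[1.0, 0.0], [0.0, 1.0]]

result, routed_to = moe_forward([3.0, 0.5], toy_gate, toy_experts, top_k=1)
```

With `top_k=1` only one expert runs per input, which is the property that lets MoE models grow their parameter count without a matching growth in compute per token.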

Implementing a Custom Website Chatbot: From LLMs to Live Implementation for Users

The journey to today’s sophisticated chatbots began decades ago with simple rule-based systems. The field of natural language processing (NLP) has undergone several revolutions: 1. Early Systems (1960s-1990s): ELIZA (1966) and PARRY (1972) used pattern matching to simulate conversation, but had no real understanding. 2. Statistical NLP (1990s-2010s): Systems began using probabilistic models and machine…

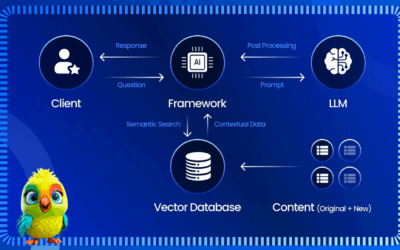

Retrieval-Augmented Generation (RAG) enhances LLM text generation using external knowledge

Retrieval-Augmented Generation (RAG) enhances LLM text generation by incorporating external knowledge sources, making responses more accurate, relevant, and up-to-date. RAG combines an information retrieval component with a text generation model, allowing the LLM to access and process information from external databases before generating text. This approach addresses challenges like domain knowledge gaps, factuality issues, and hallucinations often…
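The retrieve-then-generate flow can be sketched in a few lines. This is a simplified illustration under stated assumptions: retrieval uses bag-of-words cosine similarity instead of learned embeddings, the function names `retrieve` and `build_prompt` are hypothetical, and the final call to a language model is left out, so the sketch stops at the augmented prompt.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two token-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(
        documents,
        key=lambda d: cosine(q, Counter(tokenize(d))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query, documents, k=1):
    """Prepend the retrieved context to the question before generation."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


# A tiny stand-in for the external knowledge source (hypothetical data).
knowledge_base = [
    "LU decomposition factors a matrix into lower and upper triangular parts.",
    "Mixture of Experts routes each input to a few specialized experts.",
    "Missing values can be imputed with the column mean.",
]

prompt = build_prompt("How does Mixture of Experts route an input?", knowledge_base)
```

In a full pipeline the resulting `prompt` would be passed to the text generation model, so the answer is grounded in the retrieved passage instead of relying only on what the model memorized during training.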

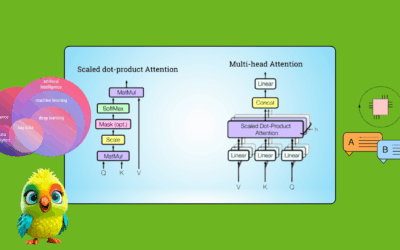

Understanding Transformers: The Mathematical Foundations of Large Language Models

In recent years, two major breakthroughs have revolutionized the field of Large Language Models (LLMs): 1. 2017: The publication of Google’s seminal paper, “Attention Is All You Need” (https://arxiv.org/abs/1706.03762) by Vaswani et al., which introduced the Transformer architecture, a neural network that fundamentally changed Natural Language Processing (NLP). 2. 2022: The launch of ChatGPT by OpenAI, a transformer-based chatbot…