Understanding Transformers: The Mathematical Foundations of Large Language Models

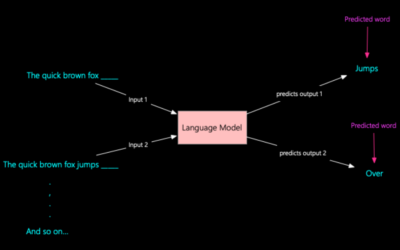

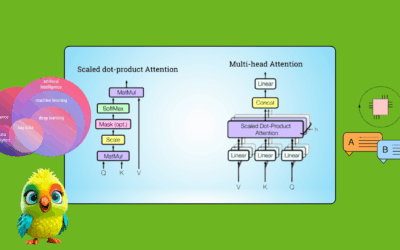

In recent years, two major breakthroughs have revolutionized the field of Large Language Models (LLMs):

1. 2017: The publication of Google's seminal paper, [Attention Is All You Need](https://arxiv.org/abs/1706.03762) by Vaswani et al., which introduced the Transformer architecture, a neural network design that fundamentally changed Natural Language Processing (NLP).
2. 2022: The launch of ChatGPT by OpenAI, a transformer-based chatbot…