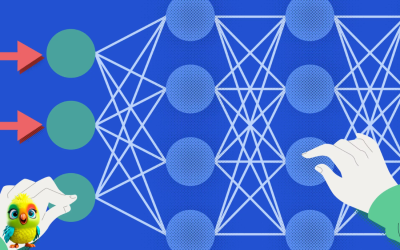

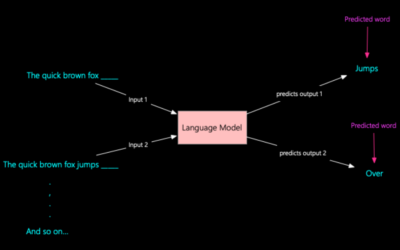

The Transformer is a neural network architecture that has fundamentally changed the approach to Artificial Intelligence. It was first introduced in the seminal 2017 paper “Attention is All You Need” and has since become the go-to architecture for deep learning models, powering text-generative models like OpenAI’s GPT, Meta’s Llama, and Google’s Gemini. Beyond text, the Transformer is also applied in audio generation, image…