Learn How to Enhance Your Models in 5 Minutes with the Hugging Face Kernel Hub

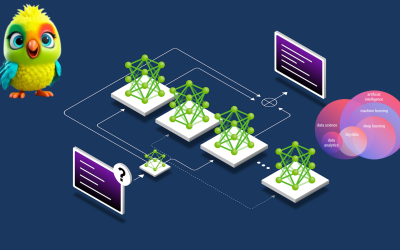

The Kernel Hub provides pre-optimized compute kernels for machine learning models. These kernels are tuned for specific hardware architectures and common ML operations, so you can get performance gains without writing low-level kernel code yourself.

Why Kernel Optimization Matters

- Hardware-Specific Tuning: Kernels are optimized for different GPUs (NVIDIA, AMD) and CPUs.
- Operation-Specialized:…
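To make "hardware-specific tuning" concrete, here is a minimal, purely illustrative Python sketch of the general pattern: a registry maps an (operation, backend) pair to an implementation, and callers fall back to a reference version when no tuned kernel exists for their hardware. The registry, `register`, `get_op`, and the backend names are hypothetical and are not the Kernel Hub's API or its internals. The "tuned" GELU here is just the tanh approximation written in plain Python, standing in for what would really be compiled device code.

```python
import math
from typing import Callable, Dict, List, Tuple

# Hypothetical illustration only: NOT the Kernel Hub or `kernels` library API.
KernelFn = Callable[[List[float]], List[float]]
_REGISTRY: Dict[Tuple[str, str], KernelFn] = {}


def register(op: str, backend: str) -> Callable[[KernelFn], KernelFn]:
    """Decorator that records an implementation of `op` for one backend."""
    def deco(fn: KernelFn) -> KernelFn:
        _REGISTRY[(op, backend)] = fn
        return fn
    return deco


@register("gelu", "cpu")
def gelu_reference(xs: List[float]) -> List[float]:
    # Exact GELU: x * Phi(x), where Phi is the standard normal CDF.
    return [x * 0.5 * (1.0 + math.erf(x / math.sqrt(2.0))) for x in xs]


@register("gelu", "cuda")
def gelu_tanh_approx(xs: List[float]) -> List[float]:
    # Stand-in for a hardware-tuned kernel: the tanh approximation of GELU.
    # A real tuned kernel would be compiled code running on the device.
    c = math.sqrt(2.0 / math.pi)
    return [0.5 * x * (1.0 + math.tanh(c * (x + 0.044715 * x ** 3))) for x in xs]


def get_op(op: str, backend: str) -> KernelFn:
    # Prefer the backend-specific kernel; otherwise use the CPU reference.
    return _REGISTRY.get((op, backend), _REGISTRY[(op, "cpu")])


if __name__ == "__main__":
    x = [-1.0, 0.0, 1.0]
    print(get_op("gelu", "cuda")(x))  # "tuned" path
    print(get_op("gelu", "rocm")(x))  # no entry registered, so falls back
```

The design choice this illustrates is that model code only ever asks for an operation by name; which implementation runs is decided by the hardware it is on, and adding support for a new backend is one more registration rather than a change to the model.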