As a candidate, I’ve interviewed at a dozen big companies and startups. I’ve got offers for machine learning roles at companies including Google, NVIDIA, Snap, Netflix, Primer AI, and Snorkel AI. I’ve also been rejected at many other companies.

As an interviewer, I’ve been involved in designing and executing the hiring process at NVIDIA and Snorkel AI, having taken steps from cold emailing candidates whose work I love, screening resumes, doing exploratory and technical interviews, debating whether or not to hire a candidate, to trying to convince candidates to choose us over competitive offers.

As a friend and teacher, I’ve helped many friends and students prepare for their machine learning interviews at big companies and startups. I give them mock interviews and take notes of the process they went through as well as the questions they were asked.

I’ve also consulted several startups on their machine learning hiring pipelines. Hiring for machine learning roles turned out to be pretty difficult when you don’t already have a strong in-house machine learning team and process to help you evaluate candidates. As the use of machine learning in the industry is still pretty new, a lot of companies are still making it up as they go along, which doesn’t make it easier for candidates.

This book is the result of the collective wisdom of many people who have sat on both sides of the table and who have spent a lot of time thinking about the hiring process. It was written with candidates in mind, but hiring managers who saw the early drafts told me that they found it helpful to learn how other companies are hiring, and to rethink their own process.

The blog consists of two parts. The first part provides an overview of the machine learning interview process, what types of machine learning roles are available, what skills each role requires, what kinds of questions are often asked, and how to prepare for them. This part also explains the interviewers’ mindset and what kind of signals they look for.

The second part consists of over 200 knowledge questions, each noted with its level of difficulty — interviews for more senior roles should expect harder questions — that cover important concepts and common misconceptions in machine learning.

After you’ve finished this blog, you might want to checkout the 30 open-ended questions to test your ability to put together what you know to solve practical challenges. These questions test your problem-solving skills as well as the extent of your experiences in implementing and deploying machine learning models. Some companies call them machine learning systems design questions. Almost all companies I’ve talked to ask at least a question of this type in their interview process, and they are the questions that candidates often find to be the hardest.

“Machine learning systems design” is an intricate topic that merits its own book. To learn more about it, check out my course CS 329S: Machine learning systems design at Stanford.

This blog is not a replacement to machine learning textbooks nor a shortcut to game the interviews. It’s a tool to consolidate your existing theoretical and practical knowledge in machine learning. The questions in this blog can also help identify your blind/weak spots. Each topic is accompanied by resources that should help you strengthen your understanding of that topic.

Target audience

If you’ve picked up this blog because you’re interested in working with one of the key emerging technologies of the 2020s but not sure where to start, you’re in the right place. Whether you want to become an ML engineer, a platform engineer, a research scientist, or you want to do ML but don’t yet know the differences among those titles, I hope that this blog will give you some useful pointers.

This blog focuses more on roles involving machine learning production than research, not because I believe production is more important. As fewer and fewer companies can afford to pursue pure research whereas more and more companies want to adopt machine learning, there will be, and already are, vastly more roles involving production than research.

This blog was written with two main groups of candidates in mind:

-

- Recent graduates looking for their first full-time jobs.

-

- Software engineers and data scientists who want to transition into machine learning.

I imagine the majority of readers of this blog come from a computer science background. The second part of the blog, where the questions are, is fairly technical. However, as machine learning finds its use in more industries — healthcare, farming, trucking, fashion, you name it — the field needs more people with diverse interests. If you’re interested in machine learning but hesitant to pursue it because you don’t have an engineering degree, I strongly encourage you to explore it. This blog, especially the first part, might address some of your needs. After all, I only took an interest in matrix manipulation after working as a writer for almost a decade.

About the questions

The questions in this blog were selected out of thousands of questions, most have been asked in actual interviews for machine learning roles. You will find several questions that are technically incorrect or ambiguous. This is on purpose. Sometimes, interviewers ask these questions to see whether candidates will correct them, point out the edge cases, or ask for clarification. For these questions, the accompanying hints should help clarify the ambiguity or technical incorrectness.

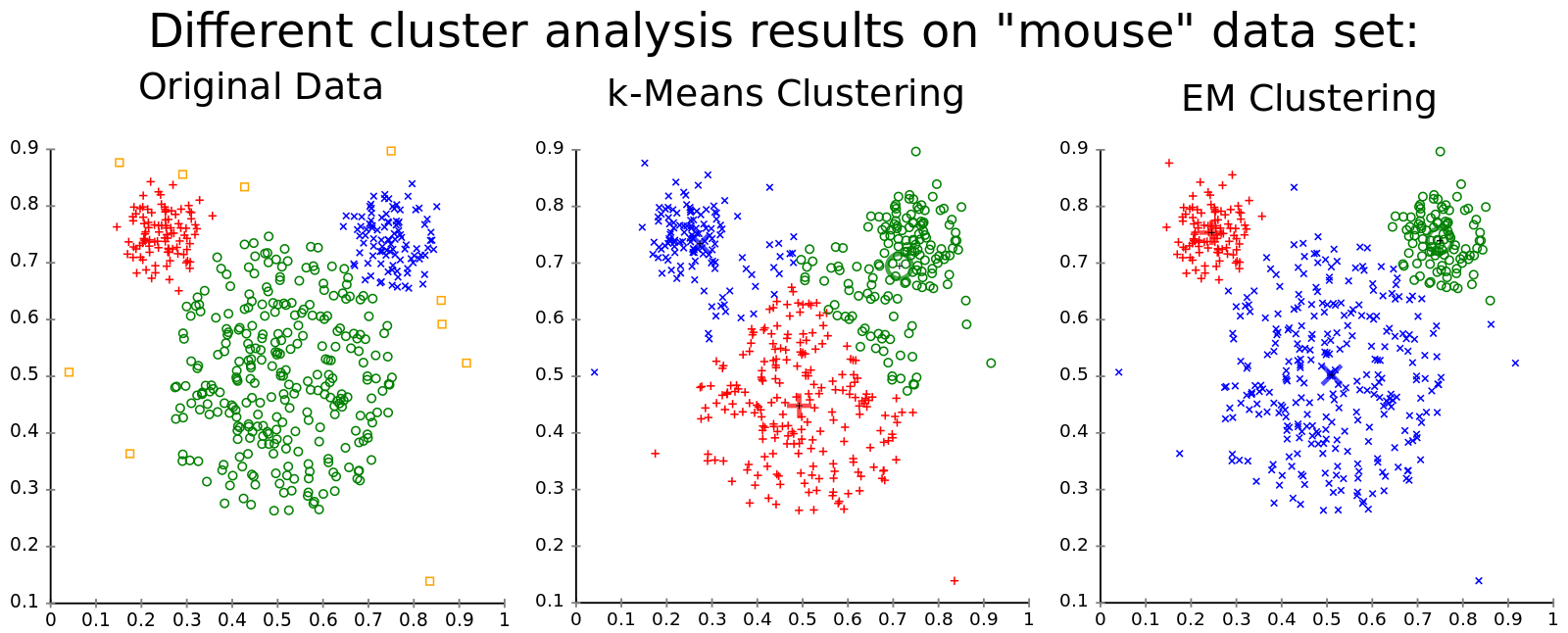

Machine learning is a tool, and to effectively use any tool, we should know how, why, or when to use it on top of knowing what it is. Because the “what” questions can be easily found online, and if something can be easily acquired, it isn’t worth testing for. This blog focuses on the “how”, “why”, and “when” questions. For example, instead of asking for the exact algorithm for K-means clustering, the question asks in what scenarios K-means doesn’t work. You don’t need to understand K-means to cite its definition, but you do to know when not to use it.

Still, this blog contains a small number of “what” questions. While they aren’t good interview questions, they are good for interview preparation.

About the answers

I started the blog with the naive optimism that I’d write the answers for every question in this blog. It turned out writing detailed answers to 300+ technical questions — while juggling a full-time job, a teaching gig, a raging pandemic with a couple of family members in the hospital — is a lot.

Given the slow progress I’ve been making, I’ve decided that publishing the draft then continuing writing/crowdsourcing the answers might be more productive. The first draft of the blog has the answers for about 10

Hiring is a process, and questions aren’t evaluated in isolation. Your answer to each question is evaluated as part of your performance during the entire process. A candidate who claims to work with computer vision and fails to answer a question about techniques typically used for computer vision tasks is going to be evaluated differently from a candidate who doesn’t work with computer vision at all. Interviewers often care more about your approach than the actual objective correctness of your answers.

Gaming the interview process

People often ask me: “Don’t you worry that candidates will just memorize the answers in this blog and game the system?”

First, I don’t encourage interviewers to ask the exact questions in this blog, but I hope this blog provides a framework for interviewers to distinguish good questions from bad ones.

Second, there’s nothing wrong with memorizing something as long as that memorization is useful. The problem begins when memorization is impractical — candidates memorize something to pass the interviews and never use that knowledge again, or don’t know how to use it in real situations.

For this book, I aimed to include only concepts that I and many of my helpful colleagues deemed practical. For every concept, I ask: “Where in the real world is it used?” If I can’t find a good answer after extensive research, the concept is discarded. For example, while I chose to include questions about inner product and outer product, I left out cross product. You can see the list of discarded questions in the list of “Bad questions” on the book’s GitHub repository. This is far from a foolproof process. As the field expands, concepts that aren’t applicable now might be all that AI researchers ever talk about in 2030.

Interviews are stressful, even more so when they are for your dream job. As someone who has been in your shoes, and might again be in your shoes in the future, I just want to tell you that it doesn’t have to be so bad. Each interview is a learning experience. An offer is great, but a rejection isn’t necessarily a bad thing and is never the end of the world.

There are many random variables that influence the outcome of an interview: the questions asked, other candidates the interviewer has seen before you, after you, the interviewer’s expectation, even the interviewer’s mood. It is, in no way, a reflection of your ability or your self-worth.

I was pretty much rejected for every job I applied to when I began this process. Now I just get rejected at a less frequent rate. Keep on learning and improving. You’ve got this!

Part I. Overview

Chapter 1. Machine learning jobs

Before embarking on a journey to find a job, it might be helpful to know what types of jobs there are. These jobs vary wildly from company to company based on their focus, customer profile, and stage, so in the second part of this chapter, we will go over different types of companies.

1.1 Different machine learning roles

Some of the roles we’ll look into in this chapter:

-

- Machine learning engineer

-

- Data scientist

-

- ML/AI platform engineer

-

- ML/AI infrastructure engineer

-

- Framework engineer

-

- Solution architect

-

- Developer advocate

-

- Solutions engineer

-

- Applications engineer

-

- Applied research scientist

-

- Research engineer

-

- Research scientist

1.1.1 Working in research vs. working in production

I use research vs. production instead of academia vs. industry because even though academia is mostly concerned with research, research isn’t mostly done in academia. In fact, ML research nowadays is spearheaded by big corporations. See 1.1.2 Research for more details.

The first question you might want to figure out is whether you want to work in research or in production. They have very different job descriptions, requirements, hiring processes, and compensations.

The goal of research is to find the answers to fundamental questions and expand the body of theoretical knowledge. A research project usually involves using scientific methods to validate whether a hypothesis or a theory is true, without worrying about the practicality of the results.

The goal of production is to create or enhance a product. A product can be a good (e.g. a car), a service (e.g. ride-sharing service), a process (e.g. detecting whether a transaction is fraudulent), or a business insight (e.g. “to maximize profit we should increase our price 10

A research project doesn’t need users, but a product does. For a product to be useful, it has many more requirements other than just performance, such as inference latency, interpretability (both to users and to developers), fairness (to all subgroups of users), adaptability to changing environment. The majority of a production team’s job might be to ensure these other requirements.

The given definitions above are, of course, handwavy at best. What’s research and what’s production in machine learning remain a heated topic of debate as of 20213. One reason for the ambiguity is that novel ideas with obvious usefulness tend to attract more researchers, and solving practical problems often requires coming up with novel ideas.

For more differences between machine learning in research and in production, see Stanford’s CS 329S, lecture 1: Understanding machine learning production.

As a candidate, if you’re unfamiliar with both and not sure whether you want to find roles in research or in production, the latter might be the smoother path. There are many more roles involving production than roles involving research.

3: One example is the argument whether GPT-3 is research. Many researchers were upset when Language Models are Few-Shot Learners (OpenAI, 2020) was awarded the best paper at NeurIPS because they didn’t consider it research.

1.1.2 Research

As the research community takes the “bigger, better” approach, new models often require a massive amount of data and tens of millions of dollars in computing. The estimated market cost to train DeepMind’s AlphaStar and OpenAI’s GPT-3 is in the tens of millions each45. Most companies and academic institutions can’t afford to pursue pure research.

Outside academic institutions, there are only a handful of machine learning research labs in the world. Most of these labs are funded by corporations with deep pockets such as Alphabet (Google Brain, DeepMind), Microsoft, Facebook, Tencent6. You can find these labs by browsing the affiliations of published papers at major academic conferences including NeurIPS, ICLR, ICML, CVPR, ACL. In 2019 and 2020, Alphabet accounts for over 10

Tip Not all these industry labs publish papers — companies like Apple and Tesla are notoriously secretive. Even if an industry lab publishes, it might only publish a portion of its research. Before joining an industry lab, you might want to consider its publishing policy. Joining a secretive lab might mean that you won’t be able to explain to other people what you’ve been working on or what you’re capable of doing.

4: State of AI Report 2019 by Nathan Benaich and Ian Hogarth.

5: OpenAI’s massive GPT-3 model is impressive, but size isn’t everything by VentureBeat.

6: In an earlier draft of this book, I included Uber AI and Element AI. However, Uber AI research lab was laid off in April 2020, and Element AI was sold for cheap in November 2020.

1.1.2.1 Research vs. applied research

At some companies, you might encounter roles involving applied research. Applied research is somewhere between research and production, but much closer to research than production. Applied research involves finding solutions to practical problems, but doesn’t involve implementing those solutions in actual production environments.

Applied researchers are researchers. They come up with novel hypotheses and theses as well as validate them. However, since their hypotheses and theses deal with practical problems, they need to understand these problems as well. In industry lingo, they need to have subject matter expertise.

In machine learning, an example of a research project would be to develop an unsupervised transfer learning method for computer vision, experiment on a standard academic dataset. An example of an applied research project would be to develop techniques to make that new method work on a real-world problem in a specific industry, e.g. healthcare. People working on this applied research project will, therefore, need to have expertise in both machine learning and healthcare.

1.1.2.2 Research scientist vs. research engineer

There’s much confusion about the role of a research engineer. This is a rare role, often seen at major research labs in the industry. Loosely speaking, if the role of a research scientist is to come up with original ideas, the role of a research engineer is to use their engineering skills to set up and run experiments for these ideas. The research scientist role typically requires a Ph.D. and/or first author papers at top-tier conferences. The research engineer role doesn’t, though publishing papers always helps.

For some teams, there’s no difference between a research scientist and a research engineer. Research scientists should, first and foremost, be engineers. Both research scientists and engineers come up with ideas and implement those ideas. A researcher might also act as an advisor guiding research engineers in their own research. It’s not uncommon to see research scientists and research engineers be equal contributors to papers7. The different job titles are mainly a product of bureaucracy — research scientists are supposed to have bigger academic clout and are often better paid than research engineers.

Startups, to attract talents, might be more generous with the job titles. A candidate told me he chose a startup over a FAAAM company because the startup gave him the title of a research scientist, while that big company gave him the title of a research engineer.

Akihiro Matsukawa gave an interesting perspective on the difference between the research scientist and the research engineer with his post: Research Engineering FAQs.

7: Notable examples include “Attention Is All You Need” from Google and “Language Models are Unsupervised Multitask Learners” from OpenAI.

1.1.3 Production

As machine learning finds increasing use in virtually every industry, there’s a growing need for people to bring machine learning models into production. In this section, we will first cover the production cycle for machine learning, the skills needed for each step, and the distinctions of several roles that often confuse the candidates I’ve talked to.

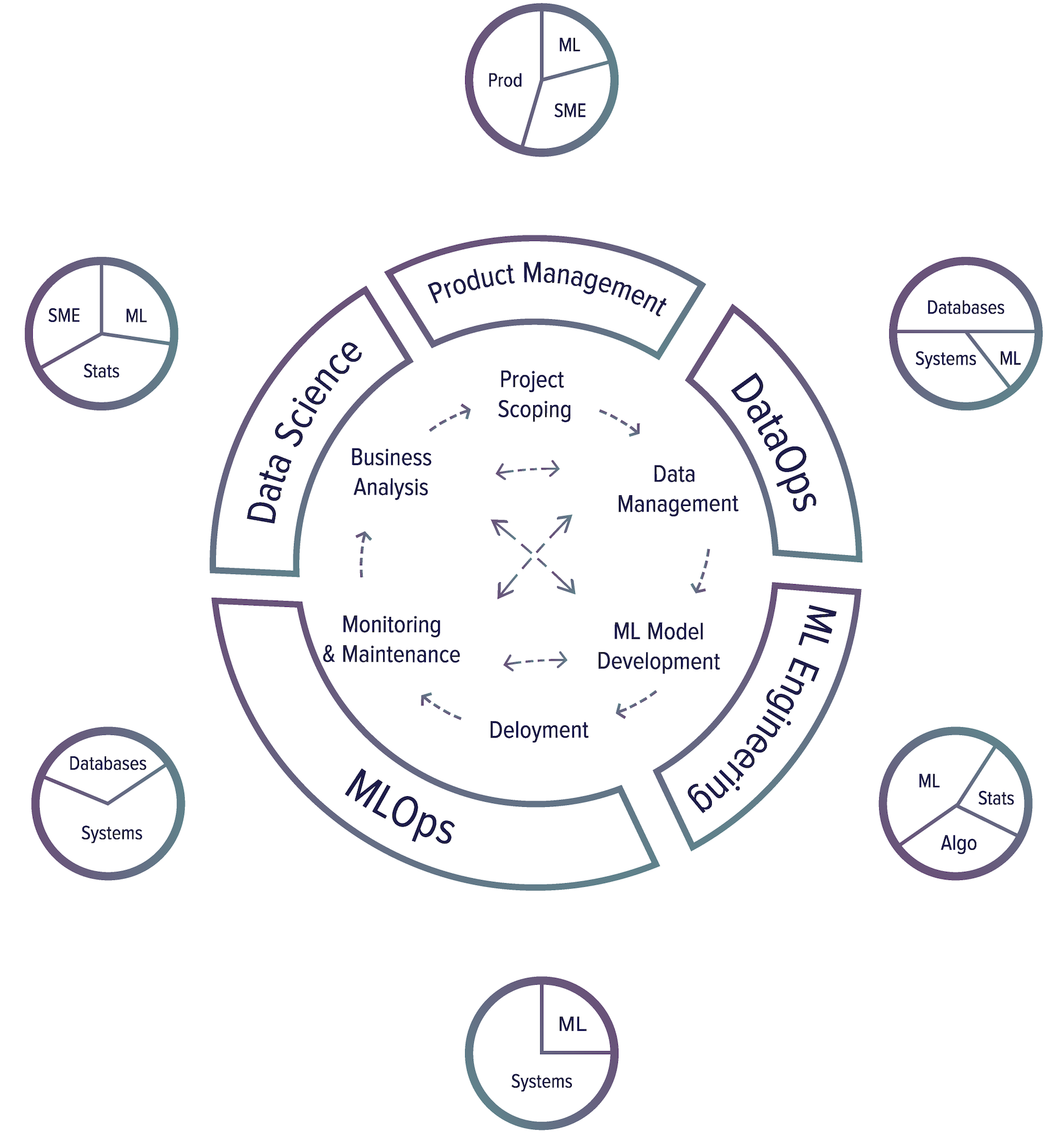

1.1.3.1 Production cycle

To understand different roles involving machine learning in production, let’s first explore different steps in a production cycle. There are six major steps in a production cycle.

⚠ On the main skills listed at each step ⚠ The main skills listed at each step below will upset many people, as any attempt to simplify a complex, nuanced topic into a few sentences would. This portion should only be used as a reference to get a sense of the skill sets needed for different ML-related jobs.

-

- Project scopingA project starts with scoping the project, laying out goals & objectives, constraints, and evaluation criteria. Stakeholders should be identified and involved. Resources should be estimated and allocated.Main skills needed: product management, subject matter expertise to understand problems, some ML knowledge to know what ML can and can’t solve.

-

- Data managementData used and generated by ML systems can be large and diverse, which requires scalable infrastructure to process and access it fast and reliably. Data management covers data sources, data formats, data processing, data control, data storage, etc.Main skills needed: databases/query engines to know how to store/retrieve/process data, systems engineering to implement distributed systems to process large amounts of data, minimal ML knowledge to optimize the organization data for ML access patterns would be helpful, but not required.

-

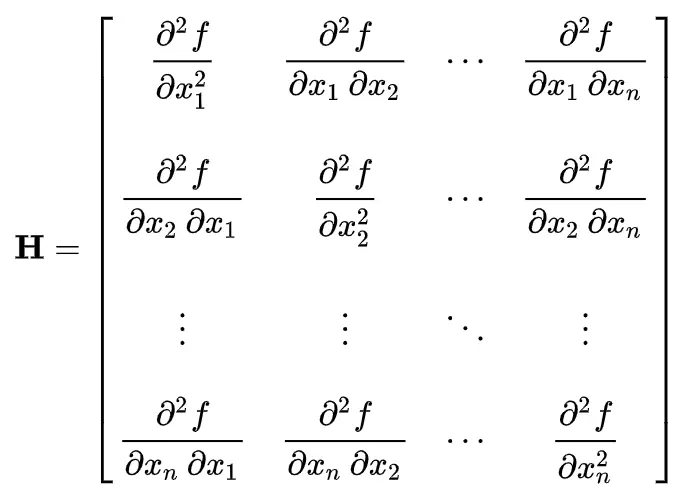

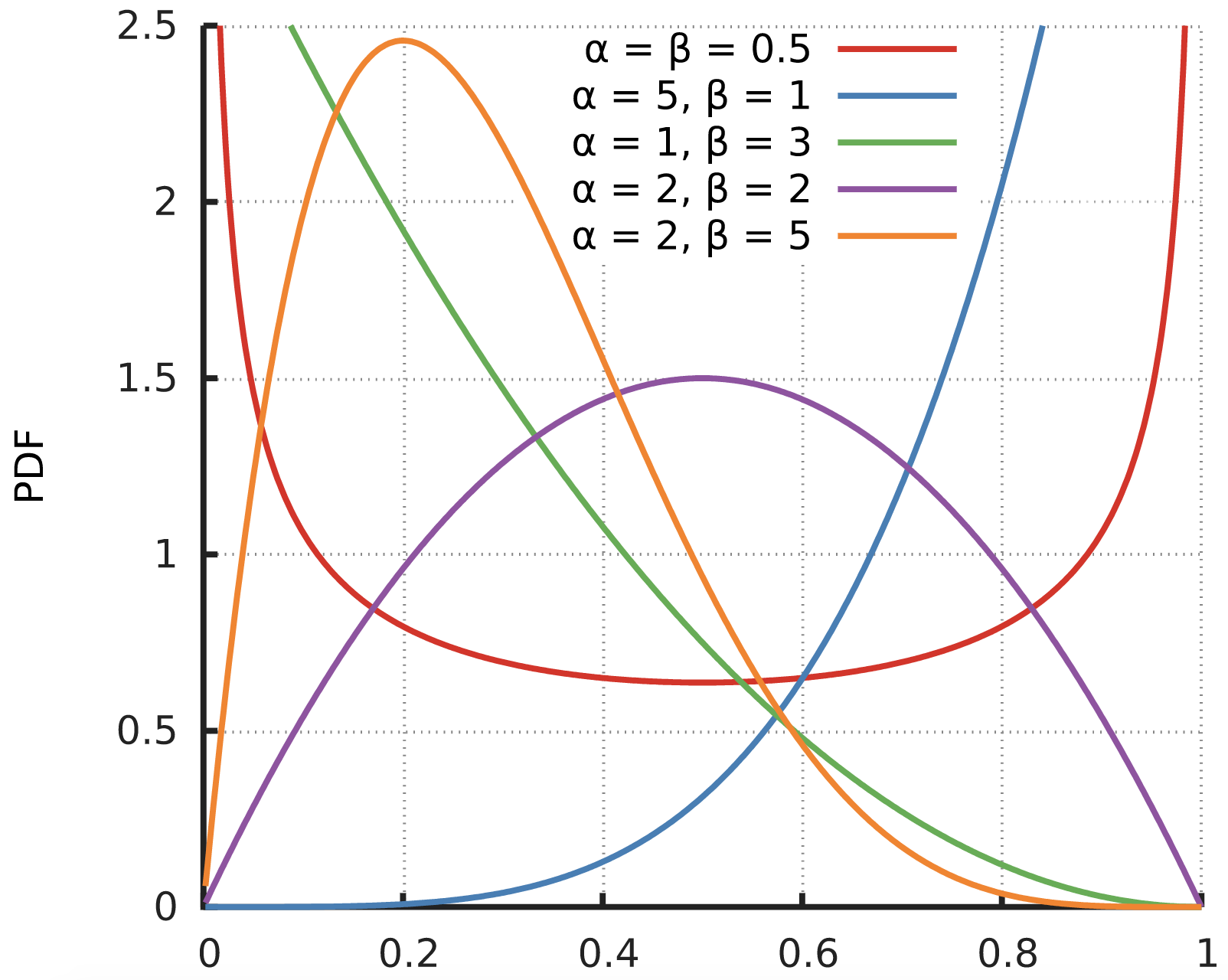

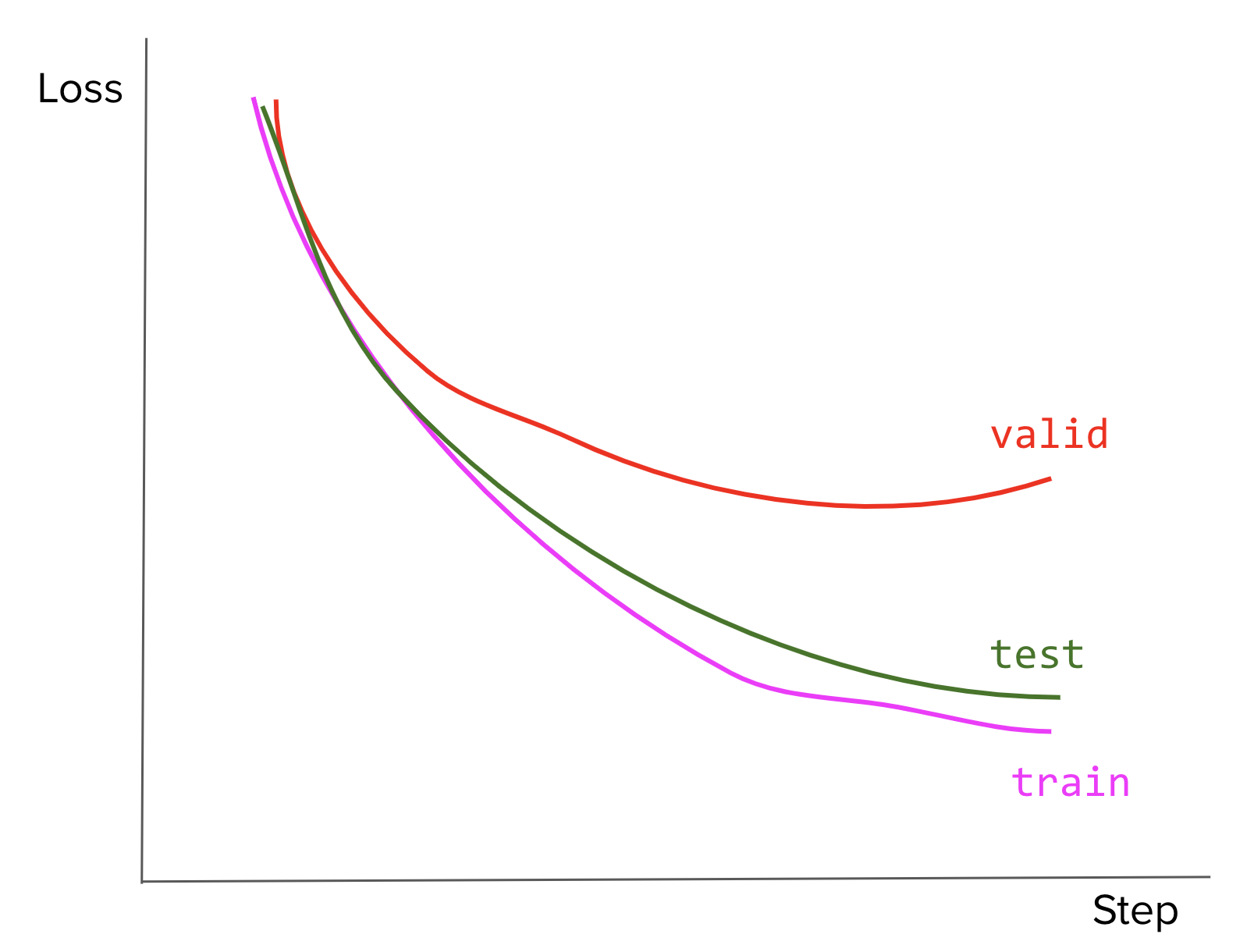

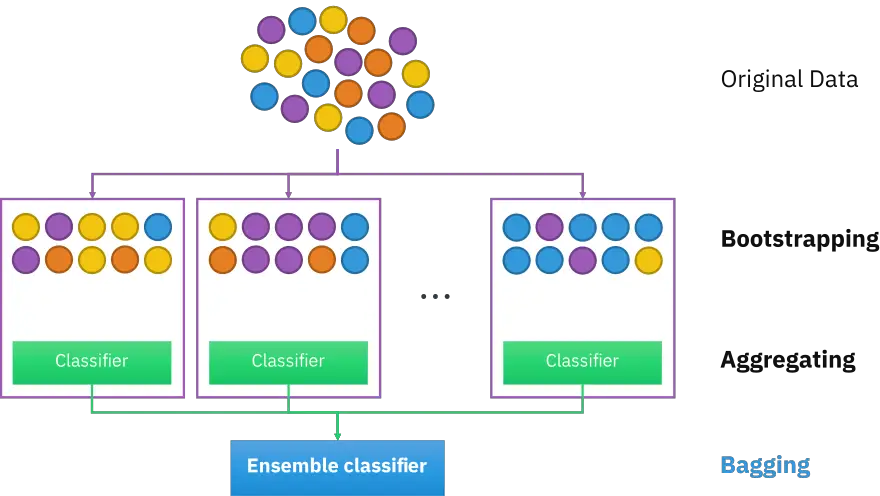

- ML model developmentFrom raw data, you need to create training datasets and possibly label them, then generate features, train models, optimize models, and evaluate them. This is the stage that requires the most ML knowledge and is most often covered in ML courses.Main skills needed: This is the part of the process that requires the most amount of ML knowledge, statistics and probability to understand the data and evaluate models. Since feature engineering and model development require writing code, this part needs coding skills, especially in algorithms and data structures.

-

- DeploymentAfter a model is developed, it needs to be made accessible to users.Main skills needed: Bringing an ML model to users is largely an infrastructure problem: how to set up your infrastructures or help your customers set up their infrastructures to run your ML application. These applications are often data-, memory-, and compute-intensive. It might also require ML to compress ML models and optimize inference latency unless you can push these to the previous step of the process.

-

- Monitoring and maintenanceOnce in production, models need to be monitored for performance decay and maintained/updated to be adaptive to changing environments and changing requirements.Main skills needed: Monitoring and maintenance is also an infrastructure problem that requires computer systems knowledge. Monitoring often requires generating and tracking a large amount of system-generated data (e.g. logs), and managing this data requires an understanding of the data pipeline.

-

- Business analysisModel performance needs to be evaluated against business goals and analyzed to generate business insights. These insights can then be used to eliminate unproductive projects or scope out new projects.Main skills needed: This part of the process requires ML knowledge to interpret ML model’s outputs and behavior, in-depth statistics and probability knowledge to extract insights from data, as well as subject matter expertise to map these insights to the practical problems the ML models are supposed to solve.

Skill annotation

-

- Systems: system engineering e.g. to building distributed systems, container deployment.

-

- Databases: data management, storage, processing, databases, query engines. This is closely related to Systems since you might need to build distributed systems to process large amounts of data.

-

- ML: linear algebras, ML algorithms, etc.

-

- Algo: algorithmic coding

-

- Stats: probability, statistics

-

- SME: subjective matter expertise

-

- Prod: product management

The most successful approach to ML production I’ve seen in the industry is iterative and incremental development. It means that you can’t really be done with a step, move to the next, and never come back to it again. There’s a lot of back and forth among various steps.

Here is one common workflow that you might encounter when building an ML model to predict whether an ad should be shown when users enter a search query8.

8: Praying and crying not featured but present through the entire process.

-

- Choose a metric to optimize. For example, you might want to optimize for impressions — the number of times an ad is shown.

-

- Collect data and obtain labels.

-

- Engineer features.

-

- Train models.

-

- During error analysis, you realize that errors are caused by wrong labels, so you relabel data.

-

- Train model again.

-

- During error analysis, you realize that your model always predicts that an ad shouldn’t be shown, and the reason is that 99.99

-

- Train model again.

-

- The model performs well on your existing test data, which is by now two months ago. But it performs poorly on the test data from yesterday. Your model has degraded, so you need to collect more recent data.

-

- Train model again.

-

- Deploy model.

-

- The model seems to be performing well but then the business people come knocking on your door asking why the revenue is decreasing. It turns out the ads are being shown but few people click on them. So you want to change your model to optimize for clickthrough rate instead.

-

- Start over.

There are many people who will work on an ML project in production — ML engineers, data scientists, DevOps engineers, subject matter experts (SMEs). They might come from very different backgrounds, with very different languages and tools, and they should all be able to work on the system productively. Cross-functional communication and collaboration are crucial.

Tip As a candidate, understanding this production cycle is important. First, it gives you an idea of what work needs to be done to bring a model to the real world and the possible roles available. Second, it helps you avoid ML projects that are bound to fail when the organizations behind them don’t set them up in a way that allows iterative development and cross-functional communication.

1.1.3.2 Machine learning engineer vs. software engineer

ML engineering is considered a subfield of software engineering. In most organizations, the hiring process for MLEs is spun out of their existing SWE hiring process. Some organizations might swap out a few SWE questions for ML-specific questions. Some just add an interview specially focused on ML on top of their existing interview process for SWE, making their MLE process a bit longer than their SWE process.

Overall, MLE candidates are expected to know how to code and be familiar with software engineering tools. Many traditional SWE tools can be used to develop and deploy ML applications.

In the early days of ML adoption, when companies had little understanding of what ML production entailed, many used to expect MLE candidates to be both stellar software engineers and stellar ML researchers. However, finding a candidate fitting that profile turned out to be difficult, and many companies had relaxed their ML criteria. In fact, several hiring managers have told me that they’d rather hire people who are great engineers but don’t know much ML because it’s easier for great engineers to pick up ML than for ML experts to pick up good engineering practices.

Tip If you’re a candidate trying to decide between software engineering and ML, choose engineering.

1.1.3.3 Machine learning engineer vs. data scientist

ML engineers might spend most of their time wrangling and understanding data. This leads to the question: how is a data scientist different from an ML engineer?

There are three reasons for much overlap between the role of a data scientist and the role of an ML engineer.

First, according to Wikipedia, “data science is a multidisciplinary field that uses scientific methods, processes, algorithms and systems to extract knowledge and insights from structured and unstructured data.” Since machine learning models learn from data, machine learning is part of data science.

Second, traditionally, companies have data science teams to generate business insights from data. When interests in ML were revived in the early 2010s, companies started looking into using ML. Before making a significant investment in starting full-fledged ML teams, companies might want to start with small ML projects to see if ML can add value. A natural candidate for this exploration is the team that is already working with the data: the data science team.

Third, many tasks of the data science teams, including demand forecasting, can be done using ML models. This is also how most data scientists transition into ML roles.

However, there are many differences between ML engineering and data science. The goal of data science is to generate business insights, whereas the goal of ML engineering is to turn data into products. This means that data scientists tend to be better statisticians, and ML engineers tend to be better engineers. ML engineers definitely need to know ML algorithms, whereas many data scientists can do their jobs without ever touching ML.

As a company’s adoption of ML matures, it might want to have a specialized ML engineering team. However, with an increasing number of prebuilt and pretrained models that can work off-the-shelf, it’s possible that developing ML models will require less ML knowledge, and ML engineering and data science will be even more unified.

1.1.3.4 Other technical roles in ML production

There are many other technical roles in the ML ecosystem. Many of them don’t require ML knowledge at all, e.g. you can build tools and infrastructures for ML without having to know what a neural network is (though knowing might help with your job). Examples include framework engineers at NVIDIA who work on CUDA optimization, people who work on TensorFlow at Google, or AWS platform engineers at Amazon.

ML infrastructure engineer, ML platform engineer

Because ML is resource-intensive, it relies on infrastructures that scale. Companies with mature ML pipelines often have infrastructure teams to help them build out the infrastructure for ML. Valuable skills for ML infrastructure/platform engineers include familiarity with parallelism, distributed computing, and low-level optimization.

These skills are hard to learn and take time to master, so companies prefer hiring engineers who are already skillful in this and train them in ML. If you are a rare breed that knows both systems and ML, you’ll be in demand.

ML accelerator/hardware engineer

Hardware is a major bottleneck for ML. Many ML algorithms are constrained by processors not being able to do computation fast enough, not having enough memory to store data/models and load them into memory, not being cheap enough to run experiments at scale, not having enough power to run applications on device.

There has been an explosion of companies that focus on building hardware both for training and serving ML models, both for cloud computing and edge computing. These hardware companies need people with ML expertise to guide their processor development process: to decide what ML models to focus on, then implement, optimize, and benchmark these models on their hardware. More and more hardware companies are also looking into using ML algorithms to improve their chip design process1112.

ML solutions architect

This role is often seen at companies that provide services and/or products to other companies that use ML. Because each company has its own use cases and unique requirements, this role involves working with existing or potential customers to figure out whether your service and/or product can help with their use case and if yes, how.

Developer advocate, developer programs engineer

You might have seen developer relationship (devrel) engineer roles such as developer advocate and developer programs engineer for ML. These roles bridge communication between people who build ML products and developers who use these products. The exact responsibilities vary from company to company, role to role, but you can expect them to be some combination of being the first users of ML products, writing tutorials, giving talks, collecting and addressing feedback from the community. Products like TensorFlow and AWS owe part of their popularity to the tireless work of their excellent devrel engineers.

Previously, these roles were only seen at major companies. However, as many machine learning startups now follow the open-core business model — open-sourcing the core or a feature-limited version of their products while offering commercial versions as proprietary software — these startups need to build and maintain good relationships with the developer community, devrel roles are crucial to their success. These roles are usually very hard to fill, as it’s rare to find great engineers who also have great communication skills. If you’re an engineer interested in more interaction with the community, you might want to consider this option.

11: Chip Design with Deep Reinforcement Learning (Google AI blog, 2020)

12: Accelerating Chip Design with Machine Learning (NVIDIA research blog, 2020)

1.1.3.5 Understanding roles and titles

While role definitions are useful for career orientation, a role definition poorly reflects what you do on the job. Two people with the same title on the same team might do very different things. Two people doing similar things at different companies might have different titles. In 2018, Lyft decided to rename their role of “Data Analyst” to “Data Scientist”, and “Data Scientist” to “Research Scientist”13, a move likely motivated by the job market’s demands, which shows how interchangeable roles are.

Tip When unsure what the job entails, ask. Here are some questions that might help you understand the scope of a role you’re applying for.

- How much of the job involves developing ML models?

- How much of the job involves data exploration and data wrangling? What are the characteristics of the data you’d have to work with, e.g. size, format?

- How much of the job involves DevOps?

- Does the job involve working with clients/customers? If yes, what kind of clients/customers? How many would you need to talk to? How often?

- Does the job involve reading and/or writing research papers?

- What are some of the tools that the team can’t work without?

In this book, I use the term “machine learning engineer” as an umbrella term to include research engineer, devrel engineer, framework engineer, data scientist, and the generic ML engineer.

Resources

- What machine learning role is right for you? by Josh Tobin, Full Stack Deep Learning Bootcamp 2019.

- Data science is different now by Vicki Boykis, 2019.

- The two sides of Getting a Job as a Data Scientist by Favio Vázquez, 2018.

- Goals and different roles in the Data Science platform at Netflix by Julie Pitt, live doc.

- Unpopular Opinion – Data Scientists Should Be More End-to-End by Eugene Yan, 2020.

13: What’s in a name? The semantics of Science at Lyft by Nicholas Chamandy (Lyft Engineering blog, 2018)

1.2 Types of companies

Different types of companies offer different roles, require different skills, and need to be evaluated using different criteria.

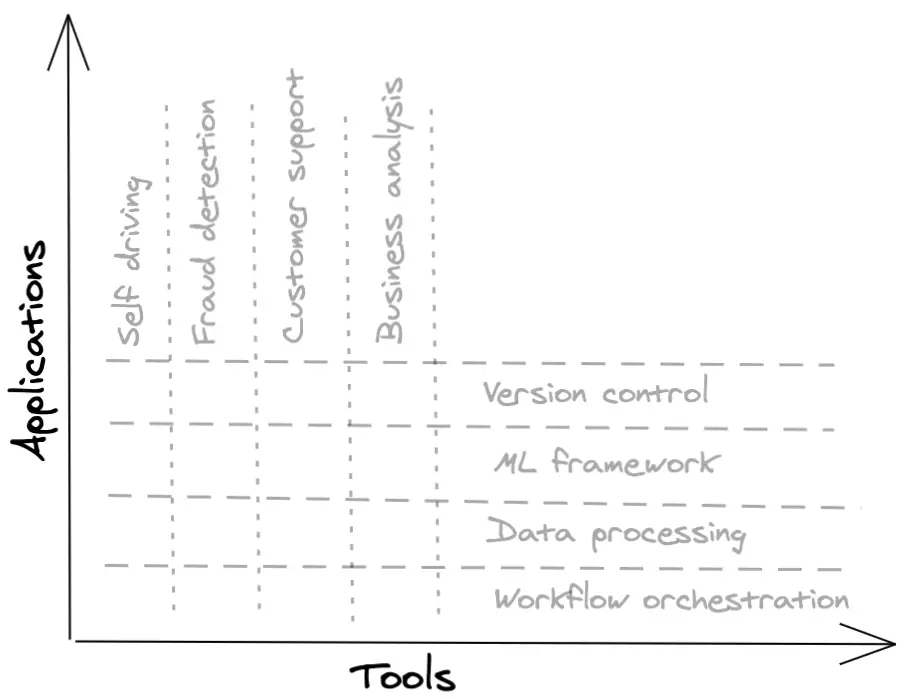

1.2.1 Applications companies vs. tooling companies

Personal story

After NVIDIA, I wanted to join an early-stage startup. I was considering two AI startups that were similar on the surface. Both had just raised seed rounds. Both had about 10 employees, most of these were engineers, and were ready for hypergrowth.

Startup A already had three customers, had just hired its first salesperson, and was ready to hire more salespeople to aggressively sell. Startup B had two customers, who they called design partners, and had no plan yet of hiring salespeople. I liked the work at startup B more but thought that startup A had a better sales prospect than startup B, and for early-stage startups, sales are essential for survival.

When I told this dilemma to a friend, who had invested in companies similar to both A and B, he pointed out to me that I forgot to consider the key difference between these two startups: A was an applications company while B was a tooling company.

Applications companies provide applications to solve a specific business problem such as business analytics or fraud detection. Tooling companies create tools to help companies build their own applications. Examples of tools are TensorFlow, Sparks, Airflow, Kubernetes.

In industry lingo, an application is said to target a vertical, whereas a tool targets a horizontal.

Applications are likely made to be used by subject matter experts who don’t want to be cumbered with engineering aspects (e.g. bankers who want applications for fraud detection or customer representatives who want applications to classify customer tickets). Tools are likely made to be used by developers. Some are explicitly known as devtools.

In the beginning, it’s easier to sell an application because it’s easier to see the immediate impact of an application and there’s less overhead for adopting an application. For example, you can tell a company that you can detect fraudulent transactions with 10

For a company to adopt a tool, there’s a lot of engineering overhead. They might have to swap out their existing tool, integrate the new tool with the rest of their infrastructure, retrain their staff or replace their staff. Many companies want to wait until a tool proves its usefulness and stability to a large number of companies first before adopting it.

However, for tooling companies, selling becomes a lot easier later on. Once your tool has reached a critical mass with a sufficient number of engineers who are proficient in it and prefer it, other companies might just become a user without you having to sell it. However, it’s really, really hard to get to that critical mass for new tools, and therefore, in general, tooling companies have higher risks than applications.

After talking to my friend, I realized that it’s normal for a company like A to have more customers than a company like B early on. But it doesn’t mean that A has a better sales prospect. In fact, having two large companies as design partners is a really good sign for B.

A year later, both companies acquired a similar number of customers and grew to have around 30 employees, but more than half of company A are in sales whereas 80

This new understanding helped me narrow down my choices. Because I preferred building tools for developers and wanted to work for an engineering-first instead of a sales-first organization, the decision became much easier.

⚠ Ambiguity ⚠ Whether a company is an application company or a tooling company might just be a go-to-market strategy. For example, you have a new tool that can address use cases that companies aren’t aware of yet, and you know it’d be hard to convince companies to make significant changes to their existing infrastructures for uncertain use cases. So you come up with a compelling use case that can’t be done without your tool, build an application around that use case, and sell that application instead. Once customers are aware of the usefulness of your tool, you switch to selling the tool directly.

Tip If you’re unsure whether the role involves working with an application or a tool, here are a few questions you may ask.

- Who are the main users of your product?

- What are the use cases you’re targeting?

- How many people does your company have? How many are engineers? How many are in sales?

1.2.2 Enterprise vs. consumer products

Another important distinction is between companies that build enterprise products (B2B – business to business) and companies that build customer products (B2C – business to consumer).

B2B companies build products for organizations. Examples of enterprise products are Customer relationship management (CRM) software, project management tools, database management systems, cloud hosting services, etc.

B2C companies build products for individuals. Examples of consumer products are social networks, search engines, ride-sharing services, health trackers, etc.

Many companies do both — their products can be used by individuals but they also offer plans for enterprise users. For example, Google Drive can be used by anyone but they also have Google Drive for Enterprise.

Even if a B2C company doesn’t create products for enterprises directly, they might still need to sell to enterprises. For example, Facebook’s main product is used by individuals but they sell ads to enterprises. Some might argue that this makes Facebook users products, as famously quipped: “If you’re not paying for it, you’re not the customer; you’re the product being sold.14”

These two types of companies have different sales strategies and engineering requirements. Consumer products tend to rely on viral marketing (e.g. invite your friends and get your next order for free) to reach a large number of users. Selling enterprise products tends to require selling to each user separately.

Enterprise companies usually have the role of solutions architect and its variances (solutions engineer, enterprise architect) to work with enterprise customers to figure out how to use the tool for their use cases.

Tip Since these two types of companies have different business models, they need to be evaluated differently when you consider joining them. For enterprise products, you might want to ask:

- How many customers do they have? What’s the customer growth rate (e.g. do they sign on a customer every month)?

- How long is their sales cycle (e.g. how long it usually takes them from talking to a potential customer to closing the contract)?

- How does their pricing structure work?

For consumer products, you might want to ask:

- How hard is it to integrate their product with their customers’ systems?

- How many active users do they have? What’s their user growth rate?

- How much does it cost to acquire a user? This is extremely important since the cost of user acquisition has been hailed as a startup killer15.

- Do users pay to use the product? If not, how are they going to make money?

- What privacy measures do they take when handling users’ data? E.g. you don’t want to work for the next Cambridge Analytica.

1.2.3 Startups or big companies

This is a question that I often hear from people early in their careers, and a question that can prompt heated discussions. I’ve worked at both big companies and startups, and my impressions were pretty much aligned with what is often said of the trade-off between big company stability and startup high impact (and high risk).

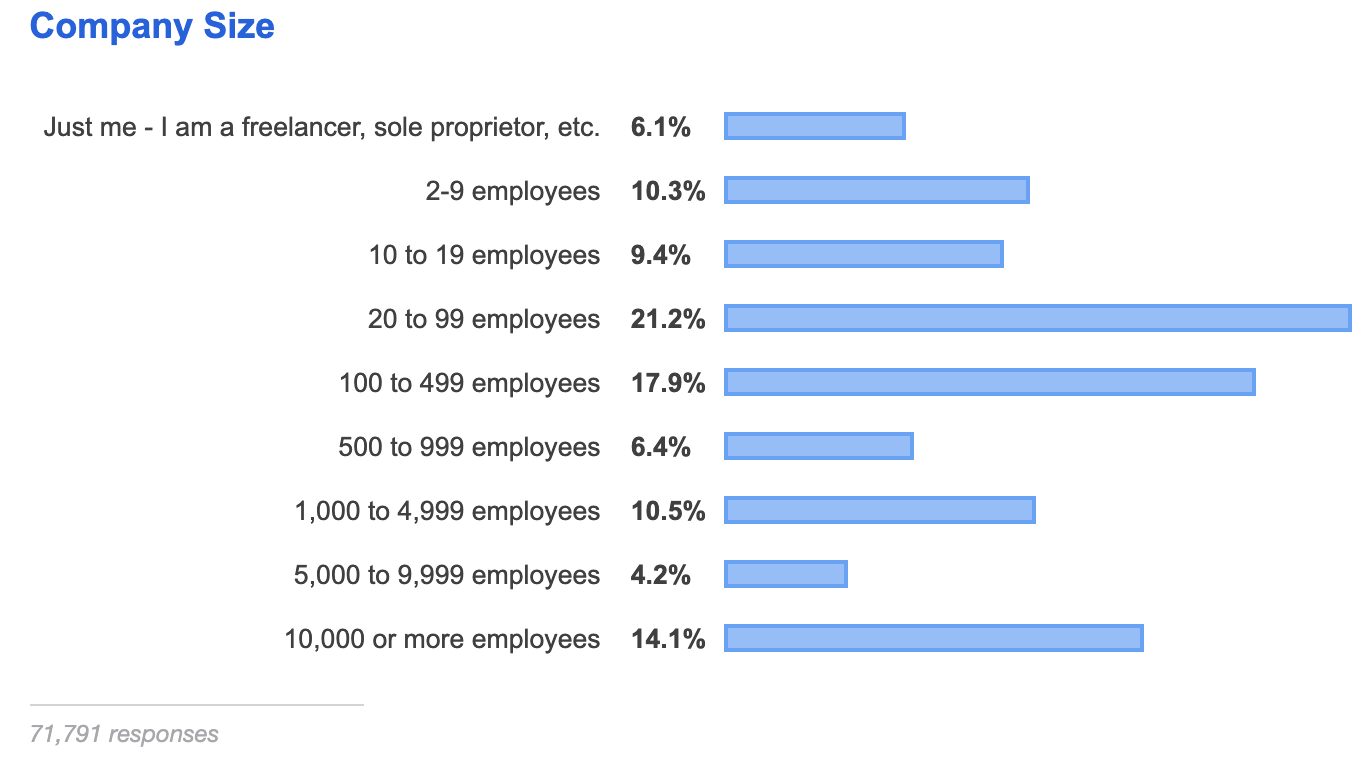

Statistically speaking, software engineers are more likely to work for a big company than a small startup. Even though there are more small companies than large corporations, large corporations employ more people. According to StackOver Developer Survey 2019, more than half of the 71K respondents worked for a company of at least 100 employees.

I couldn’t find a survey for ML specific roles, so I asked on Twitter and found similar results. This means that an average MLE most likely works for a company of at least 100 employees.

Tip A piece of advice I often hear and read in career books is that one should join a big company after graduation. The reasons given are:

- Big companies give you brand names you can put on your resume and benefit from for the rest of your life.

- Big companies often have standardized tech stack and good engineering practices.

- Major tech companies offer good compensation packages. Working for them even briefly will allow you to save money to pursue riskier ventures in the future.

- It’s good to learn how a big company operates since the small company that you later join or start might eventually grow big.

- You’ll know what it’s like to work for a big company so you’ll never again have to wonder.

- Most startups are bad startups. Working at a big company for a while will better equip you with technical skills and experience to differentiate a good startup from a bad one.

If you want to maximize your future career options, spending a year, or even just an internship, at a big company, is not a bad strategy. Whether you choose to join a startup or a big company, I hope that you get a chance to experience both environments and learn the very different sets of skills that they teach. You don’t know what you like until you’ve tried it. You might join a big company and realize you never want to join another big company again, or you might join a startup and realize you can’t live without the stability big companies give you. And if you believe that you’re offered a once-in-a-lifetime opportunity, take it, whether it’s at a big company or a startup.

- For immigrants, big companies might be the only option since small companies can’t afford to sponsor visas.

Personal story After graduation, I joined NVIDIA, not because it was a big company, but because I was excited about the opportunity to be part of a brand new team working on challenging projects. Looking back, I realized the brand name of NVIDIA helped my work to be taken seriously. Being an unknown employee at an unknown company would have thrown me even further into obscurity. I stayed at NVIDIA for a year and a half then joined a startup. I wanted a fast-moving environment with a steep learning curve, and I wasn’t disappointed.

Tip for engineers early in your careers: Know what you’re optimizing for With each career decision, be mindful of what you’re optimizing for so that you can get closer to your eventual goal. Some of the goals you can optimize for are:

- Money now: some people need or want immediate money, e.g. to pay off debts or to prepare for an economic downturn that they believe will happen in the near future. They might interview with multiple companies and go for the highest bidder. There’s nothing wrong with that.

- Money in the future: some are more concerned with being able to make a lot of money in the future. They might choose to pursue a Ph.D. that pays next to nothing but will help them get a highly paid job later.

- Impact: some focus on making an impact. You might work for a startup that allows you to make decisions that affect millions of users or work for a non-profit organization that changes people’s lives.

- Experience diversity: the most interesting people I’ve met optimize for new experiences. They choose jobs that allow them to do things that they’ve never done before.

- Brand name recognition: it’s not a bad strategy to choose to work for the most well-known company or person in your field. This brand can open many doors for you down the line.

You can optimize for different things at different stages in your life, but you can only optimize for one thing at a time. You might optimize for new experiences when you’re younger, money and recognition when you start having more responsibilities, then impact when you’ve had enough money to not have to worry about it. If you don’t know what you’re optimizing for, optimize for personal growth. Get a skill set that maximizes your options in the future14.

- Personal growth: those who optimize for this choose the job that allows them to learn the most, which in turn maximizes their career option. They might choose a job because it offers mentorship or allows them to work on new, challenging tasks.

Resources

Twitter thread: Advice for people who want to leave a big company to join a startup by Jensen Harris.

How to get rich in tech, guaranteed (Startups and Shit, 2016).

Twitter thread: Joining a startup is not a get-rich-quick scheme by me (shameless plug)

Chapter 2. Machine learning interview process

2.1 Understanding the interviewers’ mindset

To understand the interview process, it’s important to see it from the employers’ perspective. Not only candidates hate the hiring process. Employers hate it too. It’s expensive for companies, stressful for hiring managers, and boring for interviewers.

While a handful of well-known organizations are swamped with resumes, lesser-known companies struggle to attract talent. I keep hearing from small companies that it’s near impossible to compete with offers made by tech giants. After weeks of pulling out all the stops to court a candidate, the company makes an offer only to find out that FAAAM has outbid them. Companies often contract talent agencies that might charge 20-30

The competition for talent is especially brutal in Silicon Valley where the high number of companies per capita makes the odds in candidates’ favor. Recruiters, even those from companies that receive millions of resumes every year like Google17, aggressively court potential candidates even if these candidates aren’t looking. The majority of people who took a new job in 2018 weren’t searching for one18.

Some candidates express a mild annoyance at recruiters’ unsolicited contact. This attitude is often misguided because recruiters are your biggest ally: they work to get you hired. As a candidate, you want to have enough visibility so that recruiters reach out to you.

Every company says that they want to hire the best people. That’s not true. Companies want to hire the best people who can do a reasonable job within time and monetary constraints. If it takes a month and $10K to find candidate A who can do 93

You’d think that when companies hire, they know exactly what they want their new hires to do. Unless it’s an established team with routine tasks, hiring managers can seldom predict with perfect clarity what tasks need to get done or what skills are needed. Sometimes, companies can’t even be sure that they’ll need that person. Sam Altman, chairman of the startup accelerator Y Combinator and co-chairman of OpenAI, advises companies that, in the beginning, “you should only hire when you desperately need to.”

However, because hiring is so competitive and time-consuming, companies can’t afford to wait until they’re desperate. A desperate hire is likely to be a bad one. Sarah Catanzaro, a partner focusing on AI at Amplify Partners, advises her portfolio companies to start hiring when they’re 50

Imagine a startup that has just raised several million dollars and decided that they want to turn their logs into useful features. They think ML can help them, but don’t know how it’d be done. When the recruiter asks them for a job description, they whip up a list of generic ML-related skills and experiences they think might be necessary. Requirements such as “5 years of experience” or “degrees in related fields” are arbitrary and might prevent them from hiring the right candidate.

Tip Job descriptions are for reference. Apply for jobs you like even if you don’t have all the skills and experiences in the job descriptions. Chances are you don’t need them for those jobs.

Engineers start interviewing candidates after one to six months at a new company. New hires begin by shadowing more senior interviewers for a few interviews before doing it on their own, and that’s often all the training they get. Interviewers might have been in your shoes just months ago, and like you, they don’t know everything. Even after years of conducting interviews, I still worry that I’ll make a fool of myself in front of candidates and give them a bad impression of my company.

This lack of training means that even within the same company, interviewers may have different interviewing techniques and different ideas of what a good interview looks like. Rubrics to grade candidates — if in existence at all — are qualitative instead of quantitative (e.g. “candidates show good debugging skills’”).

Hiring managers also aggregate feedback from interviewers differently. Some hiring managers rely on the average feedback from all interviewers. Some rely on the best feedback — they’d prefer a candidate that at least one interviewer is really excited to work with to someone whose general feedback is good but no one is crazy about. Google is an example of a company that values enthusiastic endorsements over uniformly lukewarm reviews.

Some companies, in their aggressive expansion, might hire anyone as long as there’s no reason not to hire them. Other companies might only hire someone if there’s a great reason to hire them.

If you think one interview goes poorly, don’t despair. There are many random variables other than your actual performance that influence the outcome of an interview: the questions asked, other candidates the interviewer has seen before you, after you, the interviewer’s expectation, even the interviewer’s mood. It is, in no way, a reflection of your ability or your self-worth. Companies know that too, and it’s a common practice for companies to invite rejected candidates to interview again after a year or so.

17: Google Automatically Rejects Most Resumes for Common Mistakes You’ve Probably Made Too (Inc., 2018).

18: Your Approach to Hiring Is All Wrong (Peter Cappelli, Harvard Business Review, 2019)

2.1.1 What companies want from candidates

The goal of the interviewing process is for a company to assess:

-

- whether you have the necessary skills and knowledge to do the job

-

- whether they can provide you with a suitable environment to carry out that task.

Companies will be looking at both your technical and non-technical skills.

2.1.1.1 Technical skills

-

- Software engineering. As ML models often require extensive engineering to train and deploy, it’s important to have a good understanding of engineering principles. Aspects of computer science that are more relevant to ML include algorithms, data structures, time/space complexity, and scalability. You should be comfortable with the usual suspects: Python, Jupyter Notebook or Google Colab, NumPy, scikit-learn19, and a deep learning framework. Knowing at least one performance-oriented language such as C++ or Go can come in handy. BestPracticer has an interesting list of engineering skills needed for skills at different levels.

-

- Data cleaning, analytics, and visualization. Data handling is important yet often overlooked in ML education. It’s a huge bonus when a candidate knows how to collect, explore, clean data as well as knowing how to create training datasets. You should be comfortable with dataframe manipulation (pandas, dask) and data visualization (seaborn, altair, matplotlib, etc.). SQL is popular for relational databases and R for data analysis. Familiarity with distributed toolkits like Spark and Hadoop is also very useful.

-

- Machine learning knowledge. You should understand ML beyond citing buzzwords. Ideally, you should be able to explain every architectural choice you make. You might not need this understanding if all you do is clone an existing open-source implementation and it runs flawlessly on your data. But models seldom run flawlessly, so you’d need this understanding to evaluate potential solutions and debug your models.

-

- Domain-specific knowledge. You should have knowledge relevant to the products of the company you’re interviewing for. If it’s in the autonomous vehicle space, you’re probably expected to know computer vision techniques as well as computer vision tasks such as object detection, image segmentation, and motion analysis. If the company builds speech recognition systems, you should know about mel-filterbank features, CTC loss, and common benchmark datasets for the task of speech recognition.

2.1.1.2 Non-technical skills

-

- Analytical thinking, or the ability to solve problems effectively. This involves a step-by-step approach to break down complex problems into manageable components. You might not immediately know how to solve a problem, especially if it’s something you’ve never encountered before, but you should know how to systematically approach it. When hiring for junior roles, employers might value this skill more than anything else. You can teach someone Python in a few weeks, but it takes years to teach someone how to think.

-

- Communication skills. Real-world ML projects involve many different stakeholders from different backgrounds: ML engineers, DevOps engineers, subject matter experts (e.g. doctors, bankers, lawyers), product managers, business leaders. It’s important to communicate technical aspects of your ML models to people who are also involved in the developmental process but don’t necessarily have technical backgrounds.It’s hard to work with someone who can’t explain what they are doing. If you have a brilliant idea but nobody understands it, it’s not brilliant. Keep in mind that there’s a huge difference between fundamentally complex ideas and ideas made complicated by the author’s inability to articulate them.

-

- Experience. Whether you have completed similar tasks in the past and whether you can generalize from those experiences to future tasks. The tech industry is notorious for downplaying experience in favor of ready-to-burn-out twentysomethings. However, in ML where improvements are often made from empirical observations, experience makes all the difference between having a model that performs well on a benchmark dataset and making it work in real-time on real-world data. Experience is different from seniority. There are complacent engineers who’ve worked for decades with less experience than an inquisitive college student.

-

- Leadership. In this context, leadership means the ability to take initiative and complete tasks. If you’re assigned a task, will you be able to do it from start to finish without someone holding your hand? You don’t need to know how to do all components on your own, but you should know what help you need and be proactive in seeking it. This quality can be evaluated based on your past projects. In school or in your previous jobs, did you only do what you were told or did you seize opportunities and take initiative?

The skillset required varies from role to role. See section 1.1 Different machine learning roles for differences among roles.

2.1.1.3 What exactly is culture fit?

There’s one thing that companies look for that isn’t a skill and sometimes a source of contention: culture fit. Some even argue that this is a proxy to create an exclusive culture — managers hire only people who look like them, talk like them, and come from the same background as them.

Some companies have switched the term “culture fit” for terms like “value alignment”. A fit should be aligned to values, not lifestyle, e.g. whether you value constructive criticism, not whether you go out to drink every Sunday afternoon.

For most big companies, because their culture is already established, one new employee is unlikely to change the office dynamic and culture fit boils down to whether you’re someone people would like to work with (e.g. you’re not an asshole, you’re not defensive, you’re a team player). For small organizations, culture fit is more important as companies want people who share their mission, drive, ethics, and work habits.

Value alignment is also about you evaluating whether this is a company you want to work for. Do you believe in their mission and vision? Are their current employees the type you want to be around? Will the company provide a good environment for you to grow? One candidate told me he turned down an offer after being invited to the company’s push-up competition. He didn’t feel like a culture that places so much importance on testosterone-filled activities would be a good fit for him.

2.1.1.4 Junior vs senior roles

Companies might put junior and senior roles on different hiring processes. Junior candidates, who haven’t proved themselves through previous working experience, might have to go through more screenings. Amazon, for example, only requires coding challenges for junior candidates. Senior candidates, however, might be asked more difficult questions. For example, a few companies have told me that they only give the machine learning systems design questions21 to more senior candidates since they don’t think junior candidates would have the context to do those questions well.

A hiring manager at NVIDIA told me: “When you hire senior engineers, you hire for skills. When you hire junior engineers, you hire for attitude.” I’ve heard this sentiment echo in multiple places.

A senior director at a public tech company told me that when he interviews junior candidates, including interns and recent college graduates, he cares more about how they think, how they respond, and how they adapt. A junior candidate with weaker technical skills but is willing to learn might be preferred over another junior candidate with stronger technical skills but a horrible attitude.

2.1.1.5 Do I need a Ph.D. to work in machine learning?

No, you don’t need a Ph.D. to work in machine learning.

Those who think that a Ph.D. is needed often cite job posts for research scientists that list “Ph.D.” as a requirement. First, research scientist roles make up a very small portion of the ML ecosystem. No other roles, including the popular ML engineer, require a Ph.D.

Even for research scientists, there are plenty of false negatives. For example, OpenAI, one of the world’s top AI research labs, lists only two requirements for their research scientist position:

-

- track record of coming up with new ideas in machine learning

-

- past experience in creating high-performance implementations of deep learning algorithms (optional)22. The long list of people who have done amazing work in machine learning but don’t have a Ph.D. includes the current OpenAI CTO, IBM Watson master inventor, PyTorch creator, Keras creator, etc.

Companies know that you don’t need a Ph.D. to do ML research, but still require a Ph.D. because it’s a signal that you’re serious about research. At many companies, the people who screen your resumes aren’t technical and therefore rely on weak signals like Ph.D. to decide whether to pass your resume to the hiring managers.

Engineering roles that require PhDs are the exceptions, not the norm. Some candidates complain that they get rejected by big companies because they don’t have Ph.D.’s. Unless the rejections explicitly say so, don’t confuse correlation with causation. People with PhDs get rejected too.

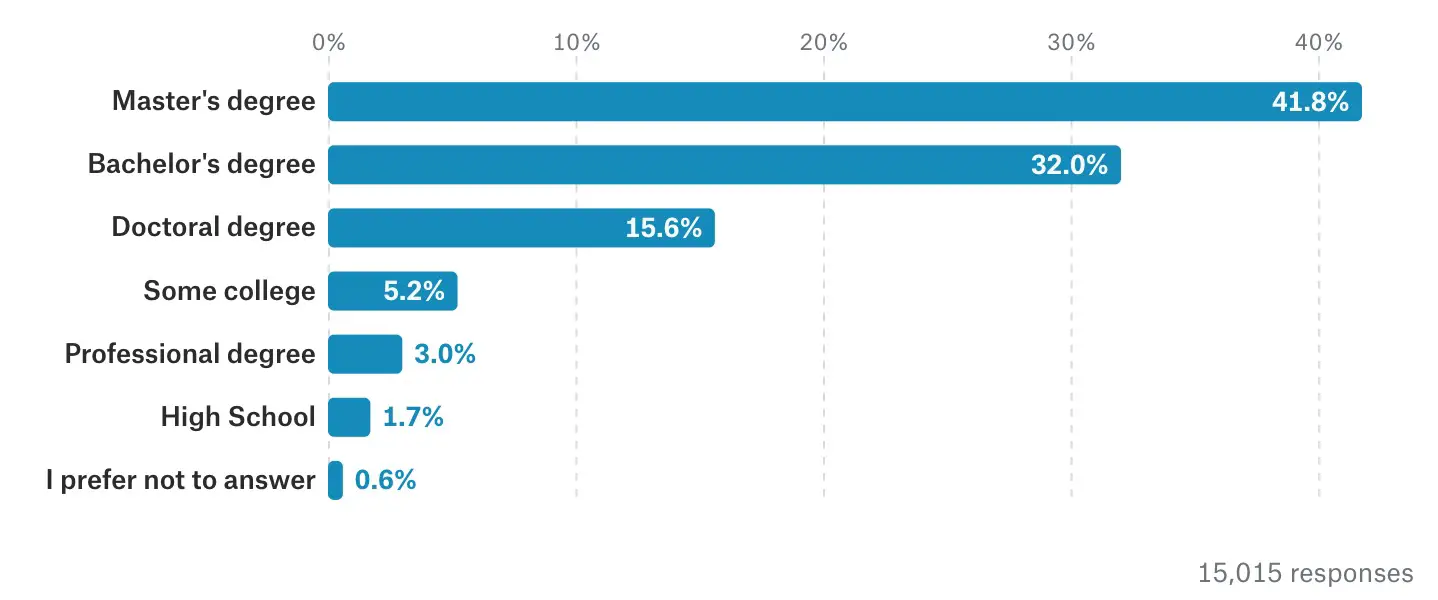

In November 2017, Kaggle surveyed 16,000 of their users and found that 15.6

If you’re serious about research, a Ph.D. is encouraged. However, you shouldn’t let not having a Ph.D. stop you from applying for a job. If you’re interested in a company, build up your portfolio, and apply.

2.1.2 How companies source candidates

To get hired, it might be helpful to put yourself where employers are looking. Out of all possible channels for sourcing candidates, referrals are, by far, the best channel. Recruiters have, for a long time, unanimously agreed on the effectiveness of referrals. Here are some numbers:

-

- Across all jobs, referrals account for 7

Sam Altman, CEO of OpenAI and the former president of Y Combinator, wrote that: “By at least a 10x margin, the best candidate sources I’ve ever seen are friends and friends of friends.”

Lukas Biewald, founder of two machine learning startups Figure Eight and Weights & Biases, analyzed the performance of 129 hires and concluded that:

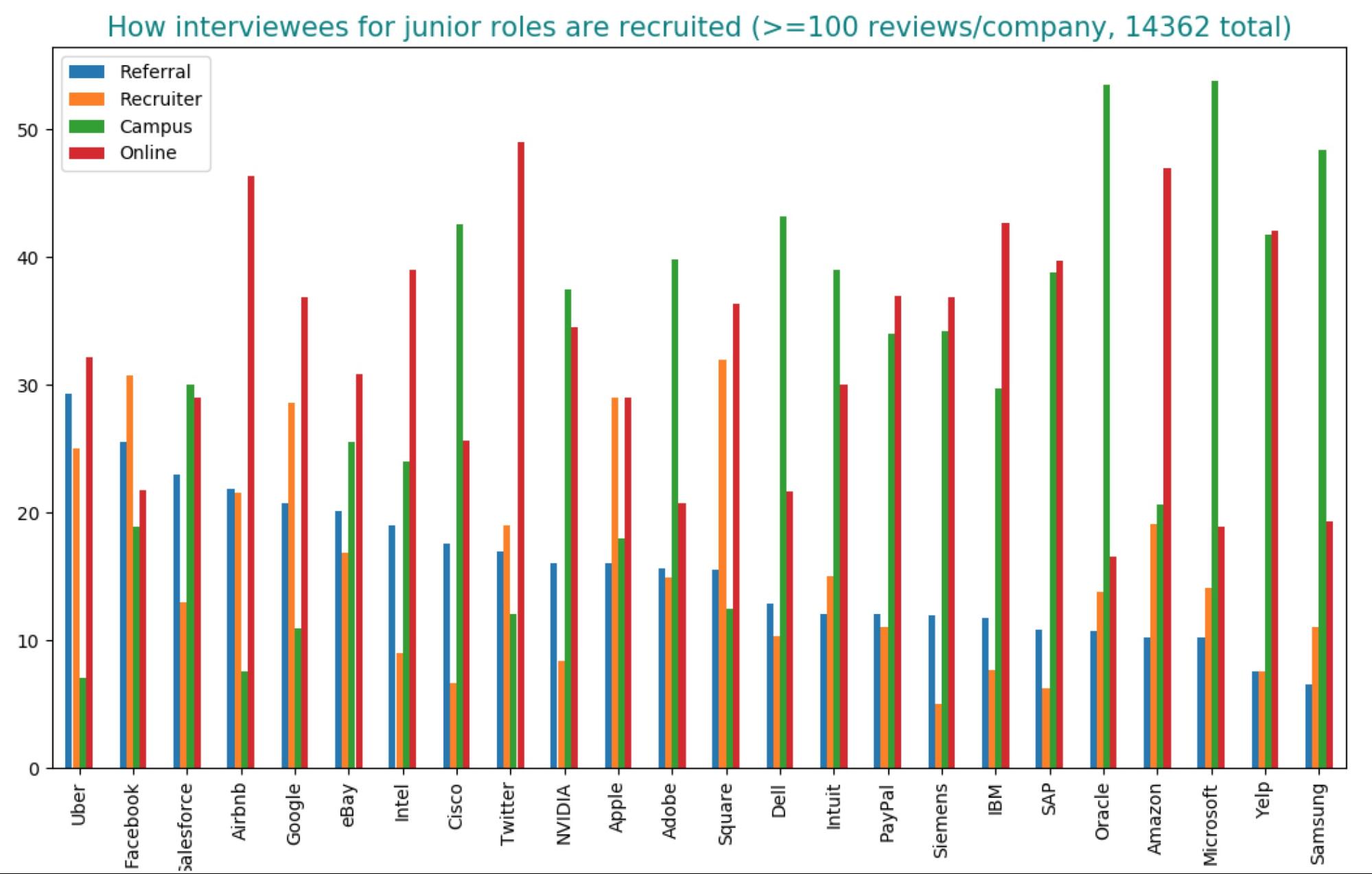

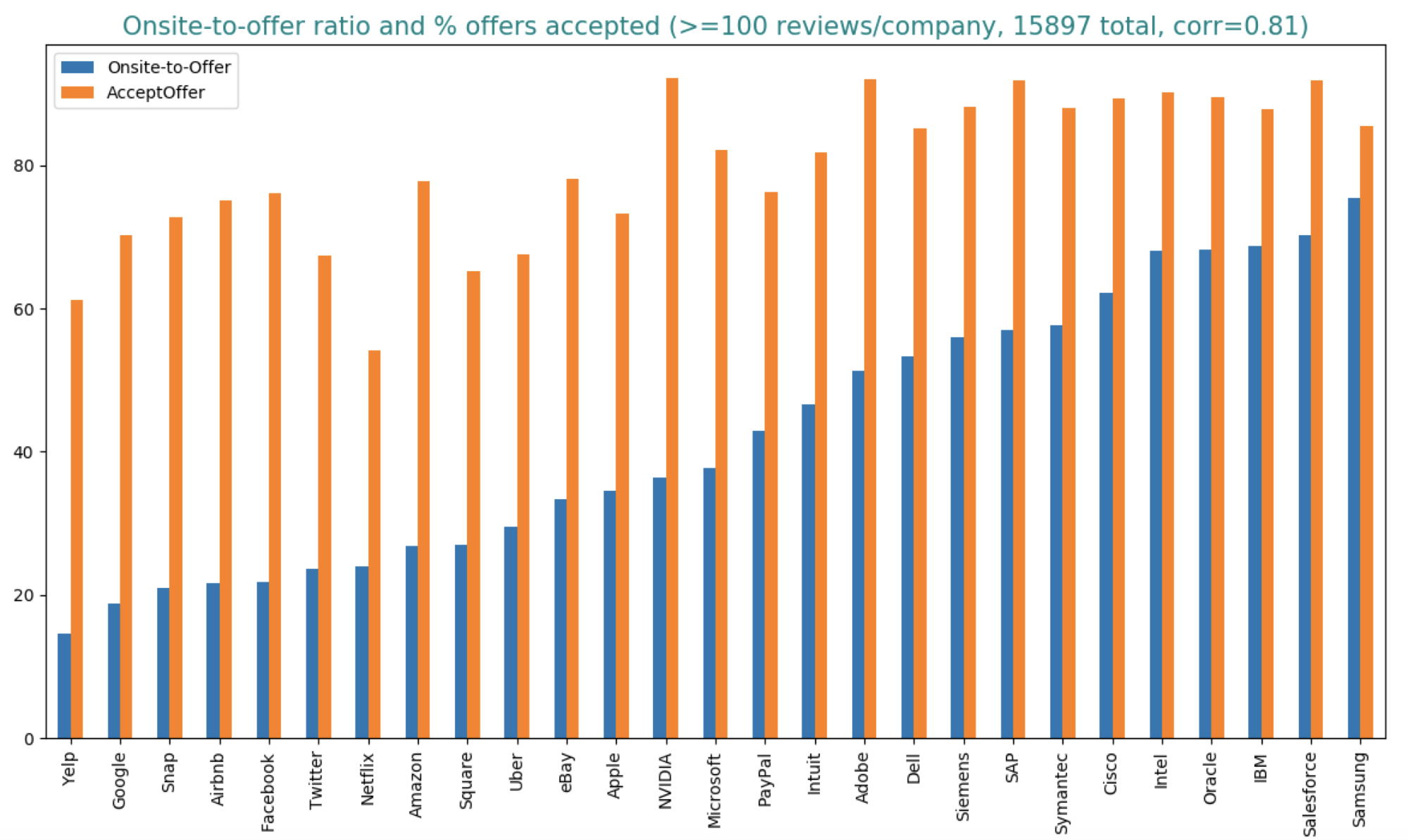

An analysis of 15,897 Glassdoor interview reviews for software engineering related roles at 27 major tech companies showed that: “For junior roles, about 10 – 20

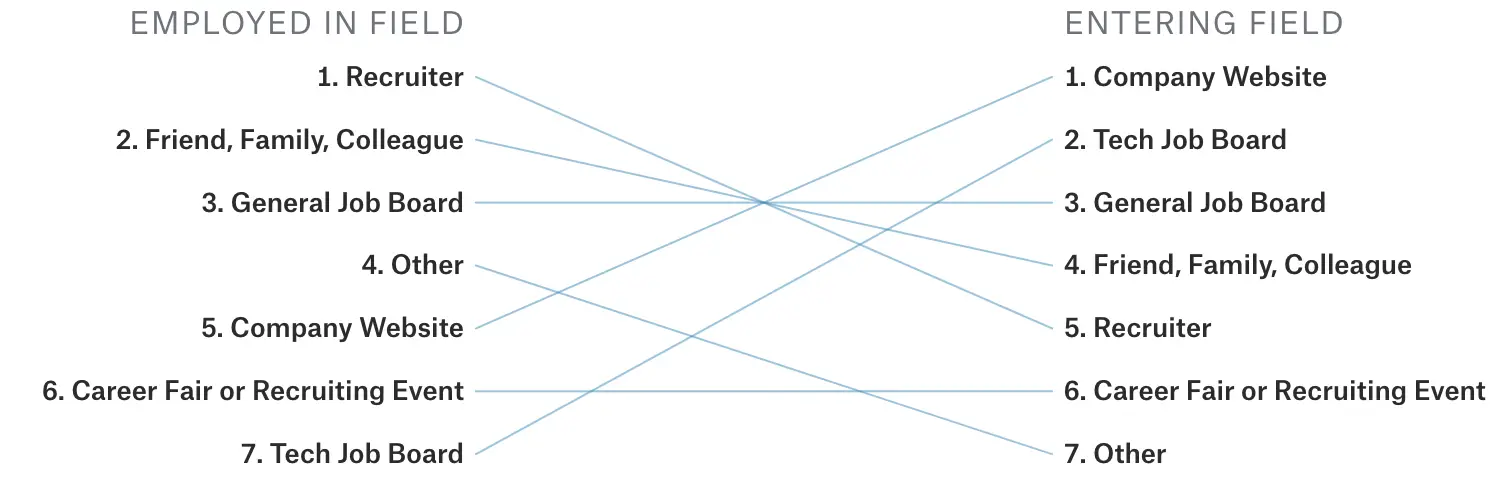

The State of Data Science & Machine Learning survey in 2017 by Kaggle shows that while most people seeking to enter the field look for jobs through company websites and tech job boards, most people already employed in the field got their jobs through recruiters’ outreach or referrals. For junior roles, the biggest source for onsite candidates is campus recruiting. Microsoft and Oracle have more than half of their interviewees recruited through campus events such as career fairs and tech talks. Internet giants like Google, Facebook, and Airbnb rely less on campus recruiting, but it still accounts for between 20 and 30

From the employers’ perspective, targeting their most promising sources can reduce the hiring cost as well as the risk of disastrous hires. It is, therefore, not surprising that the default message to most candidates who submit their resumes through less promising sources like online applications is “Thank you, next.” This process is far from ideal as it creates an exclusive, anti-meritocratic environment. Many qualified people are rejected simply because they don’t go to the right school or don’t have the right network. If you’re one of these statistically unlucky candidates, one thing you can hope for is that you have a set of skills and/or portfolio that attract recruiters. Around 15 to 25

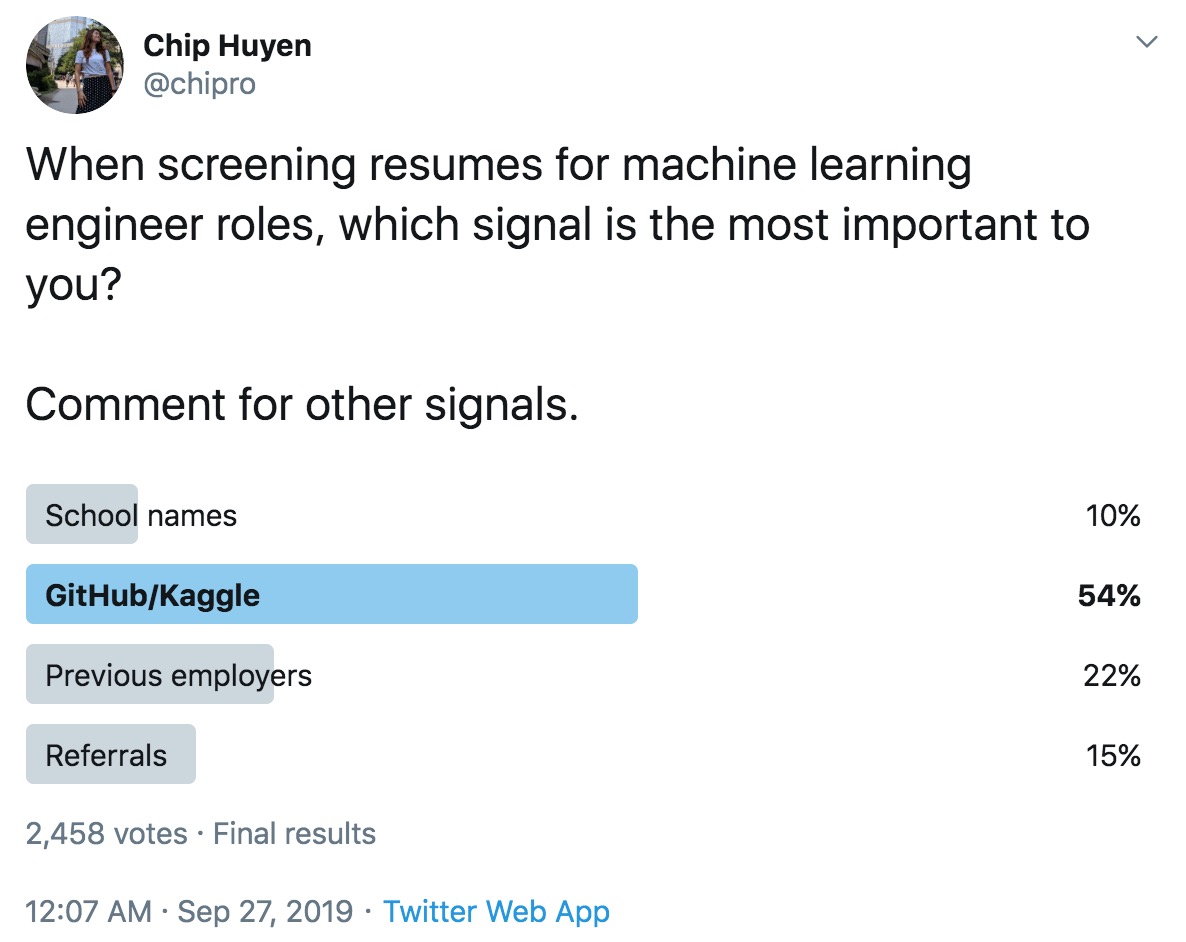

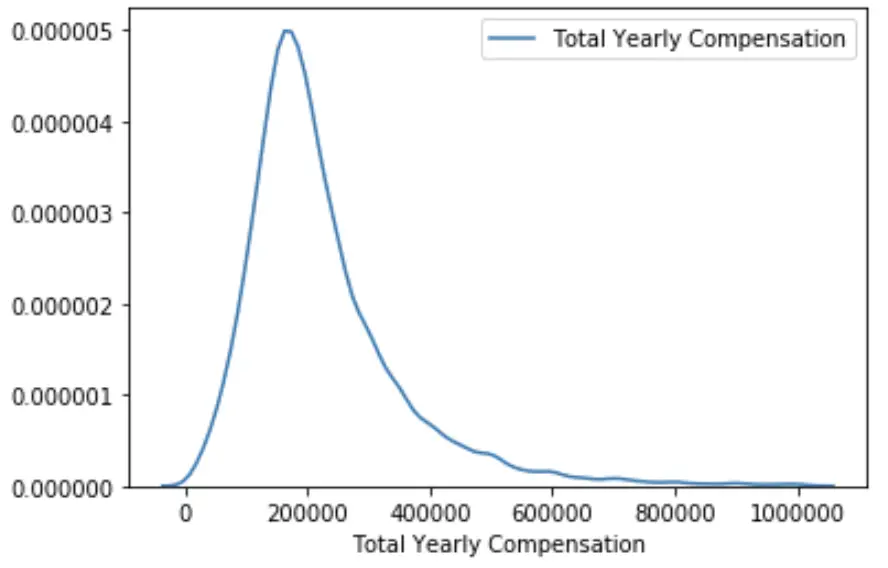

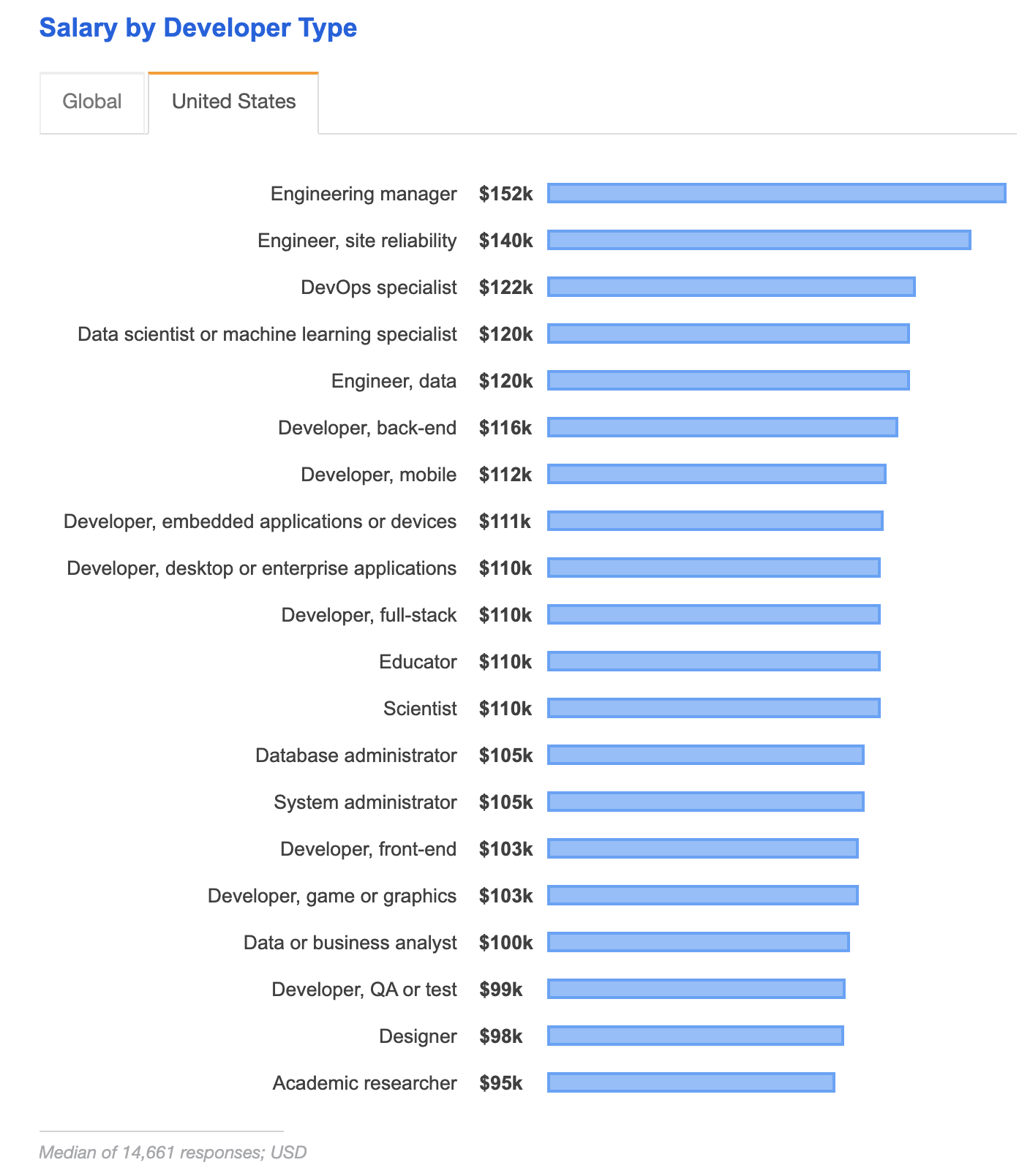

If all else fails, submit your applications and hope for the best. Companies that are the friendliest to online applicants are Twitter, Amazon, and Airbnb with roughly half of their onsite candidates being online applicants. Companies among the most likely to pass on hopeful online applicants are Facebook, Microsoft, and Oracle. Accurately evaluating candidates is very challenging. First, you can only evaluate something as well as your evaluators allow. Companies can only evaluate a candidate to the extent of the interviewers’ knowledge. If your interviewer has a shallow understanding of X, they won’t be able to evaluate your in-depth understanding of X. Many companies, including those who claim to be ML companies, don’t already have a strong in-house ML team to act as good evaluators35. Second, even strong in-house teams don’t always mean strong evaluators. Therefore, companies have to rely on signals to help them predict whether a candidate would be a good fit. As you might have already suspected, pedigrees make for strong signals. It’s not a coincidence that companies like to advertise how many ex-Googlers or ex-Facebookers they have on the payroll. If you’ve worked as a full-time ML engineer at Google, you must have passed its ML interviews and learned good engineering practices from Google. On resumes, college names matter but not much. Their importance is inversely proportional to seniority. If someone, with all the privileges of an elite education, still has no interesting past projects to put on their resume, the fancy college name might even hurt. However, going to a popular engineering school has several benefits. First, given two equally mediocre resumes, one from MIT, the other from a college nobody has ever heard of, the recruiters might be more inclined to give the one from MIT a call. Second, popular engineering colleges give you access to recruiters who hire from campus events. Third, you’ll likely have classmates at big companies who can refer you. If you’re a recent graduate, your college name might matter less than your GPA, which shows your dedication during your studies. Still, your GPA doesn’t matter as much if you have other things to show. I’ve had only one employer asking for my GPA, and it was after I’d got the offer so that they could put it in their database. The strongest signal is past experience, especially experience similar to the job you’re applying for. The experience can be work done at your previous jobs, projects you do independently, or competitions you enter. If you’ve placed highly in Kaggle competitions, made significant contributions to open-source projects, presented papers at top-tier conferences, written in-depth technical blog posts, self-published books, or done any interesting side projects, you should put them online and highlight them in your resume. There are so many things you can do to signal to people that you’re proactive, capable, and willing to work hard. When I asked on Twitter which signal is most important when screening for ML engineering roles, more than 50

The interview pipeline for ML roles evolved out of the tried-and-true software engineering interview pipeline and includes the same components that one can see in a traditional technical interviewing process. There are many people involved in the interviewing process. For hiring managers, it’s crucial to assign each interviewer a set of skills to evaluate, so that different interviewers ask different questions and that collectively, they get a holistic picture of where you’re at with all the skills they care about. One interviewer might ask you about theories, a couple about coding, one about ML systems design. Ideally, the interviewer tells you the skills they want to focus on so that you can tailor your answers to highlight those skills. If they don’t, ask. Recruiters are often encouraged to share with candidates the names of their interviewers. If they don’t, ask. You can look up your interviewers beforehand to learn what they do. It’ll give you a sense of not only what you’ll be doing if you join the company, but also your interviewers’ areas of interest. You should also ask about the team you’re being considered for. At most companies, you interview for a specific team. However, if you apply to companies such as Google and Facebook, you’re matched with a team after you’ve passed. This means that it’s possible to pass their interview process without getting an offer if no team takes you, though it’s rare40. The following list of interview components is long and intimidating, but companies usually use only a subset of them. The number of interviews in each component also varies. Companies might skip any step if they’re confident about your ability. Strong candidates might even be invited directly to onsites without all the previous steps. On the flip side, candidates that need to travel for onsites might be vetted more rigorously beforehand. The entire process can be long and tiring, but it’s long and tiring for every candidate. You don’t have to answer all questions flawlessly. You only need to do better than other candidates. No company expects you to know everything. The field is moving so fast it’s unrealistic to expect any candidate to know all the latest papers and techniques. Given how expensive, time-consuming, and inaccurate the traditional interview process is, companies are experimenting with new interviewing formats. Hiring at a small company is very different from hiring at a big company. One reason is that big companies make a lot of hires, so they need to standardize their process. Small companies refine the process as they go. Another reason is that big companies can afford to occasionally make bad hires, whereas a few bad hires can run a small company into the ground. As a result, processes at smaller companies can adapt to each role and each candidate. Processes at big companies can be rigid and bureaucratic and might involve questions irrelevant to the role or the candidate. The standardization at big companies also means that the process is more hackable — thorough preparation can substantially increase your odds. Another important difference is that big companies can afford to hire specialists, who are great in only a small area. Startups are unpredictable and ever-changing, so they might care less about what you do best and more about whether you can address their most urgent needs. It’s typically much easier to interview for an internship and then get a return offer as a full-time employee than to interview for a full-time position directly. The interview process is a proxy to predict how well you will perform on the job. If you’ve already interned with a team, they know your ability and fit, and therefore might prefer you to an unknown candidate. At major tech companies, intern programs exist to provide a steady, reliable source for full-time talent. An average intern at Facebook makes $8,000/month, almost twice as much as an average American full-time worker43, and Facebook isn’t even the highest bidder44. It’s hard to justify this salary unless it can offset the recruiting cost later. The interview process for interns is less complicated because an internship is less of a commitment. If a company doesn’t like an intern, that intern will be gone in three months. But if they don’t like a full-time employee, firing that person is expensive. The number of full-time positions is subjected to a strict headcount, but the number of interns often isn’t. Even when a company freezes the hiring process, such as to cut costs, they might still hire interns. If your internship doesn’t go terribly wrong and the company is hiring, you’ll likely get an intern-to-full-time offer. At NVIDIA, the majority of full-time offers for new graduates go to their interns. Rachelle Gupta, an ex-recruiter for Google and GitHub, wrote in one of her answers on Quora that: “Ranges [of the intern conversion rates] are between the high 60’s