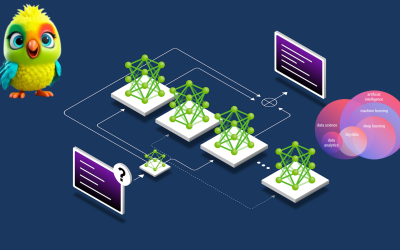

MoE Explained and Visualized: The Architecture Behind Efficient Large Language Models

What is Mixture of Experts?

Mixture of Experts (MoE) is a technique that uses many different sub-models (or “experts”) to improve the quality of LLMs. Two main components define an MoE:

- Experts: each feedforward neural network (FFNN) layer now has a set of “experts”, of which a subset can be chosen. These “experts” are typically FFNNs themselves.
- Router or gate…
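The two components listed above can be sketched in plain Python. This is a minimal, hypothetical illustration, not the article's own implementation. Because the router description is cut off here, the sketch assumes one common design: the router is a single linear layer that produces one score per expert, a softmax turns those scores into probabilities, and only the top-k experts process each token, with their probabilities renormalized to sum to 1. Each expert is assumed to be a small two-layer FFNN with a ReLU. The names `Expert`, `MoELayer`, `route`, and `top_k` are illustrative only.

```python
import math
import random


def matvec(w, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def relu(x):
    return [max(0.0, v) for v in x]


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


class Expert:
    """One expert: a small two-layer FFNN (d_model -> d_hidden -> d_model)."""

    def __init__(self, d_model, d_hidden, rng):
        self.w1 = [[rng.gauss(0, 0.1) for _ in range(d_model)] for _ in range(d_hidden)]
        self.w2 = [[rng.gauss(0, 0.1) for _ in range(d_hidden)] for _ in range(d_model)]

    def __call__(self, x):
        return matvec(self.w2, relu(matvec(self.w1, x)))


class MoELayer:
    """Replaces a single FFNN with several experts plus a router (assumed top-k design)."""

    def __init__(self, d_model, d_hidden, num_experts, top_k, seed=0):
        rng = random.Random(seed)
        self.experts = [Expert(d_model, d_hidden, rng) for _ in range(num_experts)]
        # Router: a linear map from a token vector to one logit per expert.
        self.w_router = [[rng.gauss(0, 0.1) for _ in range(d_model)] for _ in range(num_experts)]
        self.top_k = top_k

    def route(self, x):
        """Return (expert_index, weight) pairs for the top_k experts."""
        probs = softmax(matvec(self.w_router, x))
        top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[: self.top_k]
        # Renormalize so the selected experts' weights sum to 1.
        total = sum(probs[i] for i in top)
        return [(i, probs[i] / total) for i in top]

    def __call__(self, x):
        """Weighted sum of the chosen experts' outputs; unchosen experts never run."""
        chosen = self.route(x)
        out = [0.0] * len(x)
        for i, weight in chosen:
            y = self.experts[i](x)
            out = [o + weight * v for o, v in zip(out, y)]
        return out, [i for i, _ in chosen]


if __name__ == "__main__":
    layer = MoELayer(d_model=4, d_hidden=8, num_experts=4, top_k=2)
    output, used = layer([0.5, -1.0, 0.25, 2.0])
    print(len(output), len(used))  # → 4 2
```

In this sketch only `top_k` of the `num_experts` experts are evaluated for each token, so per-token compute grows with k rather than with the total number of experts, while the layer as a whole still holds the parameters of every expert.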