Everything You Need to Know to Build a Large Language Model (LLM) from Scratch: Architecture, Tokenization, Training & Deployment

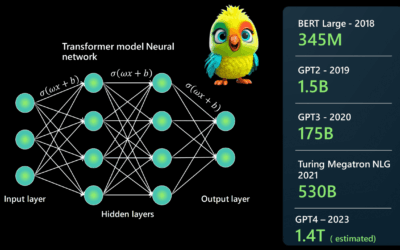

What Are LLMs?

LLMs are machine learning models trained on vast amounts of text data. They are built on the transformer architecture, a neural network design introduced in the 2017 paper “Attention Is All You Need”. Transformers use self-attention, which lets each token weigh its relationship to the other tokens in a sequence. This allows them to capture context and long-range dependencies in text, which makes them well suited to natural language tasks.

1. Architectural Types of Language Models (Expanded with…
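To make the attention mechanism concrete, here is a minimal sketch of scaled dot-product attention, softmax(QKᵀ/√d_k)V, the core operation described in “Attention Is All You Need”. It is written in plain Python with no NumPy or deep learning framework, an assumption made so the example is self-contained. It also omits masking, multiple heads, and learned projections, so treat it as an illustration rather than a production implementation. The helper names `softmax` and `attention` are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    """Hypothetical helper: turn raw scores into weights that sum to 1."""
    # Subtract the max before exponentiating for numerical stability.
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Hypothetical helper: scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V.

    Simplified sketch: no causal mask, a single head, and Q, K, V are passed in
    directly instead of being produced by learned linear projections.
    """
    d_k = len(k[0])
    scale = 1.0 / math.sqrt(d_k)
    out: Matrix = []
    for q_row in q:
        # Similarity between this query and every key, scaled by 1/sqrt(d_k).
        scores = [scale * sum(a * b for a, b in zip(q_row, k_row)) for k_row in k]
        weights = softmax(scores)
        # Output is the attention-weighted sum of the value vectors.
        out.append([sum(w * v_row[j] for w, v_row in zip(weights, v))
                    for j in range(len(v[0]))])
    return out


if __name__ == "__main__":
    # Three toy 2-dimensional "token" vectors used as queries, keys, and values.
    tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    for row in attention(tokens, tokens, tokens):
        print([round(x, 3) for x in row])
```

Each output row is a weighted average of the value rows, where the weights reflect how strongly that query matches each key. In a real transformer, Q, K, and V are computed from token embeddings through learned linear projections, and many such attention heads run in parallel.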