The Core of RAG Systems: Embedding Models, Chunking, Vector Databases

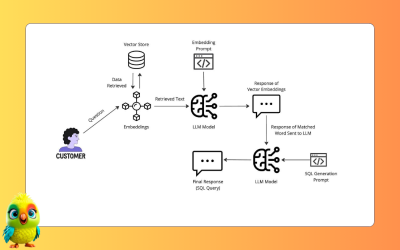

In the age of large language models (LLMs), Retrieval-Augmented Generation (RAG) has emerged as one of the most widely used approaches for building applications that ground model answers in retrieved documents. Whether you’re creating a chatbot, a document assistant, or an enterprise knowledge engine, three pillars make RAG work: embedding models, chunking, and vector databases. This article breaks down what they are,…