Matrices and Matrix Arithmetic Used for Machine Learning and Artificial Intelligence

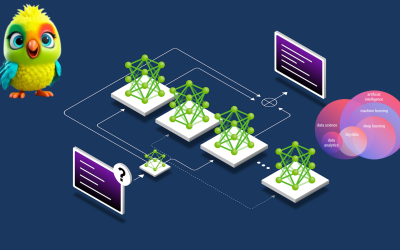

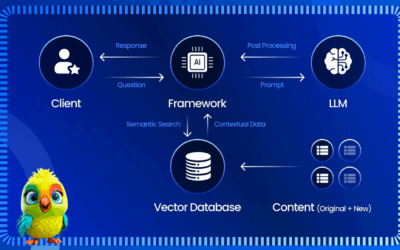

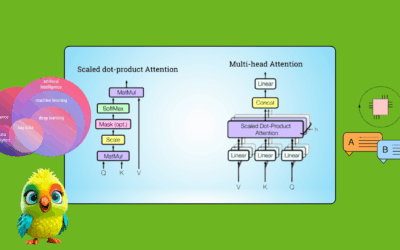

Matrices are omnipresent in math and computer science, both theoretical and applied. They often serve as data structures, for example as adjacency matrices in graph theory. They are also a computational workhorse in many AI fields, such as deep learning, computer vision, and natural language processing. Why is that? Why would a rectangular array of numbers, with famously…