The Concept of a Basis in Linear Algebra: A Foundational Perspective

- Introduction: The Quest for Coordinates

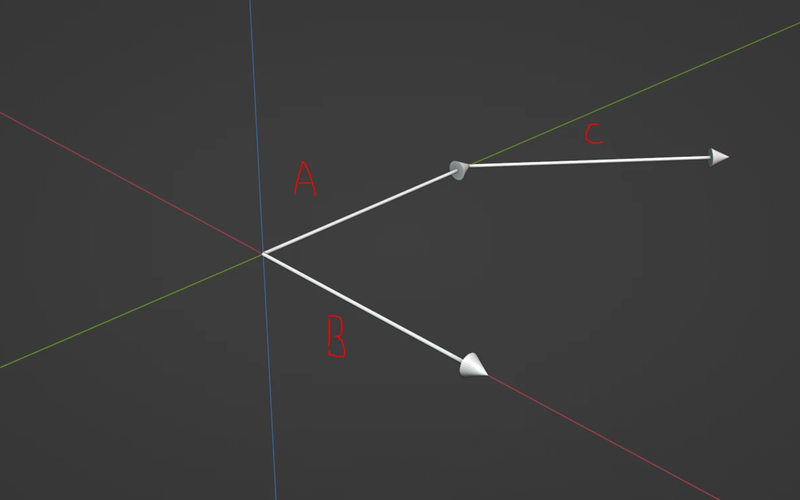

A fundamental theme in linear algebra is the representation of vectors in a way that facilitates computation and understanding. While we often visualize vectors as arrows in space, the true power of linear algebra emerges when we can describe vectors and linear transformations using lists of numbers, that is, coordinates. The bridge between the abstract vector and its concrete coordinate representation is the concept of a basis. A basis provides a frame of reference, a coordinate system, for the vector space. This article examines the precise definition of a basis, the two properties that define it (spanning and linear independence), and the consequences of choosing one.

- Preliminaries: Spanning and Linear Independence

To define a basis, we must first recall two essential concepts. Let \( V \) be a vector space over a field \( \mathbb{F} \) (typically \( \mathbb{R} \) or \( \mathbb{C} \)).

2.1 Linear Combinations and Span

A linear combination of a set of vectors \( \{\mathbf{v}_1, \mathbf{v}_2, \dots, \mathbf{v}_p\} \) in \( V \) is any vector of the form:

\[\mathbf{v} = c_1\mathbf{v}_1 + c_2\mathbf{v}_2 + \dots + c_p\mathbf{v}_p,\]

where \( c_1, c_2, \dots, c_p \in \mathbb{F} \).

The span of this set, denoted \( \operatorname{span}\{\mathbf{v}_1, \dots, \mathbf{v}_p\} \), is the collection of *all* possible linear combinations of those vectors. If \( \operatorname{span}\{\mathbf{v}_1, \dots, \mathbf{v}_p\} = V \), we say that the set spans \( V \). This means every vector in \( V \) can be represented as a linear combination of the \( \mathbf{v}_i \)'s.
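Deciding whether a given vector lies in a span is a rank computation: \( \mathbf{w} \in \operatorname{span}\{\mathbf{v}_1, \dots, \mathbf{v}_p\} \) exactly when appending \( \mathbf{w} \) as an extra column to the matrix \( [\mathbf{v}_1 \ \cdots \ \mathbf{v}_p] \) leaves its rank unchanged. The Python sketch below assumes vectors with rational entries given as plain lists, and uses exact `fractions.Fraction` arithmetic to avoid rounding; the helper names `rank` and `in_span` are illustrative, not taken from any library.

```python
from fractions import Fraction


def rank(rows):
    """Rank of a matrix (given as a list of rows), by exact Gaussian elimination."""
    m = [[Fraction(x) for x in row] for row in rows]
    ncols = len(m[0]) if m else 0
    r = 0  # number of pivot rows found so far
    for c in range(ncols):
        # Look for a nonzero entry in column c at or below row r.
        pivot = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if pivot is None:
            continue  # no pivot in this column
        m[r], m[pivot] = m[pivot], m[r]
        # Eliminate the entries below the pivot.
        for i in range(r + 1, len(m)):
            factor = m[i][c] / m[r][c]
            m[i] = [a - factor * b for a, b in zip(m[i], m[r])]
        r += 1
    return r


def in_span(vectors, w):
    """True if w is a linear combination of the (nonempty) list `vectors`."""
    A = [list(row) for row in zip(*vectors)]   # rows of [v1 ... vp]
    Ab = [row + [x] for row, x in zip(A, w)]   # rows of [A | w]
    return rank(A) == rank(Ab)


# The plane spanned by e1 and e2 in R^3 (the set from Non-Example 4.4 below).
S = [[1, 0, 0], [0, 1, 0]]
print(in_span(S, [2, -3, 0]))  # True
print(in_span(S, [0, 0, 1]))   # False
```

The sketch requires at least one vector; the span of the empty set is \( \{\mathbf{0}\} \), which this shortcut does not handle.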

2.2 Linear Independence

A set of vectors \( \{\mathbf{v}_1, \mathbf{v}_2, \dots, \mathbf{v}_p\} \) in \( V \) is linearly independent if the vector equation

\[c_1\mathbf{v}_1 + c_2\mathbf{v}_2 + \dots + c_p\mathbf{v}_p =\mathbf{0}\]

has *only* the trivial solution: \( c_1 = c_2 = \dots = c_p = 0 \).

Intuitively, this means no vector in the set is redundant; you cannot build any one of them from a linear combination of the others. If a set is not linearly independent, it is linearly dependent.
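Linear independence can be tested by solving the homogeneous system directly. The Python sketch below (exact rational arithmetic; `find_dependency` is a hypothetical name) runs Gauss-Jordan elimination on the matrix whose columns are the vectors. The first column that ends up without a pivot is a combination of the pivot columns before it, with weights read off from the reduced rows; moving it to the other side yields a nontrivial solution of \( c_1\mathbf{v}_1 + \dots + c_p\mathbf{v}_p = \mathbf{0} \). If every column has a pivot, only the trivial solution exists.

```python
from fractions import Fraction


def find_dependency(vectors):
    """Return coefficients c (not all zero) with sum(c[j] * vectors[j]) == 0,
    or None if the only solution is the trivial one (independent set)."""
    p = len(vectors)
    # Rows of the matrix whose columns are the given vectors.
    m = [[Fraction(x) for x in row] for row in zip(*vectors)]
    pivot_cols = []  # pivot_cols[k] = column holding the pivot of row k
    for c in range(p):
        r = len(pivot_cols)
        pivot = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if pivot is None:
            # Column c equals sum_k m[k][c] * (pivot column k), so
            # v_c - sum_k m[k][c] * v_{pivot_cols[k]} = 0.
            coeffs = [Fraction(0)] * p
            coeffs[c] = Fraction(1)
            for k, pc in enumerate(pivot_cols):
                coeffs[pc] = -m[k][c]
            return coeffs
        m[r], m[pivot] = m[pivot], m[r]
        # Scale the pivot to 1, then clear column c in every other row.
        pv = m[r][c]
        m[r] = [x / pv for x in m[r]]
        for i in range(len(m)):
            if i != r and m[i][c] != 0:
                f = m[i][c]
                m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        pivot_cols.append(c)
    return None


print(find_dependency([[1, 1], [1, -1]]))         # None: independent
print(find_dependency([[1, 0], [0, 1], [1, 1]]))  # a nontrivial solution: (-1, -1, 1)
```

Returning an explicit dependency, rather than just a yes/no answer, gives a certificate that can be checked by substitution.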

- The Formal Definition of a Basis

A basis \( \mathcal{B} \) for a vector space \( V \) is an ordered list of vectors \( (\mathbf{b}_1, \mathbf{b}_2, \dots, \mathbf{b}_n) \) in \( V \) (the order fixes the order of the coordinates defined below) that satisfies both of the following conditions:

- *Spanning:* \( \operatorname{span}(\mathcal{B}) = V \). (The set is large enough to generate the entire space.)

- *Linear independence:* The set \( \{\mathbf{b}_1, \mathbf{b}_2, \dots, \mathbf{b}_n\} \) is linearly independent. (The set has no redundant vectors.)

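Together, these two conditions form a "Goldilocks" requirement: a basis is a spanning set that is as small as possible and, equivalently, a linearly independent set that is as large as possible. Adding any vector of \( V \) to a basis breaks linear independence; removing any vector breaks the spanning property.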

Theorem 3.1 (Unique Representation): A set \( \mathcal{B} = \{\mathbf{b}_1, \dots, \mathbf{b}_n\} \) is a basis for \( V \) if and only if every vector \( \mathbf{v} \in V \) can be written in one and only one way as a linear combination of the vectors in \( \mathcal{B} \).

*Proof:*

(⇒) Suppose \( \mathcal{B} \) is a basis. Since it spans \( V \), for any \( \mathbf{v} \in V \), there exist scalars \( c_1, \dots, c_n \) such that \( \mathbf{v} = \sum_{i=1}^n c_i \mathbf{b}_i \). Suppose there is another representation \( \mathbf{v} = \sum_{i=1}^n d_i \mathbf{b}_i \). Subtracting these two equations gives:

\[\mathbf{0} = \mathbf{v} - \mathbf{v} = \sum_{i=1}^n (c_i - d_i)\mathbf{b}_i.\]

By the linear independence of \( \mathcal{B} \), it must be that \( c_i - d_i = 0 \) for all \( i \), so \( c_i = d_i \). Hence, the representation is unique.

(⇐) Suppose every \( \mathbf{v} \in V \) has a unique representation as a linear combination of the vectors in \( \mathcal{B} \). This immediately implies \( \mathcal{B} \) spans \( V \). To show linear independence, consider the representation of the zero vector: \( \mathbf{0} = \sum_{i=1}^n 0 \cdot \mathbf{b}_i \). Since the representation is unique, this is the *only* representation. Therefore, the equation \( \mathbf{0} = \sum_{i=1}^n c_i \mathbf{b}_i \) forces \( c_i = 0 \) for all \( i \), proving linear independence. ∎

The scalars \( c_1, c_2, \dots, c_n \) in the unique representation \( \mathbf{v} = c_1\mathbf{b}_1 + \dots + c_n\mathbf{b}_n \) are called the coordinates of \( \mathbf{v} \) relative to the basis \( \mathcal{B} \). We often write them as a column vector:

\[[\mathbf{v}]_{\mathcal{B}} = \begin{bmatrix} c_1 \\ c_2 \\ \vdots \\ c_n \end{bmatrix}.\]
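When \( V = \mathbb{F}^n \) and the basis vectors are given explicitly, finding \( [\mathbf{v}]_{\mathcal{B}} \) means solving the linear system whose coefficient matrix has the basis vectors as columns. A minimal Python sketch, assuming rational entries; `coordinates` is a hypothetical helper name. It raises an error when the square matrix turns out to be singular, which happens exactly when the listed vectors fail to form a basis.

```python
from fractions import Fraction


def coordinates(basis, v):
    """Solve c1*b1 + ... + cn*bn = v for (c1, ..., cn), where basis = [b1, ..., bn]
    are n vectors in F^n. Raises ValueError if they are not a basis."""
    n = len(basis)
    if any(len(b) != n for b in basis) or len(v) != n:
        raise ValueError("expected n vectors of length n and a vector of length n")
    # Augmented matrix [b1 ... bn | v], stored row by row.
    m = [[Fraction(b[i]) for b in basis] + [Fraction(v[i])] for i in range(n)]
    for c in range(n):
        pivot = next((i for i in range(c, n) if m[i][c] != 0), None)
        if pivot is None:
            raise ValueError("the given vectors do not form a basis")
        m[c], m[pivot] = m[pivot], m[c]
        pv = m[c][c]
        m[c] = [x / pv for x in m[c]]  # make the pivot equal to 1
        for i in range(n):             # clear column c in every other row
            if i != c and m[i][c] != 0:
                f = m[i][c]
                m[i] = [a - f * b for a, b in zip(m[i], m[c])]
    # The last column now holds the unique solution.
    return [m[i][n] for i in range(n)]


B = [[1, 1], [1, -1]]
print(coordinates(B, [3, 5]))  # [Fraction(4, 1), Fraction(-1, 1)]
```

Uniqueness of the result is exactly Theorem 3.1: a pivot in every column means the system has one and only one solution.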

- Standard Examples and Non-Examples

Example 4.1: The Standard Basis for \( \mathbb{R}^n \)

The most canonical example is the standard basis for \( \mathbb{R}^n \), denoted \( \mathcal{E} = \{\mathbf{e}_1, \mathbf{e}_2, \dots, \mathbf{e}_n\} \), where:

\[\mathbf{e}_1 = \begin{bmatrix} 1 \\ 0 \\ \vdots \\ 0 \end{bmatrix},\quad\mathbf{e}_2 = \begin{bmatrix} 0 \\ 1 \\ \vdots \\ 0\end{bmatrix}, \quad \dots, \quad\mathbf{e}_n = \begin{bmatrix} 0 \\ 0 \\ \vdots \\ 1 \end{bmatrix}.\]

* Spanning: Any vector \( \mathbf{v} = (v_1, v_2, \dots, v_n)^T \) can be written as \( \mathbf{v} = v_1\mathbf{e}_1 + v_2\mathbf{e}_2 + \dots + v_n\mathbf{e}_n \).

* Linear Independence: The equation \( c_1\mathbf{e}_1 + \dots + c_n\mathbf{e}_n = \mathbf{0} \) implies \( (c_1, c_2, \dots, c_n)^T = (0, 0, \dots, 0)^T \), so all \( c_i = 0 \).

The coordinates of \( \mathbf{v} \) relative to \( \mathcal{E} \) are just its standard components: \( [\mathbf{v}]_{\mathcal{E}} = \mathbf{v} \).

Example 4.2: A Non-Standard Basis for \( \mathbb{R}^2 \)

Consider the set \( \mathcal{B} = \left\{ \begin{bmatrix} 1 \\ 1 \end{bmatrix}, \begin{bmatrix} 1 \\ -1 \end{bmatrix} \right\} \).

* Linear Independence? Check if \( c_1 \begin{bmatrix} 1 \\ 1 \end{bmatrix} + c_2 \begin{bmatrix} 1 \\ -1 \end{bmatrix} = \begin{bmatrix} 0 \\ 0 \end{bmatrix} \). This gives the system:

\[\begin{cases}c_1 + c_2 = 0 \\c_1 - c_2 = 0\end{cases}\]

Adding the two equations gives \( 2c_1 = 0 \), so \( c_1 = 0 \), and then \( c_2 = 0 \). So, the set is linearly independent.

* Spanning? For any vector \( \begin{bmatrix} x \\ y \end{bmatrix} \in \mathbb{R}^2 \), we must find \( c_1, c_2 \) such that:

\[c_1 \begin{bmatrix} 1 \\ 1 \end{bmatrix} + c_2 \begin{bmatrix} 1 \\ -1 \end{bmatrix} = \begin{bmatrix} x \\ y \end{bmatrix}.\]

This system has the same coefficients as before, now with right-hand side \( (x, y) \): \( c_1 + c_2 = x \), \( c_1 - c_2 = y \). Solving gives \( c_1 = \frac{x+y}{2} \), \( c_2 = \frac{x-y}{2} \). A solution exists for all \( x, y \), so \( \mathcal{B} \) spans \( \mathbb{R}^2 \).

Thus, \( \mathcal{B} \) is a basis. The coordinates of \( \begin{bmatrix} 3 \\ 5 \end{bmatrix} \) in this basis are \( [\frac{3+5}{2}, \frac{3-5}{2}]^T = [4, -1]^T \).
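For two vectors in \( \mathbb{R}^2 \), the whole check fits in one formula: \( \{\mathbf{b}_1, \mathbf{b}_2\} \) is a basis exactly when \( \det[\mathbf{b}_1 \ \mathbf{b}_2] \neq 0 \), and Cramer's rule then gives the coordinates. This Python sketch (the function name `coords_2d` is hypothetical; it assumes integer or `Fraction` entries) reproduces the hand computation above.

```python
from fractions import Fraction


def coords_2d(b1, b2, v):
    """Coordinates of v relative to (b1, b2) in R^2 via Cramer's rule."""
    det = b1[0] * b2[1] - b2[0] * b1[1]  # determinant of the matrix [b1 b2]
    if det == 0:
        raise ValueError("b1 and b2 are linearly dependent, so not a basis")
    # Cramer's rule: replace one column at a time by v.
    c1 = Fraction(v[0] * b2[1] - b2[0] * v[1], det)
    c2 = Fraction(b1[0] * v[1] - v[0] * b1[1], det)
    return c1, c2


print(coords_2d((1, 1), (1, -1), (3, 5)))  # (Fraction(4, 1), Fraction(-1, 1))
```

Here \( \det = -2 \), and the two Cramer quotients simplify to \( \frac{x+y}{2} \) and \( \frac{x-y}{2} \), matching the formulas derived above.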

Non-Example 4.3: A Set that Spans but is Not a Basis

In \( \mathbb{R}^2 \), consider \( S = \left\{ \begin{bmatrix} 1 \\ 0 \end{bmatrix}, \begin{bmatrix} 0 \\ 1 \end{bmatrix}, \begin{bmatrix} 1 \\ 1 \end{bmatrix} \right\} \). This set spans \( \mathbb{R}^2 \) (the first two vectors alone do), but it is linearly dependent because \( \begin{bmatrix} 1 \\ 1 \end{bmatrix} = \begin{bmatrix} 1 \\ 0 \end{bmatrix} + \begin{bmatrix} 0 \\ 1 \end{bmatrix} \). Consequently, representations are not unique; for example, \( \begin{bmatrix} 1 \\ 1 \end{bmatrix} \) can be written as \( 1 \) times the third vector of \( S \), or as the sum of the first two vectors of \( S \).
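The failure of uniqueness is easy to confirm numerically: different coefficient lists produce the same vector. A short Python check, with `combine` as a hypothetical helper that evaluates a linear combination:

```python
def combine(coeffs, vectors):
    """Return the linear combination sum(c * v) of equal-length vectors."""
    n = len(vectors[0])
    return [sum(c * v[i] for c, v in zip(coeffs, vectors)) for i in range(n)]


S = [[1, 0], [0, 1], [1, 1]]
print(combine([0, 0, 1], S))   # [1, 1]
print(combine([1, 1, 0], S))   # [1, 1]
print(combine([2, 2, -1], S))  # [1, 1] as well
```

In fact every coefficient list of the form \( (t, t, 1-t) \) gives \( (1, 1)^T \), so this vector has infinitely many representations in terms of \( S \).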

Non-Example 4.4: A Set that is Linearly Independent but Not a Basis

In \( \mathbb{R}^3 \), consider \( S = \left\{ \begin{bmatrix} 1 \\ 0 \\ 0 \end{bmatrix}, \begin{bmatrix} 0 \\ 1 \\ 0 \end{bmatrix} \right\} \). This set is linearly independent, but it does not span \( \mathbb{R}^3 \). For example, \( \begin{bmatrix} 0 \\ 0 \\ 1 \end{bmatrix} \) is not in the span of \( S \).

- The Dimension Theorem and its Implications

A fundamental result about bases is that if a vector space \( V \) has a finite basis, then every basis for \( V \) has the same number of elements. This number is called the dimension of \( V \), denoted \( \dim(V) \). (The zero space \( \{\mathbf{0}\} \) has the empty set as its basis, so \( \dim(\{\mathbf{0}\}) = 0 \).)

Theorem 5.1 (a consequence of the Steinitz Exchange Lemma): Let \( V \) be a finite-dimensional vector space. Any linearly independent set in \( V \) can be extended to form a basis for \( V \), and any spanning set for \( V \) can be reduced to form a basis for \( V \).
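In \( \mathbb{F}^n \) the extension half of Theorem 5.1 can be carried out greedily: go through the standard basis vectors \( \mathbf{e}_1, \dots, \mathbf{e}_n \) and keep each one that is not already in the span of the vectors collected so far (equivalently, that raises the rank). Each kept vector preserves independence, and since the \( \mathbf{e}_i \) span \( \mathbb{F}^n \), the final list spans too. The Python sketch below assumes the input list is already linearly independent; `rank` and `extend_to_basis` are illustrative names.

```python
from fractions import Fraction


def rank(vectors):
    """Rank of the matrix whose rows are `vectors` (exact elimination)."""
    m = [[Fraction(x) for x in v] for v in vectors]
    r = 0
    for c in range(len(m[0]) if m else 0):
        pivot = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        for i in range(r + 1, len(m)):
            f = m[i][c] / m[r][c]
            m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        r += 1
    return r


def extend_to_basis(independent, n):
    """Extend a linearly independent list of vectors in F^n to a basis of F^n
    by appending standard basis vectors that are not yet in the span."""
    basis = [list(v) for v in independent]
    for j in range(n):
        e_j = [1 if i == j else 0 for i in range(n)]
        if rank(basis + [e_j]) > len(basis):  # e_j lies outside the current span
            basis.append(e_j)
    return basis


# The independent set from Non-Example 4.4, extended to a basis of R^3.
print(extend_to_basis([[1, 0, 0], [0, 1, 0]], 3))  # [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
```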

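The proof of Theorem 5.1 is constructive. In practice, to extract a basis from a finite spanning set in \( \mathbb{F}^m \), place the spanning vectors as the columns of a matrix and row reduce it with Gaussian elimination. The columns of the *original* matrix in the pivot positions form a basis for its column space, which is the span of the given vectors.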

Example 5.2: Find a basis for the subspace \( W \) of \( \mathbb{R}^3 \) spanned by:

\[\mathbf{v}_1 = \begin{bmatrix} 1 \\ 2 \\ 3 \end{bmatrix}, \quad\mathbf{v}_2 = \begin{bmatrix} 2 \\ 4 \\ 6 \end{bmatrix}, \quad\mathbf{v}_3 = \begin{bmatrix} 1 \\ 1 \\ 1 \end{bmatrix}, \quad\mathbf{v}_4 = \begin{bmatrix} 3 \\ 5 \\ 7 \end{bmatrix}.\]

Form the matrix \( A = \begin{bmatrix} \mathbf{v}_1 & \mathbf{v}_2 & \mathbf{v}_3 & \mathbf{v}_4 \end{bmatrix} \) and row reduce:

\[A = \begin{bmatrix}1 & 2 & 1 & 3 \\2 & 4 & 1 & 5 \\3 & 6 & 1 & 7\end{bmatrix} \sim\begin{bmatrix}1 & 2 & 1 & 3 \\0 & 0 & -1 & -1 \\0 & 0 & -2 & -2\end{bmatrix} \sim\begin{bmatrix}1 & 2 & 0 & 2 \\0 & 0 & 1 & 1 \\0 & 0 & 0 & 0\end{bmatrix}.\]

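The pivot columns are columns 1 and 3. Therefore, \( \{\mathbf{v}_1, \mathbf{v}_3\} \) is a basis for \( W \), and \( \dim(W) = 2 \). The non-pivot columns of the reduced matrix record how the discarded vectors depend on the kept ones: column 2 gives \( \mathbf{v}_2 = 2\mathbf{v}_1 \), and column 4 gives \( \mathbf{v}_4 = 2\mathbf{v}_1 + \mathbf{v}_3 \).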
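The reduction step can be sketched in Python with exact arithmetic; `rref` is an illustrative helper, not a library function. It returns the reduced row echelon form together with the zero-based pivot column indices, and the basis is then taken from the original vectors at those positions, as in the hand computation for Example 5.2.

```python
from fractions import Fraction


def rref(rows):
    """Reduced row echelon form (exact) and the list of pivot columns."""
    m = [[Fraction(x) for x in row] for row in rows]
    pivots = []
    r = 0
    for c in range(len(m[0]) if m else 0):
        pivot = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        pv = m[r][c]
        m[r] = [x / pv for x in m[r]]  # make the pivot equal to 1
        for i in range(len(m)):        # clear the rest of column c
            if i != r and m[i][c] != 0:
                f = m[i][c]
                m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        pivots.append(c)
        r += 1
    return m, pivots


vs = [[1, 2, 3], [2, 4, 6], [1, 1, 1], [3, 5, 7]]  # v1, v2, v3, v4
A = [list(row) for row in zip(*vs)]                # vectors as columns
R, pivots = rref(A)
basis = [vs[j] for j in pivots]  # original vectors, not rows of R
print(pivots)  # [0, 2]  (zero-based, i.e. columns 1 and 3)
print(basis)   # [[1, 2, 3], [1, 1, 1]]
```

Taking the basis from `vs` rather than from `R` matters: row operations change the column space, so the columns of `R` generally do not span \( W \).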

- Bases for Abstract Vector Spaces

The power of the basis concept extends far beyond \( \mathbb{R}^n \).

Example 6.1: The Vector Space of Polynomials \( P_n \)

Let \( P_n \) be the vector space of all polynomials of degree at most \( n \). A natural basis is the monomial basis:

\[\mathcal{B} = \{1, x, x^2, \dots, x^n\}.\]

* Spanning: By definition, any polynomial \( p(x) = a_0 + a_1x + a_2x^2 + \dots + a_nx^n \) is a linear combination of these basis vectors.

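* Linear Independence: Suppose \( a_0\cdot 1 + a_1 x + \dots + a_n x^n = 0 \) for every \( x \) (the zero polynomial). A nonzero polynomial of degree at most \( n \) has at most \( n \) roots, so it cannot vanish at every \( x \); equivalently, comparing coefficients with the zero polynomial forces \( a_0 = a_1 = \dots = a_n = 0 \).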

Thus, \( \dim(P_n) = n+1 \). The coordinates of a polynomial relative to this basis are simply its coefficients: \( [p(x)]_{\mathcal{B}} = [a_0, a_1, \dots, a_n]^T \).

Example 6.2: A Different Basis for \( P_2 \)

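Consider \( \mathcal{C} = \{1, 1+x, 1+x+x^2\} \). This is also a basis for \( P_2 \): its three polynomials have the distinct degrees 0, 1, and 2, so none is a linear combination of the others, and three linearly independent vectors in the 3-dimensional space \( P_2 \) form a basis. Let's find the coordinates of \( p(x) = 2 + 3x + 4x^2 \) relative to \( \mathcal{C} \). We need scalars \( c_1, c_2, c_3 \) such that: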

\[c_1(1) + c_2(1+x) + c_3(1+x+x^2) = 2 + 3x + 4x^2.\]

Expanding: \( (c_1 + c_2 + c_3) + (c_2 + c_3)x + (c_3)x^2 = 2 + 3x + 4x^2 \). This gives the system:

\[\begin{cases}c_1 + c_2 + c_3 = 2 \\c_2 + c_3 = 3 \\c_3 = 4\end{cases}\]

Solving from the bottom up: \( c_3 = 4 \), then \( c_2 = 3 - 4 = -1 \), then \( c_1 = 2 - (-1) - 4 = -1 \). So, \( [p(x)]_{\mathcal{C}} = \begin{bmatrix} -1 \\ -1 \\ 4 \end{bmatrix} \), whereas relative to the monomial basis the same polynomial has coordinates \( [2, 3, 4]^T \). The coordinate representation of a vector depends on the chosen basis.
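The system is upper triangular because each polynomial in \( \mathcal{C} \) introduces exactly one new power of \( x \), so it can be solved by back substitution. A Python sketch with exact fractions; polynomials are encoded as coefficient lists ordered from the constant term up (an assumption of this sketch), and `back_substitute` is a hypothetical helper.

```python
from fractions import Fraction


def back_substitute(U, b):
    """Solve U c = b for an upper-triangular U with nonzero diagonal,
    working from the last equation up."""
    n = len(U)
    c = [Fraction(0)] * n
    for i in reversed(range(n)):
        known = sum(U[i][j] * c[j] for j in range(i + 1, n))
        c[i] = (Fraction(b[i]) - known) / U[i][i]
    return c


# Columns are the coefficient vectors of 1, 1 + x, 1 + x + x^2
# (constant term first); b holds the coefficients of 2 + 3x + 4x^2.
U = [[1, 1, 1],
     [0, 1, 1],
     [0, 0, 1]]
print(back_substitute(U, [2, 3, 4]))  # coordinates -1, -1, 4
```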

- Conclusion: The Significance of a Basis

The choice of a basis \( \mathcal{B} \) for a vector space \( V \) establishes an isomorphism (a structure-preserving bijection) between \( V \) and \( \mathbb{F}^n \), where \( n = \dim(V) \). This isomorphism is given by the coordinate map:

\[\phi_{\mathcal{B}}: V \to \mathbb{F}^n, \quad \phi_{\mathcal{B}}(\mathbf{v}) = [\mathbf{v}]_{\mathcal{B}}.\]

This map allows us to translate abstract vector space operations (vector addition, scalar multiplication) into concrete arithmetic in \( \mathbb{F}^n \). It also allows us to represent linear transformations \( T: V \to W \) as matrices. If \( \dim(V)=n \) and \( \dim(W)=m \), and we choose bases \( \mathcal{B} = (\mathbf{b}_1, \dots, \mathbf{b}_n) \) for \( V \) and \( \mathcal{C} \) for \( W \), then \( T \) is represented by the \( m \times n \) matrix \( [T]_{\mathcal{C}}^{\mathcal{B}} \) whose \( j \)-th column is \( [T(\mathbf{b}_j)]_{\mathcal{C}} \). It is the unique matrix satisfying:

\[[T(\mathbf{v})]_{\mathcal{C}} = [T]_{\mathcal{C}}^{\mathcal{B}}[\mathbf{v}]_{\mathcal{B}} \quad \text{for all } \mathbf{v} \in V.\]
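As a concrete instance, chosen here purely for illustration, take differentiation \( D: P_2 \to P_1 \) with the monomial bases \( \mathcal{B} = \{1, x, x^2\} \) and \( \mathcal{C} = \{1, x\} \). Column \( j \) of \( [D]_{\mathcal{C}}^{\mathcal{B}} \) is the coordinate vector of the derivative of the \( j \)-th basis polynomial, and multiplying by \( [p]_{\mathcal{B}} \) then differentiates \( p \) in coordinates. The Python sketch encodes polynomials as coefficient lists (constant term first); `derivative`, `matrix_of`, and `mat_vec` are hypothetical helper names.

```python
def derivative(coeffs):
    """Differentiate a polynomial given by coefficients [a0, a1, ..., an].
    With monomial bases, input and output lists are coordinate vectors."""
    return [k * a for k, a in enumerate(coeffs)][1:]


def matrix_of(T, basis_B, dim_C):
    """Matrix whose j-th column is T(b_j), where T returns coordinates
    relative to C as a list of length dim_C."""
    cols = [T(b) for b in basis_B]
    return [[cols[j][i] for j in range(len(basis_B))] for i in range(dim_C)]


def mat_vec(M, v):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


# Monomial basis of P_2 in coordinates: 1, x, x^2.
B = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
D = matrix_of(derivative, B, 2)
print(D)  # [[0, 1, 0], [0, 0, 2]]

p = [2, 3, 4]         # p(x) = 2 + 3x + 4x^2, i.e. [p]_B
print(mat_vec(D, p))  # [3, 8], i.e. p'(x) = 3 + 8x
```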

In essence, a basis is the fundamental coordinate system that unlocks the computational power of linear algebra, transforming geometric and abstract problems into solvable algebraic ones. Its properties of spanning and linear independence ensure that this coordinate system is both comprehensive and efficient.