A Comprehensive Analysis of the Sigmoid Family of Activation Functions

Introduction

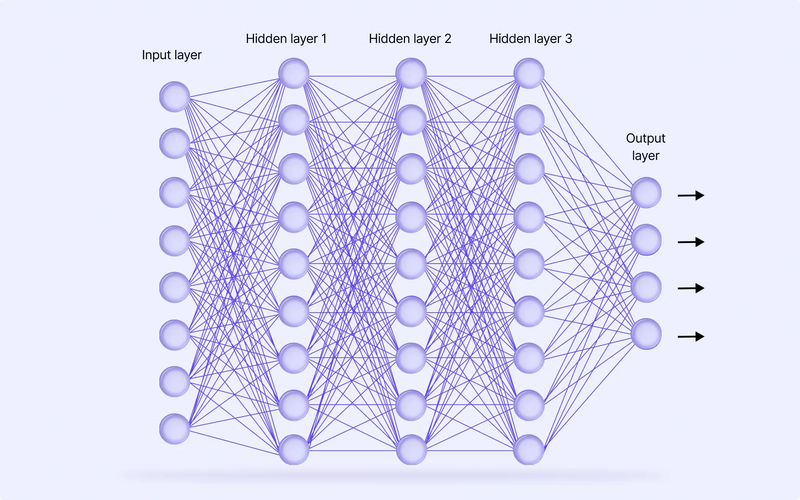

The Sigmoid family represents a critical evolutionary step in the development of neural networks. Moving beyond the non-differentiable classical functions, these S-shaped (sigmoidal) functions introduced the two essential properties needed for trainable, multi-layer networks: differentiability and non-linearity. This article examines the most prominent members of this family (Sigmoid, Tanh, and their computationally efficient "Hard" variants), covering their mathematical foundations, deriving their gradients, and evaluating their modern relevance.

- Sigmoid / Logistic Activation Function

The Sigmoid function was the cornerstone of early neural networks, particularly for binary classification tasks. It smoothly squashes any real number into a tight range between 0 and 1, making it interpretable as a probability.

Formula:

\[ f(x) = \frac{1}{1 + e^{-x}} \]

Range: (0, 1)
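The formula can be evaluated directly, but a naive `1 / (1 + exp(-x))` overflows in floating point for large negative inputs. The following is a minimal sketch in plain Python (the function name `sigmoid` and the branching strategy are illustrative choices, not something prescribed by the formula) that uses an algebraically equivalent form for negative inputs:

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid, arranged so that exp() never receives a large positive argument."""
    if x >= 0:
        # exp(-x) is at most 1 here, so it cannot overflow.
        return 1.0 / (1.0 + math.exp(-x))
    # For negative x, use the equivalent form e^x / (1 + e^x).
    z = math.exp(x)
    return z / (1.0 + z)


for x in (-1000.0, -2.0, 0.0, 2.0, 1000.0):
    print(f"sigmoid({x}) = {sigmoid(x)}")
```

Mathematically the range is the open interval (0, 1); in floating point, extreme inputs round to exactly 0.0 or 1.0.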

Derivation of the Gradient

The derivation of the Sigmoid's derivative is elegant and reveals why it suffers from the vanishing gradient problem.

Let \( f(x) = \frac{1}{1 + e^{-x}} \).

We can find the derivative using the chain rule. First, we rewrite the function:

\[ f(x) = (1 + e^{-x})^{-1} \]

Now, we differentiate:

\[ f'(x) = -1 \cdot (1 + e^{-x})^{-2} \cdot \frac{d}{dx}(1 + e^{-x}) \]

\[ f'(x) = -(1 + e^{-x})^{-2} \cdot (-e^{-x}) \]

\[ f'(x) = \frac{e^{-x}}{(1 + e^{-x})^{2}} \]

This is the derivative, but we can express it in a more insightful form. We can rearrange it in terms of the original function \( f(x) \):

\[ f'(x) = \frac{e^{-x}}{(1 + e^{-x})^{2}} = \frac{1}{1 + e^{-x}} \cdot \frac{e^{-x}}{1 + e^{-x}} \]

The first factor is \( f(x) \) itself. For the second, notice that \( \frac{e^{-x}}{1 + e^{-x}} = 1 - \frac{1}{1 + e^{-x}} = 1 - f(x) \). Therefore:

\[ f'(x) = f(x) \cdot (1 - f(x)) \]

Final Derivative:

\[ f'(x) = f(x)(1 - f(x)) \]
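One way to sanity-check the derivation is to compare the closed form \( f(x)(1 - f(x)) \) against a numerical derivative. The sketch below uses a central finite difference; the helper names and the step size `h = 1e-5` are assumptions made for illustration.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x: float) -> float:
    """Closed-form derivative f(x) * (1 - f(x))."""
    s = sigmoid(x)
    return s * (1.0 - s)


def central_difference(f, x: float, h: float = 1e-5) -> float:
    """Numerical derivative estimate (f(x + h) - f(x - h)) / (2h)."""
    return (f(x + h) - f(x - h)) / (2.0 * h)


points = (-4.0, -1.0, 0.0, 0.5, 3.0)
max_error = max(abs(sigmoid_grad(x) - central_difference(sigmoid, x)) for x in points)
print(f"largest gap between closed form and finite difference: {max_error:.2e}")
```

The two agree up to the small error expected from the finite-difference approximation.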

Analysis in Point Form

* Advantages:

* Smooth and Differentiable: Its smooth curve provides a well-defined gradient everywhere, enabling the use of backpropagation.

* Interpretable Output: The output range (0, 1) is ideal for representing probabilities, making it a natural choice for the output layer in binary classification.

* Monotonic: The function is always increasing, which simplifies the relationship between input and output.

* Disadvantages:

* Vanishing Gradients: This is its fatal flaw. The derivative \( f'(x) = f(x)(1 - f(x)) \) peaks at 0.25 (at \( x = 0 \)) and approaches zero for large positive or negative inputs, where \( f(x) \) saturates near 1 or 0. Because backpropagation multiplies these factors layer by layer, the gradient shrinks rapidly with depth, which stalls learning in deep networks.

* Non-Zero-Centered: The output is always positive. When a neuron's inputs are all positive, the gradients with respect to its incoming weights all share the same sign, which leads to inefficient "zig-zag" dynamics during gradient descent.

* Computationally Expensive: Evaluating the exponential (\( e^{-x} \)) costs more than the comparisons and multiply-adds that piecewise linear activations need.

* Modern Use Case:

* Output layer for binary classification where the network must output a probability. It has been largely replaced by ReLU and its variants in hidden layers due to the vanishing gradient problem.
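The vanishing gradient behaviour listed above can be made concrete with a few numbers. The sketch below prints the local derivative at several inputs and then computes the largest value the product of activation derivatives could take across a stack of layers. It deliberately ignores the weight matrices, which is a simplifying assumption: in a real network the weights also scale the gradient.

```python
import math


def sigmoid_grad(x: float) -> float:
    s = 1.0 / (1.0 + math.exp(-x))
    return s * (1.0 - s)


# The local gradient shrinks quickly as the input moves away from zero.
for x in (0.0, 2.0, 5.0, 10.0):
    print(f"x = {x:5.1f}   f'(x) = {sigmoid_grad(x):.6f}")


def best_case_activation_factor(depth: int) -> float:
    """Upper bound on the product of sigmoid derivatives over `depth` layers.

    Each factor is at most 0.25 (reached only at x = 0), so the product
    is at most 0.25 ** depth. Weights are ignored in this illustration.
    """
    return 0.25 ** depth


for depth in (1, 5, 10, 20):
    print(f"depth = {depth:2d}   bound = {best_case_activation_factor(depth):.3e}")
```

Even in this best case, the activation term alone shrinks geometrically with depth.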

- Tanh (Hyperbolic Tangent) Activation Function

The Tanh function is a zero-centered version of the sigmoid. It squashes a real-valued number to a range between -1 and 1.

Formula:

\[ f(x) = \tanh(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}} = \frac{2}{1 + e^{-2x}} - 1 \]

Range: (-1, 1)
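The last form in the formula says that Tanh is a rescaled and shifted sigmoid: \( \tanh(x) = 2\,\sigma(2x) - 1 \), where \( \sigma \) denotes the sigmoid. A quick numerical check against the standard library's `math.tanh` is sketched below; the function names are illustrative.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def tanh_via_sigmoid(x: float) -> float:
    """tanh(x) written as 2 * sigmoid(2x) - 1, i.e. 2 / (1 + e^{-2x}) - 1."""
    return 2.0 * sigmoid(2.0 * x) - 1.0


points = (-3.0, -0.5, 0.0, 0.5, 3.0)
max_gap = max(abs(tanh_via_sigmoid(x) - math.tanh(x)) for x in points)
print(f"largest gap to math.tanh: {max_gap:.2e}")
```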

Derivation of the Gradient

The derivative of Tanh can be found using the quotient rule or by recognizing its relationship to hyperbolic functions. We'll use the quotient rule.

Let \( f(x) = \frac{\sinh(x)}{\cosh(x)} \), where \( \sinh(x) = \frac{e^x - e^{-x}}{2} \) and \( \cosh(x) = \frac{e^x + e^{-x}}{2} \).

Using the quotient rule, together with \( \frac{d}{dx}\sinh(x) = \cosh(x) \) and \( \frac{d}{dx}\cosh(x) = \sinh(x) \): \( f'(x) = \frac{\cosh(x) \cdot \cosh(x) - \sinh(x) \cdot \sinh(x)}{\cosh^2(x)} \)

This simplifies using the hyperbolic identity \( \cosh^2(x) - \sinh^2(x) = 1 \):

\[ f'(x) = \frac{1}{\cosh^2(x)} = \text{sech}^2(x) \]

More commonly, it is expressed in terms of the original \( \tanh(x) \) function. From the identity:

\[ 1 - \tanh^2(x) = \text{sech}^2(x) \]

Therefore, the derivative is:

\[ f'(x) = 1 - [\tanh(x)]^2 = 1 - f(x)^2 \]

Final Derivative:

\[ f'(x) = 1 - f(x)^2 \]
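As with the sigmoid, the result \( 1 - f(x)^2 \) can be checked numerically. The sketch below compares it with a central finite difference of `math.tanh`; the helper names and the step size are assumptions for illustration.

```python
import math


def tanh_grad(x: float) -> float:
    """Closed-form derivative 1 - tanh(x)^2."""
    t = math.tanh(x)
    return 1.0 - t * t


def central_difference(f, x: float, h: float = 1e-5) -> float:
    """Numerical derivative estimate (f(x + h) - f(x - h)) / (2h)."""
    return (f(x + h) - f(x - h)) / (2.0 * h)


points = (-3.0, -1.0, 0.0, 0.5, 2.0)
max_error = max(abs(tanh_grad(x) - central_difference(math.tanh, x)) for x in points)
print(f"largest gap between closed form and finite difference: {max_error:.2e}")
```

Note that the peak derivative is 1 at \( x = 0 \), four times the sigmoid's peak of 0.25.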

Analysis in Point Form

* Advantages:

* Zero-Centered: The output range (-1, 1) is centered around zero. This is a major improvement over the sigmoid, as it typically leads to faster convergence during training by reducing the zig-zag dynamics in gradient updates.

* Smooth and Differentiable: Like the sigmoid, it is well-suited for gradient-based optimization.

* Disadvantages:

* Vanishing Gradients: It still suffers from the vanishing gradient problem. For very large positive or negative inputs, the function saturates to ±1, and the derivative \( 1 - f(x)^2 \) approaches zero.

* Computationally Expensive: It still requires exponentiation operations.

* Modern Use Case:

* It is generally preferred over the sigmoid for hidden layers in networks where zero-centered activations are beneficial. However, for very deep networks, it has still been superseded by ReLU due to the persistent vanishing gradient issue.
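The zero-centering property discussed above is easy to see numerically. In the sketch below, a small set of inputs symmetric around zero (chosen here purely for illustration) is passed through both functions, and the mean output is compared.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


# Inputs symmetric around zero; the specific values are illustrative.
inputs = [-3.0, -2.0, -1.0, 0.0, 1.0, 2.0, 3.0]

mean_sigmoid = sum(sigmoid(x) for x in inputs) / len(inputs)
mean_tanh = sum(math.tanh(x) for x in inputs) / len(inputs)

print(f"mean sigmoid output: {mean_sigmoid:.4f}")  # centered on 0.5
print(f"mean tanh output:    {mean_tanh:.4f}")     # centered on 0
```

Because \( \sigma(x) + \sigma(-x) = 1 \) and \( \tanh(-x) = -\tanh(x) \), symmetric inputs average to 0.5 under the sigmoid and to 0 under Tanh.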

- Hard Sigmoid & Hard Tanh

These are piecewise linear approximations of their smooth counterparts. They are designed to capture the general shape of the sigmoid/tanh functions while being much faster to compute, which is crucial for inference on low-power devices.

Hard Sigmoid Formula:

\[ f(x) = \begin{cases} 0 & x < -2.5 \\ 1 & x > 2.5 \\ 0.2x + 0.5 & \text{otherwise} \end{cases} \]

Hard Tanh Formula:

\[ f(x) = \begin{cases} -1 & x < -1 \\ 1 & x > 1 \\ x & \text{otherwise} \end{cases} \]
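Both piecewise definitions reduce to a clamp of a linear function, which is what makes them cheap. The sketch below implements them in plain Python with `min` and `max`, using the slope 0.2 and cutoffs ±2.5 given above. Some libraries define Hard Sigmoid with a different slope and cutoff, so these constants should be treated as this article's convention rather than a universal one.

```python
def hard_sigmoid(x: float) -> float:
    """Clamp 0.2 * x + 0.5 to the interval [0, 1]."""
    return min(1.0, max(0.0, 0.2 * x + 0.5))


def hard_tanh(x: float) -> float:
    """Clamp x to the interval [-1, 1]."""
    return min(1.0, max(-1.0, x))


for x in (-5.0, -1.0, 0.0, 0.3, 1.0, 5.0):
    print(f"x = {x:5.1f}   hard_sigmoid = {hard_sigmoid(x):.2f}   hard_tanh = {hard_tanh(x):.2f}")
```

No exponential is evaluated: each call costs at most a multiply, an add, and two comparisons.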

Derivation of the Gradient

The derivatives are straightforward as these are piecewise linear functions.

Hard Sigmoid Derivative:

\[ f'(x) = \begin{cases} 0 & x < -2.5 \\ 0 & x > 2.5 \\ 0.2 & \text{otherwise} \end{cases} \]

Hard Tanh Derivative:

\[ f'(x) = \begin{cases} 0 & |x| > 1 \\ 1 & |x| < 1 \end{cases} \]

(The derivative is technically undefined at the kinks, \( x = -2.5, 2.5 \) for Hard Sigmoid and \( x = -1, 1 \) for Hard Tanh. In practice a value is chosen by convention, typically 0.)
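A minimal sketch of these derivatives follows. It adopts the convention mentioned in the note above and returns 0 exactly at the kink points; other implementations may pick a different value there, so this choice is an assumption.

```python
def hard_sigmoid_grad(x: float) -> float:
    """0.2 strictly inside (-2.5, 2.5), otherwise 0 (including at the kinks)."""
    return 0.2 if -2.5 < x < 2.5 else 0.0


def hard_tanh_grad(x: float) -> float:
    """1 strictly inside (-1, 1), otherwise 0 (including at the kinks)."""
    return 1.0 if -1.0 < x < 1.0 else 0.0


for x in (-3.0, -1.0, 0.0, 0.5, 2.0, 2.5):
    print(f"x = {x:5.1f}   hard_sigmoid' = {hard_sigmoid_grad(x)}   hard_tanh' = {hard_tanh_grad(x)}")
```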

Analysis in Point Form

* Advantages:

* Computational Efficiency: They replace expensive exponentiation operations with simple comparisons and linear functions, leading to significant speed-ups, especially in specialized hardware.

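* Partially Mitigated Vanishing Gradients: In their linear region, they have a constant, non-zero gradient (0.2 for Hard Sigmoid, 1 for Hard Tanh), so the gradient does not decay gradually as the input moves away from zero. Outside that region the gradient is exactly zero, so saturated units still block gradient flow.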

* Disadvantages:

* Non-Differentiable at Points: The "kinks" in the piecewise function mean the derivative is undefined at specific points (e.g., \( x = -1, 1 \) for Hard Tanh). This is typically handled by using a subgradient during backpropagation.

* Reduced Expressivity: The approximation is less smooth than the original functions, which can theoretically limit the network's ability to model very fine, smooth details in the data.

* Modern Use Case:

* Efficient Inference: They are primarily used in production systems and on mobile/edge devices where computational resources and latency are critical constraints. They are common in quantized and low-precision neural networks.
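To get a feel for how closely the Hard variants track their smooth counterparts, the sketch below measures the largest absolute gap between each pair on a grid of inputs. The grid range of -6 to 6 and the step of 0.01 are arbitrary choices made for illustration.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def hard_sigmoid(x: float) -> float:
    return min(1.0, max(0.0, 0.2 * x + 0.5))


def hard_tanh(x: float) -> float:
    return min(1.0, max(-1.0, x))


# Evenly spaced grid from -6.00 to 6.00 in steps of 0.01.
grid = [i / 100.0 for i in range(-600, 601)]

sigmoid_gap = max(abs(hard_sigmoid(x) - sigmoid(x)) for x in grid)
tanh_gap = max(abs(hard_tanh(x) - math.tanh(x)) for x in grid)

print(f"max |hard_sigmoid - sigmoid| on grid: {sigmoid_gap:.4f}")
print(f"max |hard_tanh - tanh| on grid:       {tanh_gap:.4f}")
```

On this grid the largest gaps occur at the kinks, where the linear segment meets the flat region. Whether an error of that size matters depends on the model and task, which is the trade-off against the computational savings.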

Conclusion

The Sigmoid family bridged a crucial gap between the non-differentiable classical functions and the modern era of deep learning. While the Sigmoid and Tanh functions enabled the first trainable multi-layer networks, their susceptibility to vanishing gradients limited the depth and performance of these early models. The development of Hard variants addressed the computational cost, making neural networks more practical for deployment.

However, the widespread adoption of the ReLU family marked a paradigm shift, as its non-saturating nature largely solved the vanishing gradient problem for positive inputs, enabling the training of vastly deeper and more powerful networks that define modern deep learning. Today, the Sigmoid family's role is specialized: Tanh remains a strong candidate for certain hidden layers, Sigmoid is the classic choice for probability output, and their Hard variants are essential tools for efficient machine learning at the edge.